An Update to Our Testing on PageRank Sculpting with Nofollow

The author's views are entirely their own (excluding the unlikely event of hypnosis) and may not always reflect the views of Moz.

The actual results of the original test were inconclusive. Plain and simple, my test did not include enough samples to be statistically significant.

By presenting them as conclusive, I unintentionally misinformed all of you. For that, I am extremely sorry.

It is now my goal to make this up to all of you. Below is more information on this and my plan for running a new test.

In the meantime, what should I do about PageRank sculpting?

The first test results should be disregarded. This means that I, along with my co-workers at SEOmoz, recommend neither removing nofollow if it is already in place (we have seen removal have detrimental effects on websites) nor adding it if you don't have it. Quite simply, we don't have enough information. (Which is why I ran the original test in the first place... damn)

Your time is best spent on link building and creating quality content. Tactics like PageRank sculpting are interesting in the short term but pale in comparison to the long-term ROI of building a page that should be found. Remember, the most valuable information that Google ever gave SEOs is in the second sentence on this page. Providing the information that Google wants to make universally accessible, in a search-engine-friendly way, is the best long-term strategy an SEO can have.

What was the old test?

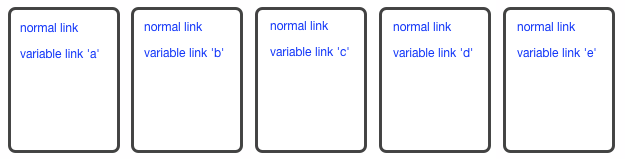

We built 40 websites that looked similar to the following screenshot:


Each website in the experiment used the same template. Each keyword phrase was targeted in the same place on each page, and each page had the same number of images and links and the same amount of text.

Each domain was unique and used a different IP address. Each testing group had different information in the WHOIS records, different hosting providers and different payment methods.

The standardized website layout contained:

- Three pages per domain (the homepage and the keyword-specific content pages)

- One internal inbound link per page (links in content)

- One inbound link to the homepage from a third-party site

- Six total outbound links:

  - Two "junk" links to popular website articles to mimic a natural linking profile (old Digg articles)

  - One normal link to the keyword test page

  - Three modified links (according to the given test) to three separate pages optimized for the given keyword

- Links to internal pages only came from internal links

- The internal links used the anchor text (random English phrase) that was optimized for the given internal page

- Outbound links (aka "junk" links) used anchor text identical to the title tag of the external page being linked to (old social media articles)

This graphic represents an ultra-simplified version of five test sites.

In the old experiment, each of these different "variable links" attempted to sculpt PageRank in a different way. (Variable link 'a' might use nofollow, variable link 'b' might use JavaScript, etc.) Each of the "normal links" would then point to one of five different pages trying to rank for the same term.

For testing purposes, I chose phrases that were completely unique to the Internet. These were phrases that had never been written online before. (For example, "I enjoy spending time with Sam Niccolls". Just kidding Sam... don't hurt me) In theory, the page that corresponded to the most effective PageRank sculpting method would outrank its competition for these isolated phrases.

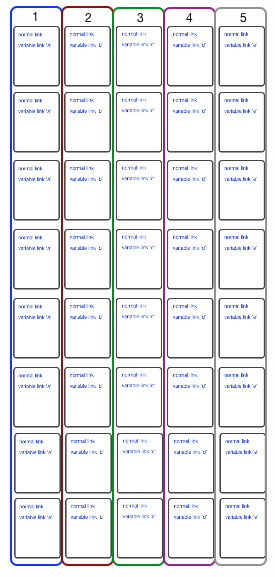

To be confident in our results, we had to compensate for the inherent noisiness of the Internet. To do this, we ran the experiment in parallel eight times.

This shows the full scale of the experiment. Each color (labeled with the numbers 1 to 5) refers to a different PageRank sculpting method. The eight horizontal groups represent the isolated parallel tests.

What went wrong?

As far as I can tell, the experiment was executed without a problem. As it turned out, the problem wasn't with the experiment itself but with how I interpreted the results. I used the wrong metric to evaluate them (the average rank of each testing group) and relied on too few samples.
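To illustrate why eight parallel groups and an average-rank metric are fragile, here is a rough Python simulation. It is a sketch under one loud assumption: the null case, where sculpting has no effect at all and the five pages in each group are ranked in a uniformly random order. Real rankings are noisy in other ways too. The function names are hypothetical helpers, not part of the original analysis.

```python
import random
from statistics import mean
from typing import Dict


def null_average_ranks(rng: random.Random, methods: int = 5, groups: int = 8) -> Dict[int, float]:
    """Average rank of each sculpting method when rankings are pure chance.

    Assumption: in each parallel group, the pages for the `methods` techniques
    are placed in a uniformly random order (rank 1 = best)."""
    positions = {m: [] for m in range(1, methods + 1)}
    for _ in range(groups):
        order = list(positions)  # method labels 1..methods
        rng.shuffle(order)
        for rank, method in enumerate(order, start=1):
            positions[method].append(rank)
    return {m: mean(r) for m, r in positions.items()}


def chance_of_spread(threshold: float, runs: int = 2000, seed: int = 0) -> float:
    """Fraction of null simulations in which the best and worst average
    ranks differ by at least `threshold` positions."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(runs):
        avg = null_average_ranks(rng)
        if max(avg.values()) - min(avg.values()) >= threshold:
            hits += 1
    return hits / runs


print(null_average_ranks(random.Random(1)))
print(chance_of_spread(1.0))
```

Even with no real effect, the methods' average ranks frequently differ by a full position or more, so a "winner" by average rank over eight groups says little on its own.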

What is the new test?

Rather than testing which PageRank sculpting method works the best, I am now going to test if the nofollow method works at all.

We ran the numbers (see math below) and found that we could run this test in either of two ways. The first would require only 20 test pairs (40 domains) but would need a very high rate of success (nofollow beating the control) to be conclusive. The second can detect a much smaller effect, so it requires a much lower success rate, but it needs a much larger sample.

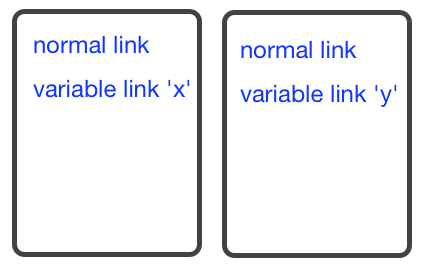

I have both tests planned and would love to hear your feedback on them before we run them. Below is a diagram of the plan to test the nofollow method against a control (null) case.

This diagram shows an ultra-simplified version of two test pages to be used in the new nofollow test. The real versions of the pages are more like the "Horsey Cow Tipper" example at the beginning of the post.

For this new test, the two "normal links" will point to two separate pages trying to rank for the same unique phrase. "Variable link 'x'" will then link to a different page. "Variable link 'y'" will be nofollowed and will also link to a completely separate page. For each test group, we will see which of the two competing pages ranks higher. Our hypothesis is that the page linked to from the page containing the nofollowed link (variable link 'y') will rank higher. We believe this because we think the control page will split its link value roughly equally between its two links and thus will not send its full worth to the page trying to rank for the unique term.
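To make the hypothesis concrete, here is a minimal toy sketch. It assumes a textbook power-iteration PageRank on a hypothetical six-page graph. The page names A, B, TA, TB, XA and YB are illustrative, not the real test pages. The sketch models two assumed treatments of nofollow:

- The nofollowed link is ignored entirely, so the page's value is split only among its followed links. This is the sculpting hypothesis.
- The nofollowed link still takes its share of the split but passes nothing on.

This is not how Google computes rankings. It only shows what each assumption predicts.

```python
from typing import Dict, List, Tuple

# (source page, target page, is_nofollow)
Edge = Tuple[str, str, bool]


def toy_pagerank(nodes: List[str], edges: List[Edge], damping: float = 0.85,
                 iterations: int = 100, nofollow_counts: bool = False) -> Dict[str, float]:
    """Textbook PageRank by power iteration, with two assumed treatments of nofollow.

    nofollow_counts=False: nofollowed links are ignored, so a page's value is split
    only among its followed links (the sculpting hypothesis).
    nofollow_counts=True: a nofollowed link still takes its share of the split,
    but that share is discarded instead of being passed on.
    """
    n = len(nodes)
    out_degree = {u: 0 for u in nodes}
    followed: Dict[str, List[str]] = {u: [] for u in nodes}
    for src, dst, nofollow in edges:
        if nofollow and not nofollow_counts:
            continue  # link is invisible to the calculation
        out_degree[src] += 1
        if not nofollow:
            followed[src].append(dst)

    rank = {u: 1.0 / n for u in nodes}
    for _ in range(iterations):
        # Pages with no counted outbound links spread their value over all pages.
        dangling = sum(rank[u] for u in nodes if out_degree[u] == 0)
        base = (1.0 - damping) / n + damping * dangling / n
        new_rank = {u: base for u in nodes}
        for u in nodes:
            if out_degree[u] == 0:
                continue
            share = damping * rank[u] / out_degree[u]
            for v in followed[u]:
                new_rank[v] += share
        rank = new_rank
    return rank


# Hypothetical pair: A is the control page, B carries the nofollowed variable link 'y'.
nodes = ["A", "B", "TA", "TB", "XA", "YB"]
edges = [
    ("A", "TA", False), ("A", "XA", False),  # control: normal link + variable link 'x'
    ("B", "TB", False), ("B", "YB", True),   # test: normal link + nofollowed link 'y'
]
sculpted = toy_pagerank(nodes, edges)
evaporated = toy_pagerank(nodes, edges, nofollow_counts=True)
print(sculpted["TB"] > sculpted["TA"])                    # True
print(abs(evaporated["TB"] - evaporated["TA"]) < 1e-12)  # True
```

Under the first assumption, TB ends up ahead of TA. Under the second, they tie and some value simply disappears. Telling those two cases apart in real rankings is roughly the question the paired test asks.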

This test will then be duplicated 20 times as seen in the diagram below.

Diagram showing simplified test pages from the new nofollow test

Are 20 tests (40 domains) really enough? We think so, but only for one very specific outcome. For this test to be significant at 95% confidence, at least 15 of the 20 tests will need to show that nofollow was an effective PageRank sculpting method.

If this doesn't happen, we will need to run a third test with a much bigger sample. If we want to be 95% sure of detecting, at 95% significance, that nofollow is better even when the probability that nofollow wins a given trial is only 5 in 8 (62.5%), we will need 168 test pairs. (See math below.)

What keeps you from making the same mistake?

While reworking the old test, I enlisted the help of Ben Hendrickson, who sits a few desks away. Please feel free to check our math before we run the test.

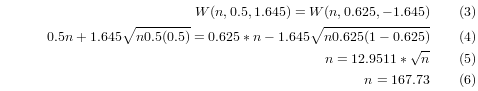

The Math Behind the 168 Pairs Nofollow Test

This test consists of a number of independent trials. In each trial, either nofollow or the control will rank higher, so the number of wins follows a binomial distribution. Where n is the number of trials and p is the probability that nofollow wins a trial, the normal approximation to the binomial distribution is:

$$\mathrm{Binomial}(n, p) \approx \mathcal{N}\big(np,\ np(1-p)\big)$$

Where W is the number of wins and z is the number of standard deviations above the mean, the formula for the number of wins is thus:

$$W(n, p, z) = np + z\sqrt{np(1-p)}$$

The null hypothesis is that nofollow wins with p = 0.5 (even odds). To reject the null hypothesis in favor of the hypothesis that nofollow is an effective PageRank sculpting method with 95% confidence, we would need to see at least W(n, 0.5, 1.645) wins. How many wins will we actually see? We are 95% sure to see at least W(n, p, -1.645), where p is the actual probability that nofollow wins a given trial. Suppose we set a lower bound of p = 5/8 = 0.625 for the effect we are trying to detect. Then, if nofollow really is at least that much better, we will see at least W(n, 0.625, -1.645) wins with 95% likelihood. We can set this lower bound on the wins we expect equal to the number of wins we need for 95% confidence, and then solve for the number of trials.
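The solving step is written out below. This is our reconstruction of the omitted algebra, using $W(n,p,z) = np + z\sqrt{np(1-p)}$ with z = 1.645 and our own rounding of intermediate values:

$$0.5\,n + 1.645\sqrt{0.25\,n} = 0.625\,n - 1.645\sqrt{0.234375\,n}$$

$$0.125\,n = 1.645\left(\sqrt{0.25} + \sqrt{0.234375}\right)\sqrt{n} \approx 1.6189\,\sqrt{n}$$

$$\sqrt{n} \approx 12.951 \quad\Longrightarrow\quad n \approx 167.7$$

Rounding up to a whole number of trials gives n = 168.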

So we conclude that we need 168 trials. If this test fails to show that nofollow is better, then we are 95% sure that nofollow wins trials less than 62.5% of the time. We wouldn't be able to say that nofollow sculpting doesn't matter. We could say, however, that its effect doesn't seem large compared with the other factors we were unable to control for in our experiment.
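As a sanity check on the arithmetic, the same normal-approximation calculation can be scripted. This is a minimal sketch using only Python's standard library. `wins` mirrors the W(n, p, z) used in the text, and `trials_needed` is a hypothetical helper name. It uses the exact one-sided 95% quantile (about 1.6449) rather than the rounded 1.645, which does not change the answer.

```python
import math
from statistics import NormalDist


def wins(n: float, p: float, z: float) -> float:
    """W(n, p, z): the win count z standard deviations from the mean of
    Binomial(n, p), using the normal approximation."""
    return n * p + z * math.sqrt(n * p * (1 - p))


def trials_needed(p0: float = 0.5, p1: float = 0.625,
                  alpha: float = 0.05, power: float = 0.95) -> int:
    """Smallest n with W(n, p1, -z_power) >= W(n, p0, z_alpha)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha)  # one-sided, about 1.645
    z_power = NormalDist().inv_cdf(power)      # about 1.645
    # n*p0 + z_alpha*sqrt(n*p0*(1-p0)) = n*p1 - z_power*sqrt(n*p1*(1-p1)),
    # divided through by sqrt(n) and solved for sqrt(n):
    root_n = (z_alpha * math.sqrt(p0 * (1 - p0))
              + z_power * math.sqrt(p1 * (1 - p1))) / (p1 - p0)
    return math.ceil(root_n ** 2)


print(trials_needed())  # 168
```

With 168 trials, the wins we expect to see (about 94.68) just clear the wins we need (about 94.66). With 167 trials, they fall short.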

The Math Behind the 20 Pairs Nofollow Test

So then why don't we run this as our next test? The answer is simple: 168 trials (336 domains) is a lot of domains to set up. So maybe we will get lucky. If we do a good job of controlling for other factors and nofollow sculpting has a modest effect, perhaps nofollow will win much more than 62.5% of the time on average.

To show at 95% significance that nofollow does better than the control, we will need to see 15 wins for nofollow out of the 20 trials. One could do more math for this, but we actually got this number from an online binomial probability calculator. Plug in p = 0.5 (the null hypothesis) and n = 20. Then try different numbers of wins until you find the lowest number whose chance of being equaled or exceeded is less than 5%. That number should be 15.
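The calculator procedure can be reproduced in a few lines of Python using only the standard library (`math.comb` needs Python 3.8+). This is a sketch of the same exact binomial calculation, not the specific online tool we used. The helper names are hypothetical.

```python
from math import comb
from typing import Optional


def upper_tail(n: int, k: int, p: float = 0.5) -> float:
    """P(X >= k) for X ~ Binomial(n, p), summed exactly term by term."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k, n + 1))


def critical_wins(n: int, p: float = 0.5, alpha: float = 0.05) -> Optional[int]:
    """Smallest number of wins k with P(X >= k) < alpha under the null hypothesis."""
    for k in range(n + 1):
        if upper_tail(n, k, p) < alpha:
            return k
    return None


print(critical_wins(20))             # 15
print(round(upper_tail(20, 15), 4))  # 0.0207
print(round(upper_tail(20, 14), 4))  # 0.0577
# Chance of reaching 15 wins if nofollow truly wins 62.5% of trials:
print(round(upper_tail(20, 15, 0.625), 3))
```

The last line shows why the 20-pair design only works for one outcome. If nofollow's true win rate were just 62.5%, it would reach 15 wins in fewer than one in five runs of the test.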

Is there any takeaway from the original test?

Thousands of people read the original post on PageRank sculpting methods and, based on my assessment, took its conclusions as fact. It wasn't until two days after the original entry was posted that Darren Slatten pointed out my mistake. By then the damage had already been done, and it would have been practically impossible to contact everyone who had read the post.

The few people who did notice were (rightfully) upset with me. They vented their frustration with me and SEOmoz on their personal blogs, on Twitter and Facebook, in e-mails and in the comments on the original post. This was a great (although unintentional) case study in how the Internet affects the distribution of information.

(Mis)information on the Internet does not die.

We saw the very real effects of this on a large scale when the Iranian election was covered by ordinary Iranians on Twitter, and on a very small scale with the results of my first experiment. Once the information reached the Internet, it was out of the control of both its creator and those trying to silence it. For me, this was a much-needed reminder of how much the Internet empowers its users. Together, all of you are a force to be reckoned with :-)

One more thing... Why don't you post the actual URLs so we can investigate them ourselves?

I will do this, but not right now. Posting them now would compromise the integrity of this and future tests, because linking to the test pages would change their link profiles. I am happy to share them once the tests have been run and we no longer need the framework. I hope that makes sense :-)

I would love to hear your thoughts and constructive criticism on our new test. Please feel free to chat your brains out in the comments below :-)
