How to Future-Proof Your SEO Strategy with Relevance Engineering

The AI shift is reshaping search

If algorithm updates over the last few years have shifted how our industry approaches SEO, then Google's announcements at I/O 2025 will force us to rewrite the playbook and reorient ourselves around the future of search.

Gone are the days when SEO agencies sold Penguin recovery plans to burned companies and uninitiated marketers. Back then, adapting to the ever-changing search landscape was easier because the curveballs weren't disruptive enough to change the game. SEOs have been adjusting to life with AI Overviews infiltrating an increasing number of searches, but AI Mode has the potential to shift our industry in another direction.

Here are the main takeaways you should have after reading this article:

- AI Mode represents a potential shift in how Google summarizes and surfaces content to users. We are still in the nascent phase of Google’s rollout, which is confined to the United States, so it will be interesting to see how quickly adoption grows.

- Relevance Engineering is a framework that builds on semantic SEO and aligns with how LLMs retrieve and surface information to users.

- SEO could evolve into GEO (Generative Engine Optimization), where earning organic traffic and visibility via LLMs will depend on passage-level optimization, topical depth, and Brand Authority™ (i.e., the trust and credibility your brand has earned as a reliable, go-to source).

- This article provides a tactical how-to guide to increasing your brand’s visibility in an increasingly competitive AI landscape.

What is AI Mode?

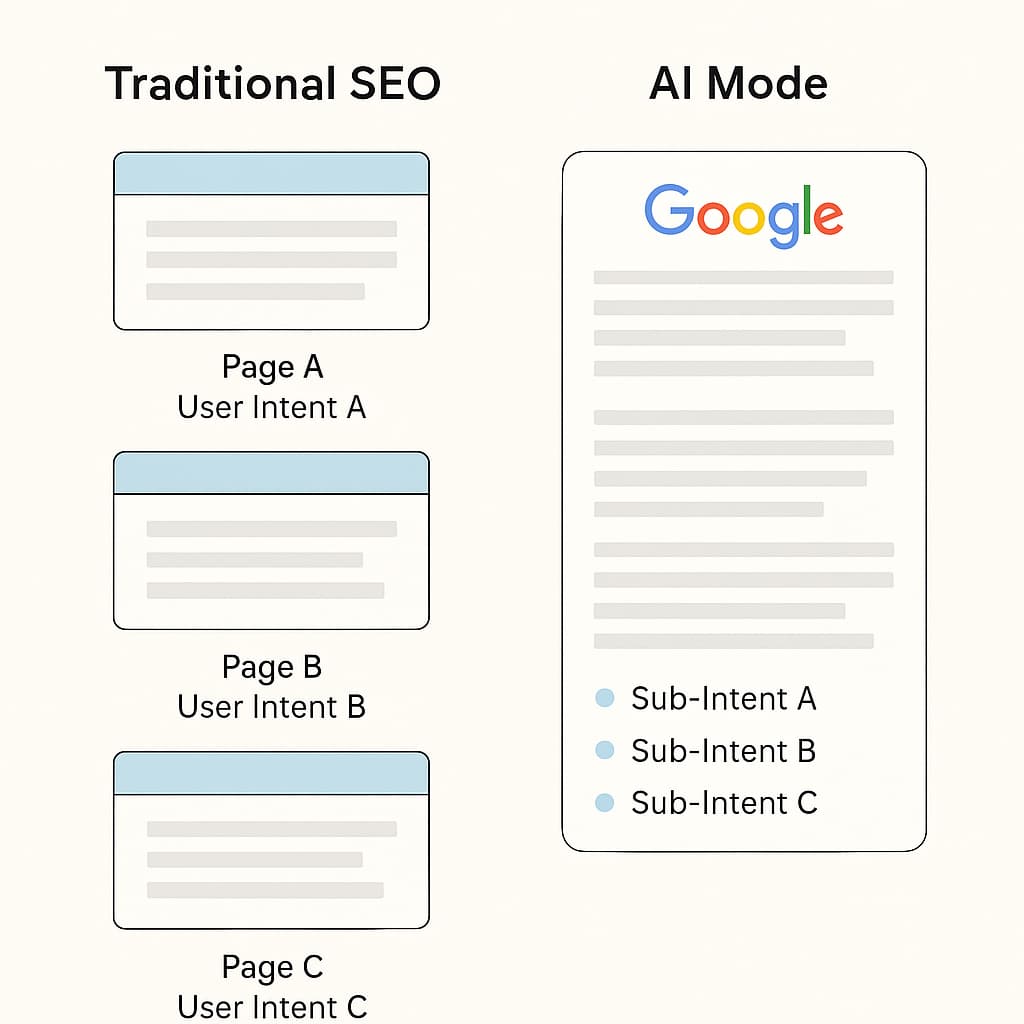

Google announced that as of May 2025, AI Mode would be available to all users in the United States, with plans for a broader international rollout in the coming months. Organic traffic already faces stiff competition from SERP features and sponsored ads, and the wider adoption of LLM chat interfaces and AI Overviews has the potential to siphon even more clicks. This could significantly reduce visibility for brands that rely on siloed pages to capture users' attention.

AI Mode in Google Search is a radical departure from traditional SERP listings. It serves AI-generated overviews powered by Google's Gemini models. The output from AI Mode blends generative responses with traditional web links, product listings, and other elements built to engage users in a fully immersive and, in some cases, overwhelming experience.

The evolution of the search landscape, including features like AI Mode, will cause a significant disruption:

- Search results could become AI Summaries rather than the traditional 10 blue links we've known since search engines were invented.

- Search will evolve into a post-keyword era, focusing on topical depth and authority rather than ranking individual pages well.

- LLM-powered query expansion will be the foundation of AI Mode's popularity, anticipating and answering follow-up questions users haven't yet thought to ask.

A common theme here is evolution. When Google rolled out its Penguin and Panda updates over a decade ago, the savviest SEOs adapted by creating more holistic marketing strategies instead of relying on underhanded and outdated tactics (RIP links in blog comments). Another watershed moment is on the horizon for the SEO community: we need to think beyond traditional SEO concepts and optimize for discoverability. While SEO’s scope centers on search engines, Generative Engine Optimization (GEO) extends it to LLMs.

But without a proper methodology, it’s just another name.

Enter Relevance Engineering, a new framework that blends semantic SEO, topically deep and citation-worthy content architecture, and AI comprehension.

What makes Relevance Engineering different from SEO?

Relevance Engineering is the evolution of semantic SEO tailored for LLM-based retrieval, interpretation, and content summarization. Traditional SEO focuses on building authority for specific web pages; Relevance Engineering, by contrast, isn't susceptible to "get ranked quick" schemes and general manipulation (at least not yet).

Four core principles help define Relevance Engineering:

- Passage-level optimization: LLMs extract and synthesize relevant passages across the web instead of retrieving full web pages from an index. Each section of your content should address a specific user intent or question.

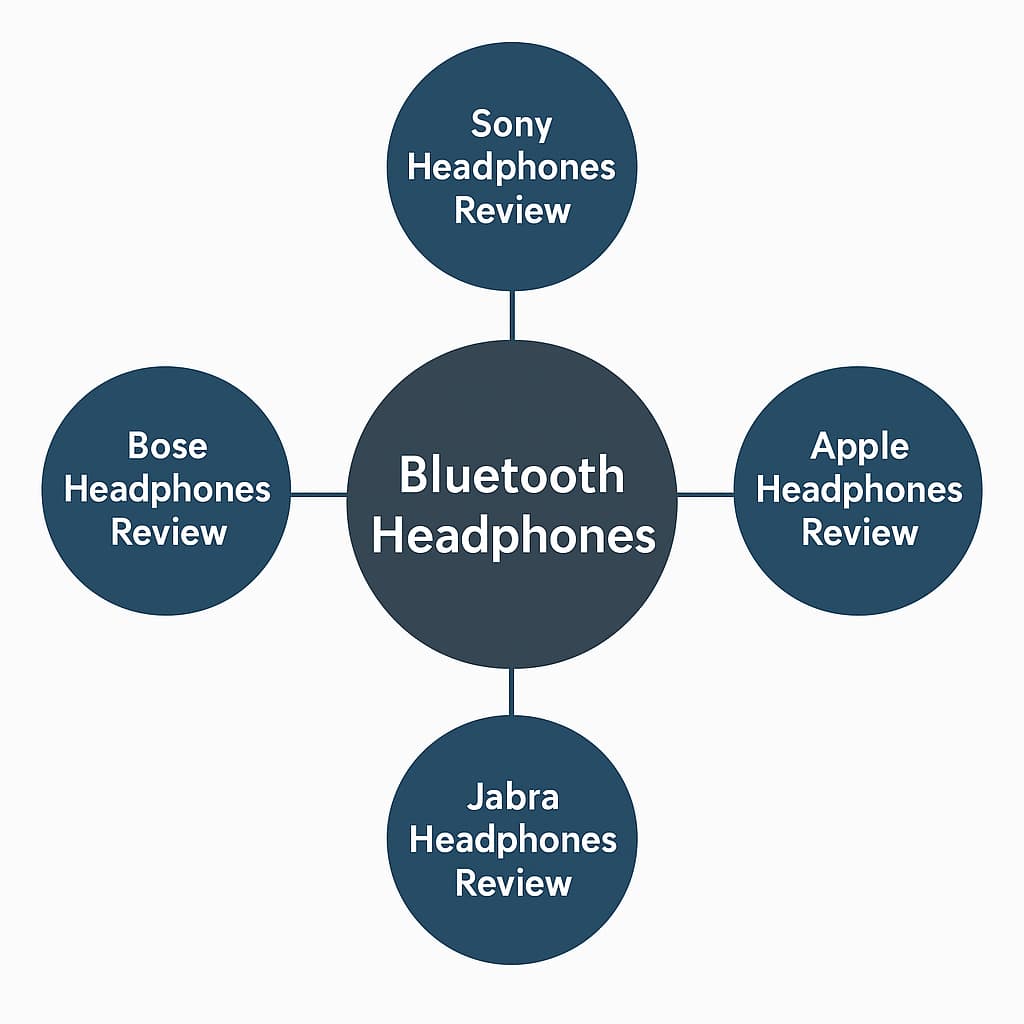

- Semantic similarity: Topical depth and context matter more than optimization for specific keyword phrases. For example, "most reliable Bluetooth headphones under $100" carries far more context than the words alone suggest:

- Affordability: The upper cost limit is $100, so results will filter out premium products from brands such as Sony and Bose.

- Reliability: Although the user is cost-conscious, they expect headphones to last longer than the typical wear-and-tear cycle.

- Bluetooth Headphones: The user prefers wireless connections, so results may favor products praised for stable connectivity over those with negative feedback.

- Citation likelihood: Unfortunately, the run-of-the-mill, ad-heavy affiliate blog is unlikely to be featured in responses curated by LLMs. Well-structured, authoritative, citation-worthy content is more likely to be included in AI-generated summaries.

- Brand Authority: LLMs tend to cite brands that users recognize as trustworthy or authoritative. Building your authority through consistent quality, original research, and a track record of relevance can increase the likelihood that your brand is cited among industry-leading publications. In an increasingly competitive search landscape, having a notable brand can only increase your chances of being visible in AI summaries.
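To make passage-level retrieval and semantic similarity more concrete, here is a toy sketch. It assumes a page is plain text whose sections start with lines beginning with "## " (a hypothetical convention), splits the page into passages, and scores each one against a query using cosine similarity over word counts. Real LLM retrieval relies on learned embeddings, and Google hasn't published how AI Mode selects passages, so treat this only as an illustration of why a focused, intent-specific section can outscore the rest of a page.

```python
import math
import re
from collections import Counter


def split_passages(page: str) -> dict[str, str]:
    """Split plain text into {heading: body} passages.

    Assumes lines starting with '## ' are headings (a hypothetical convention).
    Text before the first heading is stored under 'Intro'.
    """
    passages: dict[str, str] = {}
    heading, body = "Intro", []
    for line in page.splitlines():
        if line.startswith("## "):
            if body:
                passages[heading] = " ".join(body)
            heading, body = line[3:].strip(), []
        elif line.strip():
            body.append(line.strip())
    if body:
        passages[heading] = " ".join(body)
    return passages


def term_counts(text: str) -> Counter:
    # Lowercased word counts: a crude stand-in for an embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def rank_passages(page: str, query: str) -> list[tuple[str, float]]:
    """Score each passage (heading + body) against the query, best first."""
    q = term_counts(query)
    scored = [
        (heading, cosine(term_counts(f"{heading} {body}"), q))
        for heading, body in split_passages(page).items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical page content for illustration.
sample_page = """Intro text about our headphone reviews.
## Best budget Bluetooth headphones under $100
These reliable Bluetooth headphones cost under $100 and hold up to daily use.
## Premium noise cancelling picks
High-end over-ear models for audiophiles.
"""

for heading, score in rank_passages(sample_page, "most reliable Bluetooth headphones under $100"):
    print(f"{score:.2f}  {heading}")
```

Only the section written for the "reliable, under $100" intent scores above zero here; the intro and the premium section share no terms with the query, which is the passage-level point in miniature.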

Before you say, "Well, JB, isn't this just SEO?": yes, this is the foundation of semantic SEO, which stresses creating helpful content and building topical authority and depth, with the added nuance of developing content with AI systems in mind.

Topical authority is no longer just an option

I may sound like a broken record, but topical authority has far more potential for LLMs than "highly optimized" pages focused on exact-match keyword phrases.

Using Moz's Keyword Suggestions by Topics, we can identify several fruitful segments that allow us to cover a topic in depth, ranging from the type of headphones (over-ear vs. earbuds) to affordability ("budget").

In the Bluetooth headphones example highlighted in the previous section, topical coverage and depth at least give your content a fighting chance to be included in the corpus. Building topical authority isn't just a means to appear in AI Overviews or AI Mode; it's an opportunity to future-proof your content and get cited in all burgeoning LLMs. If your website lacks signals demonstrating expertise on a subject, your chances of being discovered are slim to none.

How to build topical authority

- Stop thinking of keyword phrases and reorient your strategy around topics and themes.

- Connect your semantically related pages with descriptive internal links.

- Use entities and schema markup where applicable so LLMs can easily digest and review your structured content.

Using keyword research tools to visualize topic gaps can help you identify opportunities to create new content or refresh existing web pages.

Why AI search demands a new SEO strategy

All of the above raises the question: what exactly changes?

The practice of Generative Engine Optimization is fundamentally about adapting to how AI processes information, and to do that, we need to understand the AI Mode pipeline:

- A user submits a broad or vague query, such as "best family car under $30K".

- Google executes query fan-out, breaking the original search into 8-12 semantically diverse sub-queries.

- Google creates a custom document corpus using the most relevant results based on its interpretation of the question and all semantically adjacent queries.

- The LLM working in the background synthesizes all pertinent information and packages it into a neatly summarized page.
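The pipeline above can be sketched as a toy model to show why relevance across sub-queries matters. Everything here is hypothetical: the sub-queries are hard-coded rather than generated by an LLM, the "index" is a small dictionary of ranked placeholder URLs per sub-query, and `top_k` is an arbitrary cutoff. Google hasn't published how AI Mode assembles its corpus; the sketch only illustrates that a page enters the corpus by ranking for some sub-query, not just the original query.

```python
# Hypothetical stand-in for LLM-generated sub-queries; real fan-out is model-driven.
FAN_OUT = {
    "best family car under $30k": [
        "most reliable suvs for families",
        "fuel-efficient cars under $30,000",
        "top-rated family cars by safety",
    ],
}

# Hypothetical ranked results for each sub-query (URLs are placeholders).
INDEX = {
    "most reliable suvs for families": [
        "example.com/suv-reliability",
        "example.com/best-suvs",
        "example.com/minivans",
    ],
    "fuel-efficient cars under $30,000": ["example.com/mpg-guide", "example.com/best-suvs"],
    "top-rated family cars by safety": ["example.com/crash-tests", "example.com/suv-reliability"],
}


def fan_out(query: str) -> list[str]:
    """Return sub-queries for a query, falling back to the query itself."""
    return FAN_OUT.get(query.lower(), [query.lower()])


def build_corpus(query: str, top_k: int = 2) -> list[str]:
    """Union of the top_k results for every sub-query, de-duplicated in order."""
    corpus: list[str] = []
    for sub_query in fan_out(query):
        for url in INDEX.get(sub_query, [])[:top_k]:
            if url not in corpus:
                corpus.append(url)
    return corpus


print(build_corpus("Best family car under $30k"))
```

In this toy run, `example.com/best-suvs` earns its place through two sub-queries, while `example.com/minivans` misses the cutoff entirely even though it is relevant to the original question.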

In traditional SEO, marginally relevant content can still rank thanks to long-tail keyword matching or a strong link profile. In AI Mode, however, if your content isn't relevant across multiple interpretations of a query, it won't even be part of the corpus for summarization, which leaves it out of the arena altogether. Let's break down the original example to illustrate this point:

Original query: “Best family car under $30k”:

- “Most reliable SUVs for families” --> Family cars tend to require more seats, so this rules out two-door coupes.

- “Fuel-efficient cars under $30,000” --> The cost threshold indicates that a high cost per mile could be a deal breaker, so focus on fuel-efficient vehicles.

- “Top-rated family cars by safety” --> Safety is always a concern, especially among families with younger children.

Content that addresses these subtopics with depth and clarity will have a better chance of being included in the corpus than vapid, topically thin doorway pages.

How to apply Relevance Engineering to your SEO strategy

A crucial distinction when discussing Relevance Engineering is that it does not replace an existing SEO strategy. The core foundation of SEO still rings true:

- Technical SEO is still paramount to facilitate the discovery of your content.

- Expertise in a given niche is still required to get surfaced by both search engines and LLMs.

- Low-quality AI-generated content will continue to lose out to highly informative, helpful content over the long term.

Consider Relevance Engineering an upgrade to your existing frameworks, giving you a more robust strategy that pays dividends from multiple sources. Here's a four-step process for upgrading your SEO:

Step 1: Audit your existing content

Before you create a whole new piece of content, assess what you already have to identify (1) strengths that you can continue building upon and (2) weaknesses that could hinder your visibility with LLMs.

- Map existing content to topic clusters: Catalog the topics your existing content covers and flag any clear hubs with revenue potential. If your website instead contains a lot of siloed content focused on single keywords, with few opportunities to connect pages, you may have to rework the foundation of your content strategy.

- Identify quick wins: After the initial scan, you may unearth several opportunities that fall into one of the following buckets:

- Orphaned content: Do some of your topics have pages that are virtually impossible to reach via your main pages? Internal linking is a vital component, and this presents an opportunity to revisit horizontal (across similar page types) and vertical (within a section) linking.

- Thin content: Depending on the age of your website and how long you've been in SEO, you could have a litany of pages that serve no purpose other than being optimized for low-volume long-tail keywords. Pages with relatively thin content can be reworked or consolidated into main pages.

- Overly specific content: While having a hub-and-spoke strategy for covering topics is essential, consolidating content into one meaty, comprehensive article may help alleviate page bloat and focus search engines and LLMs on your core pages. Merging semantically related content will provide a richer experience for users than navigating multiple pages to find an answer to one specific question.

- Assess passage-level clarity: Does your older content allow LLMs to easily extract a concise answer for a particular question or user intent? Aligning each section of your content to address a distinct sub-intent or question is critical.
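Once you have a crawl export, the orphaned and thin buckets can be flagged with a short script. This sketch assumes a hypothetical `pages` mapping of URL to word count and a `links` list of (source, target) internal links; it treats a page as orphaned when it can't be reached by following internal links from the home page. The 300-word "thin" threshold is an arbitrary placeholder, not a Google rule, so tune it to your niche.

```python
from collections import defaultdict, deque


def audit(
    pages: dict[str, int],
    links: list[tuple[str, str]],
    home: str = "/",
    thin_words: int = 300,
) -> dict[str, list[str]]:
    """Flag pages unreachable from the home page and pages under a word-count threshold."""
    graph: dict[str, list[str]] = defaultdict(list)
    for source, target in links:
        graph[source].append(target)

    # Breadth-first crawl of internal links starting at the home page.
    reachable = {home}
    queue = deque([home])
    while queue:
        for target in graph[queue.popleft()]:
            if target not in reachable:
                reachable.add(target)
                queue.append(target)

    return {
        "orphaned": sorted(url for url in pages if url not in reachable),
        "thin": sorted(url for url, words in pages.items() if words < thin_words),
    }


pages = {  # hypothetical crawl export: URL -> word count
    "/": 500,
    "/headphones": 1800,
    "/headphones/budget": 1200,
    "/headphones/old-deal": 150,
    "/earbuds-2019": 900,
}
links = [  # hypothetical internal links: (source, target)
    ("/", "/headphones"),
    ("/headphones", "/headphones/budget"),
    ("/headphones/budget", "/headphones"),
    ("/earbuds-2019", "/headphones"),
]
print(audit(pages, links))
```

Note that `/earbuds-2019` is still flagged as orphaned: it links out to the hub, but nothing links in, so a crawler starting from your main pages never finds it.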

Step 2: Cluster and organize content

Now that you have a complete inventory of your existing content, you can shift your focus to organizing and clustering pages for topical completeness.

- Use keyword clustering tools to identify subtopics: Organizing your content by topic will allow you to utilize tools like Moz's Keyword Suggestions by Topics to unearth semantically related queries, questions, and subtopics that will enable you to achieve topical authority.

- Example: Moz.com has an expansive inventory of informational content related to various topics. Our team has been working on segmenting all of our content into different buckets such as “Brand Authority,” “Local SEO,” “Competitive Research,” “Analytics,” and more.

- Build interconnected hub-and-spoke structures:

- Pillar content (hub): These pages are gateway content for an entire supporting resource library. The main page should provide a broad overview of a significant topic and link to your content cluster.

- Example: Using Moz.com as our example, the Keyword Research page from The Beginner’s Guide to SEO is our pillar page due to the depth with which we have covered the topic and its current performance.

- Content cluster (spokes): Discoverable from the main pillar page, your supporting content spokes are more detailed pages that cover subtopics with enough depth to (1) encourage users to take the next desired action and (2) surface any individual passage of content to an LLM or SERP feature. These pages should answer additional questions via FAQs, contain helpful videos, and link back to the cluster's central pillar and other subtopics.


- Map topics to the customer journey: Optimizing for keyword searches isn't enough anymore. Think of the questions, pain points, and solutions at the top of your targeted audience's minds during each stage of the purchasing process (awareness, consideration, desire, and action). Mapping topics to each stage will unearth potential questions you can proactively answer with your content.

Here's a hub-and-spoke example for Bluetooth headphones: the main page can walk through budget vs. feature decisions, while the spokes are supporting product reviews.
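Keyword clustering tools handle the grouping step for you, but the underlying idea can be sketched in a few lines. This is a simple greedy approach using word overlap (Jaccard similarity), not how Moz's Keyword Suggestions by Topics works; the stop-word list and the 0.5 threshold are assumptions to tune for your niche. Each resulting cluster is a candidate spoke under a broader hub.

```python
import re

# Words too generic to signal a subtopic (an assumption; tune for your niche).
STOP_WORDS = {"best", "for", "the", "vs", "under", "top"}


def token_set(keyword: str) -> frozenset[str]:
    return frozenset(re.findall(r"[a-z0-9]+", keyword.lower())) - STOP_WORDS


def jaccard(a: frozenset[str], b: frozenset[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cluster_keywords(keywords: list[str], threshold: float = 0.5) -> list[list[str]]:
    """Greedy clustering: each keyword joins the first cluster whose seed it resembles."""
    clusters: list[list[str]] = []
    seeds: list[frozenset[str]] = []
    for keyword in keywords:
        tokens = token_set(keyword)
        for i, seed in enumerate(seeds):
            if jaccard(tokens, seed) >= threshold:
                clusters[i].append(keyword)
                break
        else:  # no similar seed found: start a new cluster
            clusters.append([keyword])
            seeds.append(tokens)
    return clusters


keywords = [  # hypothetical keyword list
    "budget bluetooth headphones",
    "cheap bluetooth headphones",
    "best budget bluetooth headphones",
    "wireless earbuds for running",
    "running earbuds wireless",
    "noise cancelling over-ear headphones",
]
for cluster in cluster_keywords(keywords):
    print(cluster)
```

Word overlap misses true synonyms ("earbuds" vs. "in-ear headphones"), which is why dedicated tools rely on richer semantic signals; the sketch only shows the shape of the output you're aiming for.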

Step 3: Write for both humans & LLMs

Creating content in the age of LLMs requires a dual focus: engaging human readers with informative content and structuring your pages for LLM retrieval. Here are some helpful tips for writing for both human minds & T-1000 (ok, ok, I'll keep the Terminator references to a minimum):

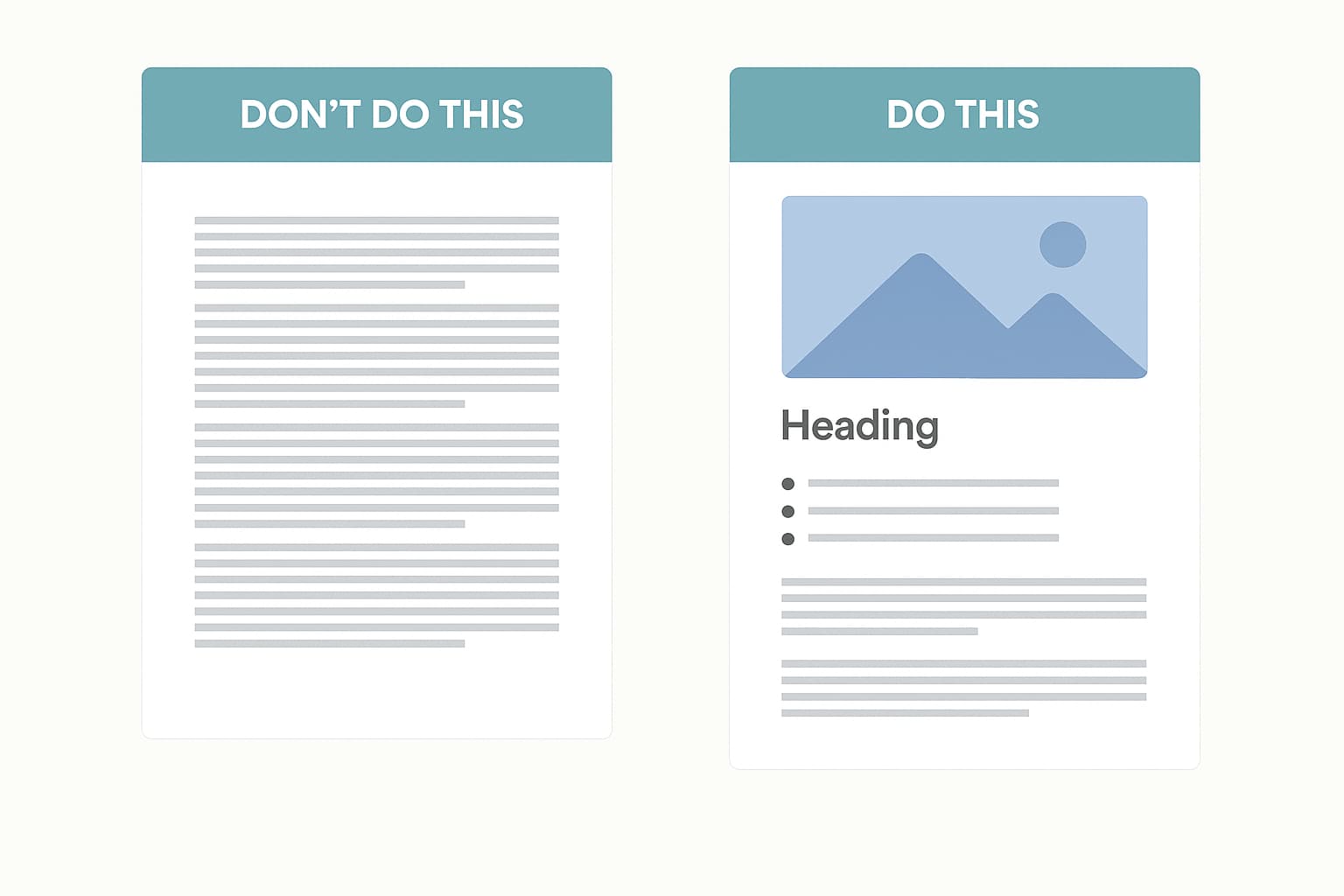

- Break content into digestible, intent-focused sections: Structure your articles with clear headings describing the covered content. Each section should ideally address a single, well-defined sub-intent or answer a specific question. Breaking down your content makes it easier for LLMs to extract particular bits of information to match sub-intents from query fan-out.

- Bonus tip: To enhance the UX and AI parsing, use a modular structure with collapsible FAQs or jump links.

- Be clear and concise: Individual passages need to be clear and to the point. While long-form content is valuable for topical authority, avoid burying key points and answers to specific questions within a sea of incoherent rambling.

- Include content everyone loves: Answer snippets make your content digestible for readers, and they're exactly the kind of passages LLMs love to surface. Examples include:

- Clear definition: Explicitly defining key terms (e.g. "Relevance Engineering is...")

- Supporting statistics and data: "According to a study by X....". Don't forget to cite sources when appropriate.

- Actionable examples: Illustrate concepts with concrete examples.

- Bulleted & numbered lists: No one wants to sift through walls of text. Lists make content easier to consume and, when relevant, more likely to be retrieved as passages.
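For the jump links mentioned in the bonus tip, a table of contents can be generated from your section headings. This sketch assumes a slug convention (lowercase words joined by hyphens, with a numeric suffix for repeated headings); CMSs and static-site generators each use their own rules, so match whatever `id` values your headings actually carry.

```python
import html
import re


def slugify(heading: str) -> str:
    """Lowercase the heading and join its words with hyphens (one common convention)."""
    return "-".join(re.findall(r"[a-z0-9]+", heading.lower()))


def jump_links(headings: list[str]) -> str:
    """Build an HTML list of jump links; each heading needs a matching id on the page."""
    seen: dict[str, int] = {}
    items = []
    for heading in headings:
        slug = slugify(heading)
        count = seen.get(slug, 0)
        seen[slug] = count + 1
        if count:  # repeated heading text: keep anchors unique
            slug = f"{slug}-{count}"
        items.append(f'  <li><a href="#{slug}">{html.escape(heading)}</a></li>')
    return "<ul>\n" + "\n".join(items) + "\n</ul>"


print(jump_links([
    "What is Relevance Engineering?",
    "How does query fan-out work?",
    "FAQ",
    "FAQ",
]))
```

Question-style headings double as both navigation for readers and clear signposts for the sub-intent each section answers.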

Step 4: Implement schema & internal linking

Organizing your content and displaying the relationship between pages can provide powerful signals to LLMs (in addition to search engines).

- Internal linking: SEOs regularly recommend a better internal linking strategy as a quick win. Not only does a strategic internal linking plan provide easier pathways for your content to get discovered, it also lets you use anchor text that accurately reflects the target page's core topic and the intent of users who click the link.

- Implement relevant schema markup: Structured data is one way to provide context to search engines and LLMs about the meaning of your content. Depending on your content, you can incorporate any of the following schema types:

- Article: Defines the piece as an article, with properties for author, publish date, etc.

- FAQPage: For pages primarily consisting of question-answer pairs.

- HowTo: For content that provides step-by-step instructions.

- BreadcrumbList: Shows the page's position in the site hierarchy.

- Organization: Provides information about your brand.

- Person: For author pages or expert mentions.

- If applicable, provide a specific schema for products, recipes, events, etc. This markup helps LLMs correctly categorize and understand the entities and relationships featured within your web pages.
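Schema markup is usually embedded in the page as JSON-LD. The sketch below builds `FAQPage` and `BreadcrumbList` objects using schema.org's property names (`mainEntity`, `acceptedAnswer`, `itemListElement`, `position`, `item`); the question, answer, and example.com URLs are placeholders. Validate real output with a tool such as Google's Rich Results Test, and keep in mind that markup helps machines interpret a page but doesn't guarantee a rich result or an LLM citation.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> dict:
    """FAQPage markup from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {"@type": "Question", "name": q, "acceptedAnswer": {"@type": "Answer", "text": a}}
            for q, a in pairs
        ],
    }


def breadcrumb_jsonld(trail: list[tuple[str, str]]) -> dict:
    """BreadcrumbList markup from (name, url) pairs, ordered from the site root down."""
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": i, "name": name, "item": url}
            for i, (name, url) in enumerate(trail, start=1)
        ],
    }


def script_tag(data: dict) -> str:
    """Wrap markup in the script element that goes in the page's HTML."""
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"


# Placeholder content for illustration.
faq = faq_jsonld([
    ("What is Relevance Engineering?",
     "A framework that builds on semantic SEO and aligns content with how LLMs retrieve information."),
])
crumbs = breadcrumb_jsonld([
    ("Home", "https://example.com/"),
    ("Guides", "https://example.com/guides/"),
    ("Relevance Engineering", "https://example.com/guides/relevance-engineering/"),
])
print(script_tag(faq))
print(script_tag(crumbs))
```

Generating markup from the same source as the visible content helps keep the two in sync, which matters because structured data should describe what is actually on the page.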

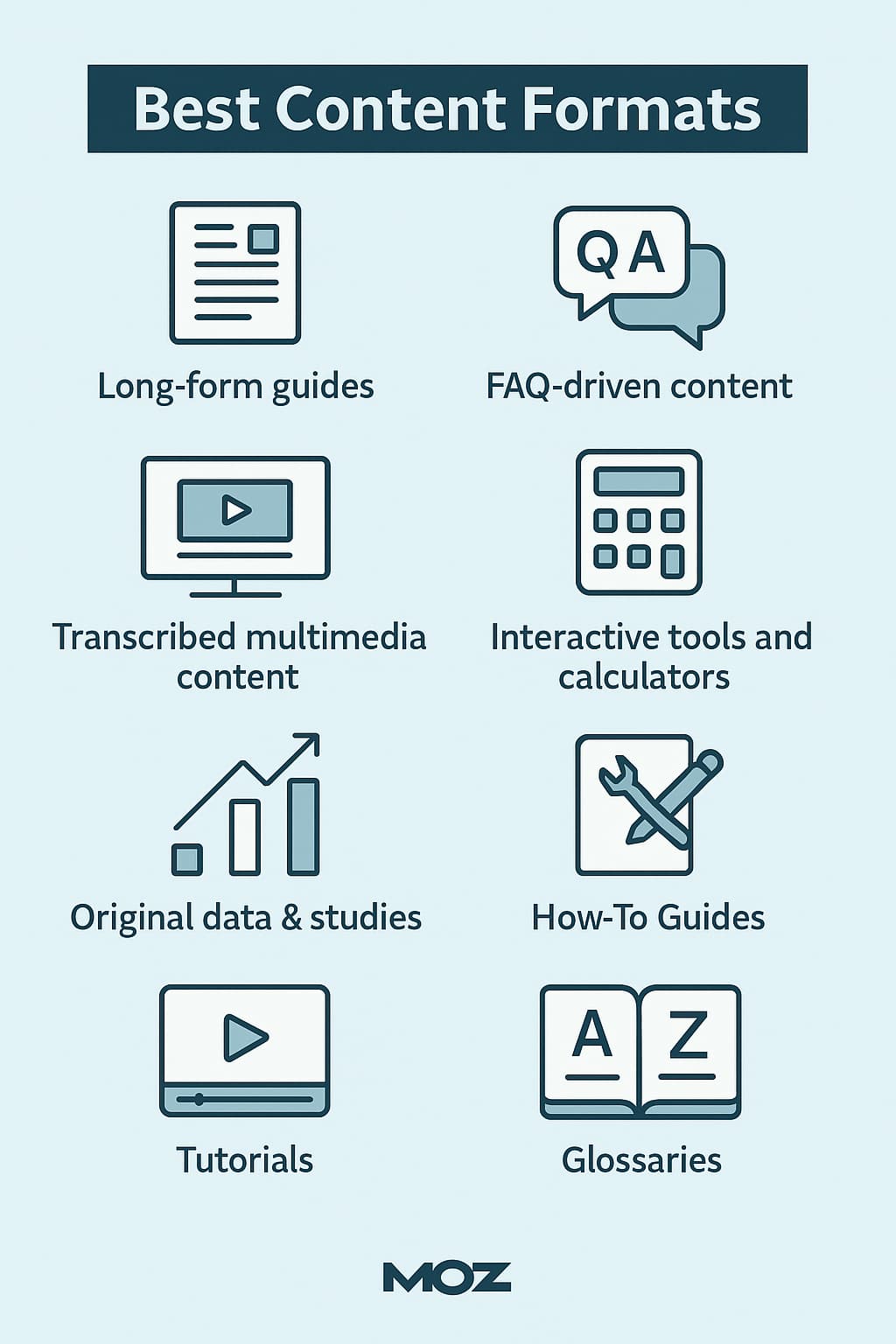

Content formats that win in Relevance Engineering

It's essential to note that the following recommendations are content formats that complement, not replace, critical pages such as product pages, home pages, and contact pages. Nothing would tank your revenue faster than replacing product pages with FAQs; think of these as content enhancements that help you convert inbound traffic and facilitate retrieval by LLMs.

- Long-form guides: Remember the hub-and-spoke strategy? Sometimes, your pillar pages from the clustering exercise will result in vibrant and informative long-form guides. Use a modular approach to structuring your content and ensure each section caters to specific sub-intents and user questions. Long-form guides with modular content become a rich source of interconnected passages for LLMs.

- FAQ-driven articles & sections: Content explicitly designed to answer a broad array of specific questions on a topic is highly effective. At Moz, some of our best-performing content focused on informational searches tends to get picked up by both LLMs and AI Overviews. You can incorporate FAQs as part of longer-form content or create dedicated pages for a site's educational resource section.

- Transcribed multimedia content: Repurposing content has never been cooler! Hyperbole aside, you can repurpose some of your strongest video assets into transcribed content that gets surfaced to LLMs. If your podcasts, webinars, and other video content (*ahem* Whiteboard Friday) contain unique perspectives and opportunities to highlight your brand, this is one way to expand the surface area of your content.

- Interactive tools and calculators: As a performance marketer, I've seen firsthand the revenue-driving impact of interactive widgets and calculators. Although the tool won't be read and surfaced by LLMs in a chat, the surrounding explanatory content can be cited and referenced in passages. For example, a page providing a mortgage payment calculator tool may have enough noteworthy and authentic content to answer critical questions that LLMs may include in their document corpus.

- Original data & studies: It wasn't long ago that people lamented that ChatGPT couldn't pull real-time data. With users desiring the most up-to-date information, feeding LLMs and search engines with original data on newer topics can go a long way to surfacing your brand to new audiences. Publishing original research, industry surveys, or intriguing data positions your content and brand as authoritative primary sources, making it highly attractive for citation among LLMs.

In addition to the above, you can create How-to Guides, tutorials, glossaries, and more. These content formats don’t just contribute to topical depth; they also help build your Brand Authority by positioning your company as the primary source of insight, not just repurposing someone else’s content. The common success factor among these content formats is their usability and reusability, especially if the information is kept fresh and current.

What you should stop doing

While the focus has been on what you should do to surface content to LLMs, here are the things to avoid or stop doing unless you want to sit in the invisible vortex of purgatory:

- Don't chase every specific keyword with its own separate page. This tactic has been outdated since the RankBrain and even Panda days, yet relatively thin pages focused on a single keyword (e.g., doorway pages) still slip into the index on both Google and Bing. Thin content will not survive the upcoming AI and LLM evolution.

- Don't ignore semantic relationships. Evolving keyword research into user research will be critical for survival in AI Mode and other LLMs. As the focus shifts from specific keywords to in-depth topic coverage, you'll need to think about the phrases and questions related to your pillar topics.

- Don't forget technical SEO and internal linking. Content structure is essential, but making sure LLMs can find that content in the first place is just as critical.

Turn AI disruption into a strategic advantage

Relevance Engineering isn't a massive divergence from SEO. Rather than saying, "It's just SEO," I think it's fair to call it an evolution of organic search. While terms like GEO (generative engine optimization) may become more common, Rand said it best: it's still SEO, as in Search Everywhere Optimization.

If you already own content creation for your brand as an SEO, you have the fundamentals down pat:

- User intent analysis and search behavior

- Content structure and markup

- Technical SEO & onsite optimization

- Navigating the evolution of search engines

Relevance Engineering extends these skills into a world where AI models, not users, consume your content to find the best answers possible. Fortunately, this isn't a complete reinvention of search and inbound marketing. Those most likely to succeed are the ones with experience building content hubs, optimizing pages for semantic search, and implementing structured data. Future-proofing your marketing by adapting to LLMs will help you get better at all of the following tactics:

- Auditing your content with a focus on relevancy and sub-intents.

- Clustering your keywords into topics and subtopics.

- Optimizing passages based on intent, not just blanket onsite page optimization.

- Creating more citation-worthy content for AI and humans.

Relevance Engineering allows you to lead the charge in a volatile search landscape. Focus on evergreen tactics with a revenue lens and an eye toward the future.