Poor Website Loading Times Irritate People, Matter to Search Engines, and Can Cost You Money

This YouMoz entry was submitted by one of our community members. The author’s views are entirely their own (excluding an unlikely case of hypnosis) and may not reflect the views of Moz.

Remember the late 90s? Those were the times! I remember staying up late at night just because the internet connection was slightly better then. I was happy simply to download larger files without getting disconnected. People were obsessed not so much with speed and loading times as with availability in general.

Do you remember the sound of your trustworthy modem connecting you to the World?

In this day and age, website availability is still a major problem, and most of us experience it on a daily basis. We don’t get cranky about loading times overnight, but we do get more demanding with each technological leap. Would the modern man wait 20 to 30 seconds for a page to load completely? Nope, and neither would Google.

Your site needs to be in a pretty dark place to get into trouble with search engines simply because of slow loading times. According to Google, fewer than 1% of all queries are affected by the site speed signal. You are far more likely to lose prospective customers, who can simply get the service elsewhere without having to wait for a page to load. We tend to take fast-loading web resources for granted.

Nearly one-third of the world’s population is online. We live in a world where ~2.27 billion people expect a web page to be completely available in a few seconds or less. Or do they? Technology keeps advancing, but not everyone can take advantage of it.

“The future is already here — it's just not very evenly distributed.”

This great quote by William Gibson describes the situation in some geographical regions pretty well. If you check your analytics account, I’m pretty sure you will see visitors from Africa experiencing slower page loading times than the rest.

Is it worth it to improve your website’s loading time?

Website owners are obsessed with their rankings, traffic quality, conversion rates, reputation management and the overall well-being of their online presence. It is quite frustrating how often website availability is overlooked.

A site might be up and running, yet virtually inaccessible to some visitors. Problems do occur, and more often than you might think. Unlike most online resources of the 90s, the majority of websites today rely on server-side scripting, which in turn relies on properly functioning databases. In short, a lot more can go wrong with a website nowadays than it used to.

I recently came across this question in the Q&A section:

“I was wondering what benefits there are investing the time and money into speeding up an eCommerce site. We are currently averaging 3.4 seconds of load time per page and I know from webmaster tools they hold the mark to be at closer to 1.5 seconds. Is it worth it to get to 1.5 seconds? Any tips for doing this?”

That’s a pretty tough one, and no one can give you a definitive answer. People would usually express the opinion that it can’t hurt to do everything possible to make your site run better, and they would be right. That is the ideal scenario. We, however, live in a world where optimal decisions are far more valuable than absolute ones. In this case, 3.4 seconds is the average loading time, which means it could be significantly higher at certain hours or in certain geographical locations.

If you have a local site and sell to the local market, there is no need to serve a page in 1.5 seconds to people on the other side of the world. You only need to perform that well in your local market.
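To see why a single average can mislead, here is a minimal sketch that groups load-time samples by visitor region. The function names, the region codes and the sample values are all made up for illustration; only the 1.5-second target comes from the question above. In this invented data set the overall mean lands near the 3.4 seconds from the question, while the local market already meets the target.

```python
from collections import defaultdict
from statistics import mean


def summarize_by_region(samples):
    """Group (region, seconds) pairs into {region: (count, mean, worst)}."""
    by_region = defaultdict(list)
    for region, seconds in samples:
        by_region[region].append(seconds)
    return {
        region: (len(times), mean(times), max(times))
        for region, times in by_region.items()
    }


def meets_target(summary, region, target_seconds=1.5):
    """True if the mean load time for `region` is at or below the target."""
    _, region_mean, _ = summary[region]
    return region_mean <= target_seconds


# Made-up numbers, for illustration only (hypothetical region codes).
samples = [("UK", 1.2), ("UK", 1.4), ("UK", 1.3),
           ("ZA", 6.0), ("ZA", 7.5)]
summary = summarize_by_region(samples)
overall = mean(seconds for _, seconds in samples)  # 3.48 s across all regions
```

Here `meets_target(summary, "UK")` is true even though the overall average is well above 1.5 seconds, which is exactly the local-market situation described above.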

Measuring the speed

Because of some mild loading-speed problems with a website I look after, I went looking for a proper way to measure:

- Website uptime

- Web page response time

- Web page loading time

- Availability from various remote locations, worldwide
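As a rough idea of what a monitoring service does on each check, here is a minimal single-location sketch using only the Python standard library. The helpers `probe` and `uptime_percent` are hypothetical names, not any vendor’s API. It times only the HTML document, not the images and scripts a browser would also load, and a real service repeats it on a schedule from many locations.

```python
import time
import urllib.error
import urllib.request


def probe(url, timeout=10.0):
    """Fetch `url` once and time it.

    Returns a dict with:
      up:            True if a response with a non-error status arrived
      status:        HTTP status code, or None if no response arrived
      response_time: seconds until the status line and headers arrived
      load_time:     seconds until the whole HTML body was read
    """
    start = time.perf_counter()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            response_time = time.perf_counter() - start  # headers received
            resp.read()                                    # download the body
            load_time = time.perf_counter() - start
            return {"up": resp.status < 400, "status": resp.status,
                    "response_time": response_time, "load_time": load_time}
    except urllib.error.HTTPError as err:
        # urlopen raises HTTPError for 4xx/5xx: the server answered, but badly.
        elapsed = time.perf_counter() - start
        return {"up": False, "status": err.code,
                "response_time": elapsed, "load_time": elapsed}
    except (urllib.error.URLError, OSError):
        # DNS failure, refused connection, timeout: no response at all.
        return {"up": False, "status": None,
                "response_time": None, "load_time": None}


def uptime_percent(results):
    """Share of probes that found the site up, as a percentage."""
    if not results:
        return 0.0
    return 100.0 * sum(r["up"] for r in results) / len(results)
```

Running `probe` every few minutes and feeding the results to `uptime_percent` covers the first three items on the list; the fourth needs the same probe running from machines in different countries.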

After trying out a couple of services, I decided to go with a combination of two. I figured I needed one paid service to gain access to more features, detailed reports, SMS alerts and a large set of remote locations to test from. I went with websitepulse.com. There are some great alternatives out there; I wouldn’t call them mere substitutes, because those services are pretty good too. I chose to stick with this company because their service has everything I currently require. Their homepage looks a little outdated, but that makes me think they put their effort and resources into the service itself.

The other way I track the average loading times of my pages is the Google Analytics Site Speed report. Last year I had to add an extra line to the GA tracking code to get the feature, but it is now part of the standard code. I still use the old _trackPageLoadTime call in my Google Analytics tracking, so this might be a good time to update the code. With it, 10% of the traffic is evaluated and I get my data from that 10%, which includes only visits from browsers that support the HTML5 Navigation Timing interface or have the Google Toolbar installed.
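For context, the Navigation Timing interface exposes a set of millisecond timestamps (in browsers, `window.performance.timing`) from which load phases can be derived. The sketch below splits those marks into phases. Treating "page load time" as `loadEventStart - navigationStart` is an assumption for illustration; the post does not say exactly how Google Analytics computes it, and `timing_breakdown` is a hypothetical helper.

```python
def timing_breakdown(t):
    """Split Navigation Timing (Level 1) marks into phases, in milliseconds.

    `t` is a dict of the timestamps a browser exposes as
    window.performance.timing (navigationStart, responseStart, ...).
    """
    return {
        "dns": t["domainLookupEnd"] - t["domainLookupStart"],
        "connect": t["connectEnd"] - t["connectStart"],
        # Time the server took before sending the first byte.
        "server_response": t["responseStart"] - t["requestStart"],
        "download": t["responseEnd"] - t["responseStart"],
        # Assumed definition of page load time: navigation start until
        # the window load event begins.
        "page_load": t["loadEventStart"] - t["navigationStart"],
    }
```

The "server_response" phase is the one that heavy server-side scripts and slow database queries inflate.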

When you update to the new code, the sample rate drops to 1% by default. You can raise it back to 10% with _setSiteSpeedSampleRate(), and that’s about it, at least for now. Here you can learn more about the Site Speed feature.
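The sample rate matters a lot for small sites. In the asynchronous ga.js snippet the rate is, as far as I know, set with `_gaq.push(['_setSiteSpeedSampleRate', 10]);` before the pageview is tracked. The sketch below only does the arithmetic: how many timed pageviews you can expect at 1% versus 10%, plus a simulation that assumes each eligible pageview is sampled independently, which is a simplification rather than GA’s documented mechanism. Both function names are hypothetical.

```python
import random


def expected_samples(eligible_pageviews, rate_percent):
    """Expected number of timed pageviews at a given sample rate (percent)."""
    return eligible_pageviews * rate_percent / 100


def simulate_sampling(eligible_pageviews, rate_percent, seed=0):
    """Count pageviews picked by an independent per-hit coin flip.

    Simplifying assumption: every eligible pageview (a browser with
    Navigation Timing or the Google Toolbar) has the same chance.
    """
    rng = random.Random(seed)
    threshold = rate_percent / 100
    return sum(rng.random() < threshold for _ in range(eligible_pageviews))
```

For example, 50,000 eligible pageviews yield about 500 timed pageviews at 1% and about 5,000 at 10%, before any further split by page or country.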

I found this feature really valuable when explaining loading-time issues to the company’s web developers and when presenting the data to management. The feature seems to satisfy both parties: it gives the development team all the data it needs, page by page.

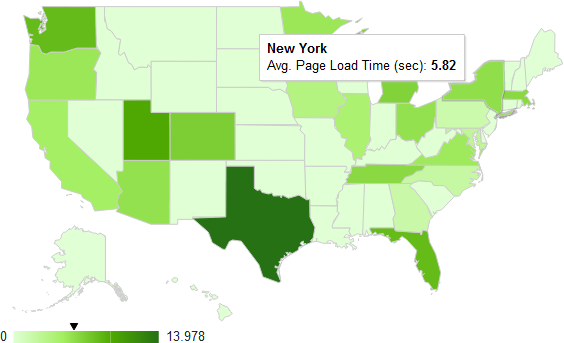

The visualizations are also pretty great and can make a good addition to your next presentation.

Now, here is why I decided to go with a paid service. While the information from Google Analytics is useful and well organized, it isn’t all that accurate, mostly because of the limited number of samples. For sites with very high traffic, the figures are probably closer to reality. I don’t want to trash-talk a free service I love; it is great at what it says it does.
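One way to put "limited number of samples" into numbers is the standard error of the mean, the sample standard deviation divided by the square root of the sample count. The sketch below is a generic statistics helper, not something either tool reports; it covers random sampling error only, not bias from which browsers end up in the sample.

```python
import math
from statistics import stdev


def standard_error(samples):
    """Standard error of the mean: sample standard deviation / sqrt(n)."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    return stdev(samples) / math.sqrt(len(samples))


def shrink_factor(n_small, n_large):
    """How much the standard error shrinks when going from n_small to
    n_large samples, assuming the spread of load times stays the same."""
    return math.sqrt(n_large / n_small)
```

With ten times as many samples, `shrink_factor(827, 8270)` is about 3.16, so the average becomes roughly three times more precise, not ten.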

I ran the following test: I sampled my site in January with both Google Analytics and WebSitePulse, choosing the United Kingdom as the location. Here are the results from WebSitePulse.

There is a noticeable change from 14 January, when we moved the site from our own servers to a data center. We had been having issues with average loading times for quite some time, and I believe this was one of our best decisions of the last couple of years. You can see the average loading time drop from ~4.5 seconds to ~0.95 seconds overnight. The site had a lot of heavy server-side scripts that threw many exceptions and communicated heavily with the database.
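A before/after comparison like this is easy to reproduce from daily averages. In this minimal sketch, `before_after` expects a hypothetical mapping of dates to daily average load times and splits it at the migration date; `improvement` turns the two averages into an absolute and a relative gain. The only real figures used are the ~4.5 s and ~0.95 s from the chart.

```python
from statistics import mean


def before_after(daily, cutover):
    """Mean daily load time before `cutover` and from `cutover` onward.

    `daily` maps datetime.date -> average load time in seconds.
    """
    before = [v for d, v in daily.items() if d < cutover]
    after = [v for d, v in daily.items() if d >= cutover]
    return mean(before), mean(after)


def improvement(before, after):
    """Absolute (seconds) and relative (percent) reduction in load time."""
    saved = before - after
    return saved, 100.0 * saved / before


saved, percent = improvement(4.5, 0.95)  # about 3.55 s, about 78.9 %
```

By the monitoring data, the move cut average load time by roughly four fifths.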

This is what Google Analytics reported for January. I expected to see something similar to the WebSitePulse chart, but Google Analytics collected only 827 samples, while the WebSitePulse chart is based on roughly 10 times as many.

Here you can see an improvement, but nothing too dramatic: according to this report, load time improved by 1.79 seconds. There was no dramatic effect on the bounce rate, though you can see it going down a bit. Then again, that might be due to something else.

So with 10 times the data, I got more accurate results. I tested the site every five minutes to make sure I had a large enough data set to work with, whereas with Google Analytics all you get is a 10% sample. GA is quite useful and pretty good for a free tool, but I won’t close my paid account just yet. In fact, go out and try any of the remote website monitoring services. Most of them offer unlimited 30-day trials, which will give you valuable intel on the current state of your website.
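The "10 times the data" figure follows from the check interval. Assuming a single test location (the UK) checked every five minutes for all 31 days of January, a quick calculation gives the number of monitoring samples to compare with GA’s 827. `checks_per_period` is a hypothetical helper.

```python
def checks_per_period(interval_minutes, days, locations=1):
    """Number of synthetic checks run at a fixed interval over `days` days.

    Whole checks per day only: a leftover fraction of an interval is dropped.
    """
    per_day = 24 * 60 // interval_minutes
    return per_day * days * locations


january_checks = checks_per_period(5, 31)  # 288 per day, 8928 in January
ratio = january_checks / 827               # about 10.8 times GA's sample
```

Adding more test locations multiplies the count further, which is one more reason synthetic monitoring builds a larger data set than a 1% or 10% browser sample on a modest site.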

Google Analytics seems to have a great feature in the works, and I’m really optimistic about it! I’m curious to know how the Site Speed feature is working out for you.

Tom Critchlow wrote a great piece covering the feature; it is a good place to read more about Site Speed.
