Reddit, StumbleUpon, Del.icio.us and Hacker News Algorithms Exposed!

The author's views are entirely their own (excluding the unlikely event of hypnosis) and may not always reflect the views of Moz.

It is ironic that algorithms, the quintessential example of all that is not human, are so fundamental to social media. Last week I wrote a post about how Google gathers user data. This week I continue by showing how popular social media websites use algorithms to put user data to work.

Although humans power social media, it is algorithms that provide the framework that makes user input useful. As the countless social sites online show, finding the right mix of participation and rules can be extremely difficult. Below are some of the algorithms that, when combined with the right people, have proven successful.

Formula:

(p - 1) / (t + 2)^1.5

Description:

Votes divided by age factor

p = votes (points) from users.

t = time since submission in hours.

p is subtracted by 1 to negate submitters vote.

age factor is (time since submission in hours plus two) to the power of 1.5.

Source: Paul Graham, creator of Hacker News

Reddit:

Formula:

Description:

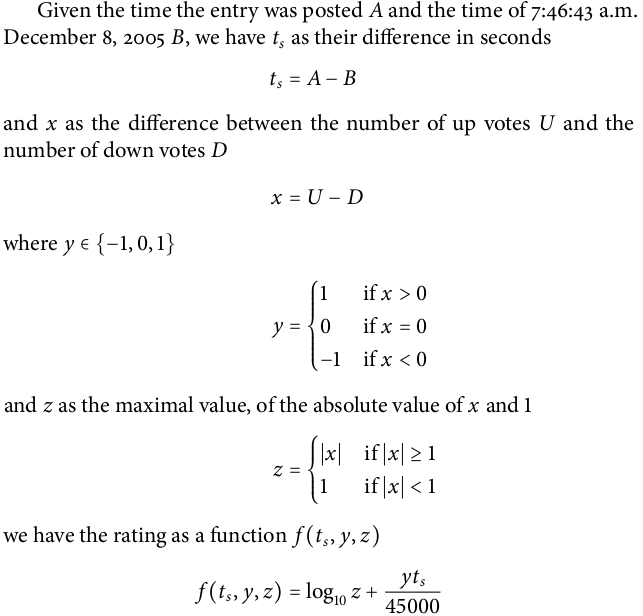

First of all, the time 7:46:43 am on December 8th 2005 is a constant used to determine the relative age of a submission. (It is likely the time the site launched but I have not been able to confirm this) The time the story was submitted minus the constant date is ts. ts works as the force that pulls the stories down the frontpage.

y represents the relationship of up votes to down votes.

45000 is the amount of seconds in 12.5 hours. This constant is used in combination with yts to "water down" votes as they are made farther and farther from the time the article was submitted.

log10 is also used to make early votes carry more weight than late votes. In this case, the first 10 votes have exactly as much weight as votes 11 through 101.

Source: code.reddit.com, Redflavor.com and Hacker News user Aneesh

StumbleUpon:

Formula:

(Initial stumbler audience / # domain) + ((% stumbler audience / # domain) + organic bonus – nonfriend) – (% stumbler audience + organic bonus) + N

Description:

The initial stumbler "power" (Audience of the initial stumbler divided by the amount of times that stumbler has stumbled the given domain) is added to the sum of all the subsequent stumbler's powers.

Subsequent stumbler power is ((Percentage of audience stumbler makes up divided by the number of times given stumbler has stumbled domain) + a predetermined power boost for using the toolbar - a predetermined power drain if stumblers are connected) + (% of the stumbler audience + a predetermined boost for using the toolbar)

N is a "safety variable" so that the assumed algorithm is flexible. It represents a random number.

Source: 2007 Tim Nash at The Venture Skills Blog Please see his blog post for more in depth information

Del.icio.us:

Formula:

Points = (Amount of times story has been bookmarked in the last 3600 seconds)

Description:

Rank on Del.icio.us Popular is determined by comparing points. Points represent the amount of times a story has been bookmarked in the last hour. The higher the rate, the higher the points. Every bookmark counts as one point.

3600 is the seconds in one hour.

Source: Based on my extended observations of Del.icio.us Popular

Digg is different. The company is a lot less transparent than the above mentioned companies. It is fearful of being gamed and in response has created a secritive algorithm that appears to be far more complex than its competition.

At a minimum I expect that Digg's algorithm takes into account the following factors:

Although humans power social media, it is algorithms that provide the frameworks that make user input useful. As proven by the countless social sites online, finding the correct mix of participation and rules can be extremely difficult. Below are some of the algorithms that when combined with the right people have proven successful.

Popular Social Media Algorithms

Y Combinator's Hacker News:

Formula:

(p - 1) / (t + 2)^1.5

Description:

Votes divided by age factor

p = votes (points) from users.

t = time since submission in hours.

1 is subtracted from p to negate the submitter's own vote.

The age factor is (time since submission in hours plus two) to the power of 1.5.

Source: Paul Graham, creator of Hacker News
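
The formula above is small enough to try out directly. Here is a minimal Python sketch of it; the function name and the `gravity` parameter name are my own labels for illustration, not names taken from the Hacker News source.

```python
def hacker_news_score(points: int, age_hours: float, gravity: float = 1.5) -> float:
    """Compute (p - 1) / (t + 2) ** 1.5 as described above.

    points    -- p, votes from users (includes the submitter's own vote)
    age_hours -- t, time since submission in hours
    gravity   -- the exponent of the age factor (1.5 in the formula above);
                 the parameter name is a hypothetical label
    """
    return (points - 1) / (age_hours + 2) ** gravity


# A 2-hour-old story with 9 points: (9 - 1) / (2 + 2) ** 1.5 = 8 / 8
fresh = hacker_news_score(9, 2)

# A 23-hour-old story with 101 points: (101 - 1) / (23 + 2) ** 1.5 = 100 / 125
old = hacker_news_score(101, 23)
```

In this sketch the day-old story with 101 points scores lower than the two-hour-old story with 9 points, which shows how quickly the age factor outweighs votes.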

Reddit:

Formula:

Description:

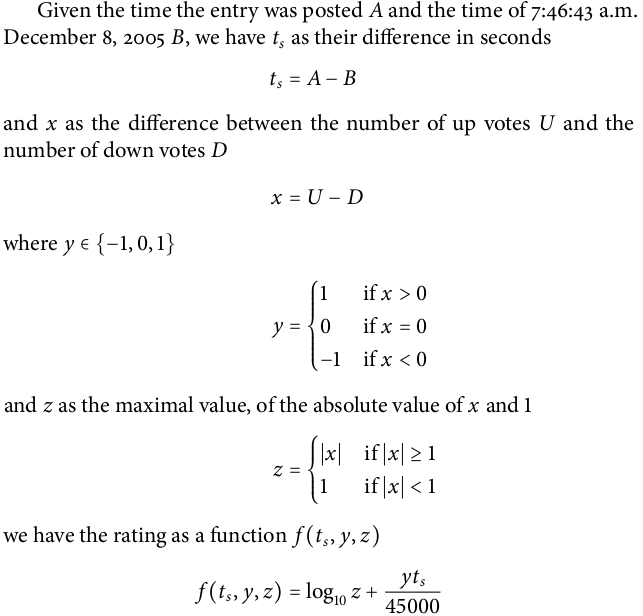

First of all, the time 7:46:43 am on December 8th, 2005 is a constant used to determine the relative age of a submission. (It is likely the time the site launched, but I have not been able to confirm this.) The time the story was submitted minus the constant date is ts. ts works as the force that pulls older stories down the front page.

y represents the relationship of up votes to down votes.

45000 is the number of seconds in 12.5 hours. This constant is used in combination with y and ts to "water down" votes as they are made farther and farther from the time the article was submitted.

log10 is also used to make early votes carry more weight than late votes. In this case, the first 10 votes have exactly as much weight as votes 11 through 100.

Source: code.reddit.com, Redflavor.com and Hacker News user Aneesh
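
To make the pieces above concrete, here is a rough Python sketch. It assumes the formula has the form log10(z) + (y × ts) / 45000, which is how I read the description; z (the size of the vote difference, at least 1) is a name I am introducing for illustration, and treating the constant date as UTC is also an assumption. It is not Reddit's actual code.

```python
from datetime import datetime, timedelta, timezone
from math import log10

# Assumption: the constant date from the description, interpreted as UTC.
REDDIT_EPOCH = datetime(2005, 12, 8, 7, 46, 43, tzinfo=timezone.utc)


def reddit_hot(ups: int, downs: int, submitted: datetime) -> float:
    """Sketch of the ranking described above (assumed form, see lead-in)."""
    # ts: seconds between the constant date and the submission time
    ts = (submitted - REDDIT_EPOCH).total_seconds()
    x = ups - downs
    # y: +1 if up votes lead, -1 if down votes lead, 0 if tied
    y = 1 if x > 0 else -1 if x < 0 else 0
    # z: hypothetical name for the vote difference, floored at 1 for log10
    z = max(abs(x), 1)
    return log10(z) + y * ts / 45000


# Two example submission times, 45000 seconds (12.5 hours) apart
t0 = datetime(2008, 1, 1, tzinfo=timezone.utc)
t1 = t0 + timedelta(seconds=45000)

older_with_10_votes = reddit_hot(10, 0, t0)
newer_with_1_vote = reddit_hot(1, 0, t1)
```

Under these assumptions, a story needs ten times the net votes to match a story submitted 12.5 hours later, so the two example scores come out (approximately) equal.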

StumbleUpon:

Formula:

(Initial stumbler audience / # domain) + ((% stumbler audience / # domain) + organic bonus – nonfriend) – (% stumbler audience + organic bonus) + N

Description:

The initial stumbler's "power" (the audience of the initial stumbler divided by the number of times that stumbler has stumbled the given domain) is added to the sum of all the subsequent stumblers' powers.

Subsequent stumbler power is ((the percentage of the audience the stumbler makes up divided by the number of times the given stumbler has stumbled the domain) + a predetermined power boost for using the toolbar - a predetermined power drain if the stumblers are connected) + (% of the stumbler audience + a predetermined boost for using the toolbar)

N is a "safety variable" so that the assumed algorithm is flexible. It represents a random number.

Source: Tim Nash at The Venture Skills Blog (2007). Please see his blog post for more in-depth information.

Del.icio.us:

Formula:

Points = (Number of times story has been bookmarked in the last 3600 seconds)

Description:

Rank on Del.icio.us Popular is determined by comparing points. Points represent the number of times a story has been bookmarked in the last hour. The higher the rate, the higher the points. Every bookmark counts as one point.

3600 is the number of seconds in one hour.

Source: Based on my extended observations of Del.icio.us Popular
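
Since this one is just a count over a sliding window, it can be sketched in a few lines of Python. The function names, the use of Unix timestamps, and the choice to count a bookmark made exactly 3600 seconds ago are all my assumptions; this reflects my observations above, not anything published by Del.icio.us.

```python
from typing import Dict, Iterable, List

WINDOW_SECONDS = 3600  # one hour, as in the formula above


def delicious_points(bookmark_times: Iterable[float], now: float) -> int:
    """Count bookmarks made in the last 3600 seconds (one point each).

    bookmark_times -- Unix timestamps of each bookmark (assumed input format)
    now            -- current Unix timestamp
    """
    return sum(1 for t in bookmark_times if 0 <= now - t <= WINDOW_SECONDS)


def rank_popular(stories: Dict[str, List[float]], now: float) -> List[str]:
    """Order story URLs by points, highest first (hypothetical helper)."""
    return sorted(stories, key=lambda url: delicious_points(stories[url], now), reverse=True)


# Story "a" has two recent bookmarks; story "b" has three recent and one stale.
example = {"a": [3900, 3950], "b": [100, 3990, 3995, 3999]}
ranking = rank_popular(example, now=4000)
```

Note that the stale bookmark on story "b" earns nothing here: only the rate over the last hour matters, not the lifetime total.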

Avoiding the 10,000 lb Gorilla in the Room (Digg.com)

Digg is different. The company is a lot less transparent than the above-mentioned companies. It is fearful of being gamed and in response has created a secretive algorithm that appears to be far more complex than those of its competitors.

At a minimum I expect that Digg's algorithm takes into account the following factors:

- Submission Time

- Submission Category

- Submitter's Digg authority

- Submitter's site-wide activity

- Submitter's friends and fans

- Subsequent digger's authority

- Subsequent digger's friends and fans

- Subsequent digger's geolocation

- Subsequent digger's HTTP referer

If you have any other advice or thoughts that you think are worth sharing, feel free to post them in the comments. This post is very much a work in progress. As always, feel free to e-mail me or send me a private message if you have any suggestions on how I can make my posts more useful. All of my contact information is available on my profile: Danny. Thanks!
