Moz Developer Blog

Moz engineers writing about the work we do and the tech we care about.

Introducing RogerOS Part 2

Posted by Ankan Mukherjee on December 7, 2015

Preamble

This is the second in a two-part blog post outlining our work at Moz on RogerOS, our next generation infrastructure platform. The first part gives background on and provides an overview of the system. This post goes deeper into the implementation and provides the juicy technical details. This blog post comes to you courtesy of Ankan Mukherjee and Arunabha Ghosh. We hope you enjoy reading about it as much as we’ve enjoyed working on it.

Introduction

In the first part we talked at length about why we set out to build RogerOS, the background that set us up towards this end, and the lofty design goals we had in mind. We also provided a ClusterOS architecture diagram detailing the various subsystems that worked together to form RogerOS. Let's now get into more details on these fronts.

Design Goals

In the first part we summarized our overall design goals. Here are more details on some of our salient technical design goals -

Developer oriented abstractions

Traditionally, interfaces and abstractions offered by infrastructure systems have been heavily focused on the operational side. Virtualization systems, for example, provide a lot of operational flexibility. However, they offer the same fundamental abstraction as a physical machine. On the other hand, most developers intuitively think in terms of different abstractions like processes, jobs, services, etc. To a large degree, they care more about the fact that their stuff runs and is reachable than the particulars of which machine it runs on. As software systems become larger, more distributed and decoupled, the implications of this abstraction gap become more and more apparent. The growing capabilities and complexity of orchestration systems like Puppet and Ansible are a visible manifestation of the increasing gap and of attempts to bridge it. One of our core design principles was to provide developers with the abstractions they needed and used daily. This undoubtedly increased the complexity, as it was now up to the system to make the necessary translations into the underlying machine abstractions, but in hindsight, this was the right choice.

Completely automated lifecycle management

As an immediate consequence of the previous goal, we wanted to provide as much automation as possible, providing not just orchestration but complete lifecycle management. This was also necessary for some of the other goals, like providing higher resiliency.

Highly resilient, no single point of failure

Given that a lot of developers would be depending on the system and failures are a regular feature at scale, we explicitly wanted to have ample redundancy and no single points of failure.

High degree of automation and self healing

As mentioned above, failures are an expected feature rather than an anomaly at scale. The expectation was that we would be able to handle most routine failures, like machine and process failures, with no manual intervention.

Ability to run multiple workloads

As mentioned before, any solution we picked had to cater to not only new development but also be able to handle existing applications. Specifically, we wanted to be able to handle application workloads using containers, legacy applications using virtual machines, recurring ‘cron’ jobs, and large scale batch workloads (Spark et al).

Efficiency

While not the primary concern, efficiency is still a vital criterion for a system like RogerOS. Indeed, external evidence from systems like Borg indicates that significant cost reductions can be gained by using such systems. Google estimates the savings to be on the order of not needing an entire data center due to efficiency gains!

Ease of operation

Last but not least, we wanted the system to be easy to operate. Some of the design criteria for this were -

- Integrated monitoring and resource tracking

- Procurement friendly

- Ability to use existing resources (no need to buy new hardware to get the system going)

- Easy to add resources with minimal or no disruption

Design

In the first part we showed an architecture diagram that provides an overview of the various systems that form the ClusterOS. As stated already, the core of RogerOS is Apache Mesos. Mesos corresponds to the ‘ClusterOS Kernel’ in the architecture diagram mentioned above.

The Core Components

We now describe the core components that make up RogerOS.

Zookeeper

Apache Zookeeper is a centralized service for maintaining configuration information, naming, providing distributed synchronization, and providing group services. Mesos uses Zookeeper for master leader election, coordinating activity across the cluster and for letting slaves join the cluster. We also use Zookeeper for some of our internal apps running on RogerOS for distributed locking and process coordination.

Mesos

We run Mesos masters in high availability mode with three or five nodes that coordinate to select one active leader using Zookeeper. We run Mesos slaves with the docker containerizer enabled. Mesos slaves are the worker nodes; they continuously report to the Mesos master the resources they have available for running tasks. Mesos frameworks (see below) talk to the master to get this information and decide which slaves to run tasks on.

Mesos Frameworks

Mesos Frameworks provide a higher level of abstraction on top of Mesos and offer features that make it easy to manage what’s running on Mesos. The ability to add and create your own frameworks also makes this system highly extensible. We started with the Marathon framework, which runs quite a few of our tasks at present. Marathon provides the ability to start and manage long-running applications on Mesos and corresponds to the Job/Task Schedulers in the architecture diagram mentioned above. If you consider Mesos as the kernel of this ClusterOS, Marathon is the upstart/init daemon. As we moved forward, there was a need to schedule periodic tasks and run one-off tasks, which led us to add Chronos. Chronos also corresponds to the Job/Task Schedulers and is essentially distributed cron. We have a few backup jobs running on Chronos, and new jobs are getting added. We are also considering getting other frameworks, like Apache Aurora (which supports both long-running and distributed cron jobs) and Apache Spark (for large scale data processing), up and running in the future.

Load Balancer & DNS Server

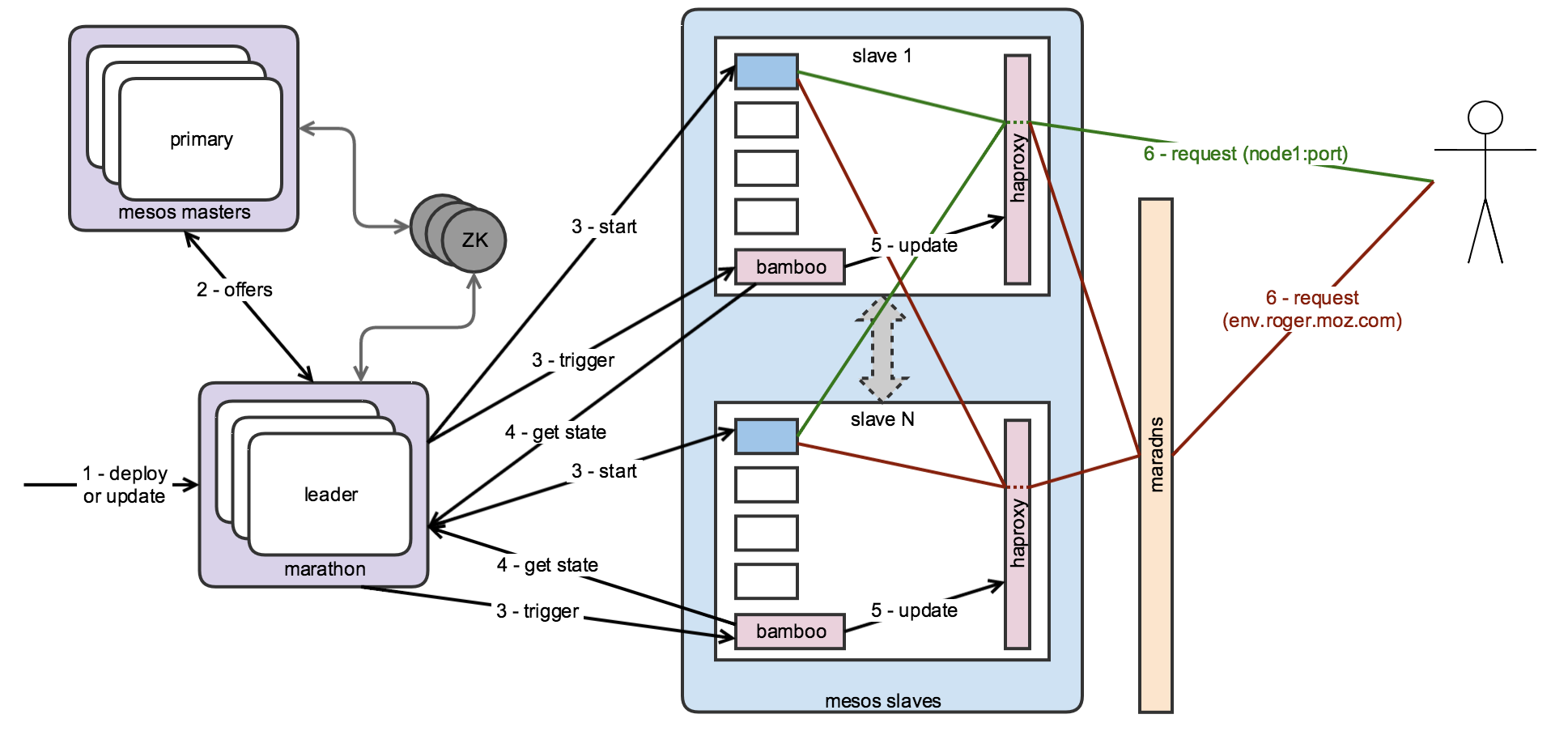

We use roger-bamboo, which along with haproxy acts as the service discovery and distributed load balancing system for RogerOS. It is based on bamboo and adds several features including flexible TCP and HTTP port specification, HTTP web path specification, etc. Each node on RogerOS has roger-bamboo and haproxy running. Any change on RogerOS triggers an update to bamboo, which in turn updates and reloads the haproxy configuration. This essentially provides configuration-free, automatic load balancing across all instances of an application running on the cluster. We also use maradns as a DNS server to provide common and simple names on RogerOS.

System Layout

The following diagram shows an overall layout of the core components in action.
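To make the flow between these components a little more concrete, here is a minimal Python sketch of two steps described above: a scheduler choosing worker nodes from the resources they report, and a load balancer configuration being regenerated from the resulting instances. It is an illustration only and not how Mesos, Marathon or roger-bamboo are implemented. The `Offer` class, the first-fit strategy, the host names and the port numbers are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    """Free resources reported by one worker node (hypothetical shape)."""
    host: str
    cpus: float
    mem_mb: int


def place_instances(offers, cpus, mem_mb, instances):
    """First-fit placement: return the host chosen for each instance."""
    free = {o.host: [o.cpus, o.mem_mb] for o in offers}
    placed = []
    for _ in range(instances):
        for host, (free_cpus, free_mem) in free.items():
            if free_cpus >= cpus and free_mem >= mem_mb:
                # Reserve the resources on this node and move on.
                free[host][0] -= cpus
                free[host][1] -= mem_mb
                placed.append(host)
                break
        else:
            raise RuntimeError("not enough free resources for all instances")
    return placed


def render_haproxy_backend(app_id, endpoints):
    """Render an haproxy backend stanza listing every running instance."""
    lines = [f"backend {app_id}", "    balance roundrobin"]
    for i, (host, port) in enumerate(endpoints):
        lines.append(f"    server {app_id}-{i} {host}:{port} check")
    return "\n".join(lines)


# Example values are made up for illustration.
offers = [Offer("node1", 4.0, 8192), Offer("node2", 2.0, 4096)]
hosts = place_instances(offers, cpus=1.5, mem_mb=2048, instances=3)
endpoints = [(host, 31000 + i) for i, host in enumerate(hosts)]
print(render_haproxy_backend("web", endpoints))
```

Whenever the set of endpoints changes, the stanza is rendered again and haproxy is reloaded, which is what makes the load balancing feel configuration-free from an application's point of view.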

The Monitoring Stack

We realized integrated monitoring is an essential component for this system to succeed. This corresponds to the Monitoring and Log Aggregation components in the architecture diagram mentioned above. There is a need to monitor both the variety of components that constitute the system as well as the tasks and services that run on the system.

Goals

Our monitoring system is based on the following principles -

- Monitoring data should be easy to collect, store and query.

- Creating dashboards and graphs from this data should take minimal effort.

- Alerts should be easy to create and take action on.

- The underlying query semantics should be the same so that it's easy to manage and maintain.

- It should be possible to query and alert across metrics and components when required.

Components

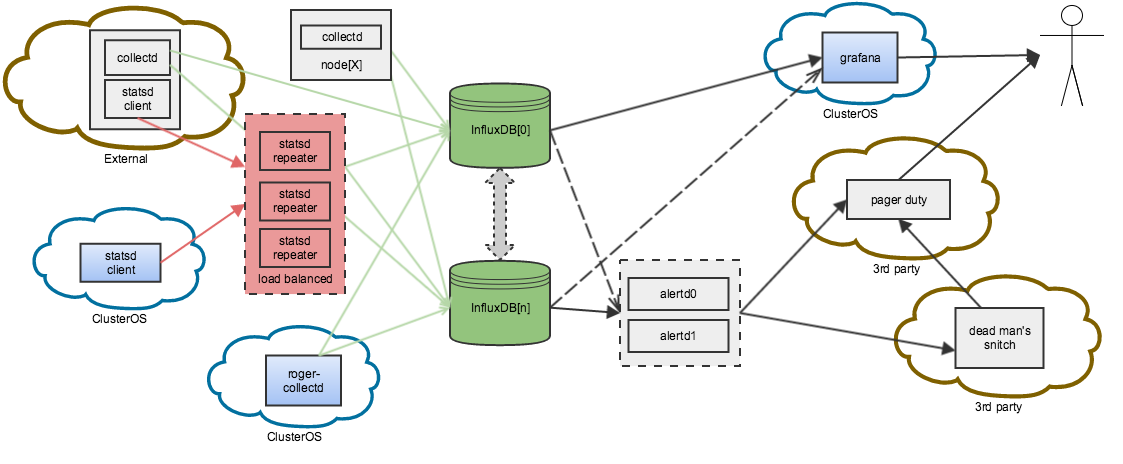

To achieve this end we use the following components -

- We collect system metrics using collectd. We also use collectd plugins to collect metrics from the core components above (mesos, marathon, zookeeper, haproxy, bamboo, etc.). These run on each of the nodes on RogerOS.

- In some cases we also run dockerized collectd instances on RogerOS itself to collect metrics from applications running on RogerOS.

- For custom application metrics we provide a statsd interface to send metrics data to.

- We use InfluxDB which is an open source distributed time series database to store all our monitoring metrics.

- We use Grafana, which has built-in support for influxdb, to create dashboards.

- For alerting we wrote our own daemon that uses influxdb queries, integrates with PagerDuty, and provides the ability to create alerts.

Internal monitoring

We monitor all machines that are part of RogerOS for cpu usage, memory usage, network usage, disk space, etc., and have appropriate alerts set up. We also monitor our own internal applications, services, daemons, and have alerts set up as required.

Application monitoring

- We automatically collect metrics like cpu usage, memory usage, network usage etc. for all docker containers running on the system.

- We also have dashboards that chart out these metrics automatically without the need of any configuration or manual effort.

- In addition, apps on RogerOS may send custom metrics using any statsd client. Our statsd interface is via telegraf (with the statsd service plugin for influxdb), which adds the ability to tag metrics and makes them easier to store and query with influxdb.

- Although we set up the monitoring stack for RogerOS there is no requirement that the system to be monitored be running on or be a part of RogerOS. This essentially means that we can support common monitoring needs of applications across the company.

- Needless to say custom dashboards can be created using any of the metrics collected above.

- Application logs from docker can be sent to a logstash setup that is already available across the company.
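As an illustration of the custom metrics path mentioned above, here is a minimal Python sketch of a tagged statsd metric being built and sent over UDP. It assumes the tag syntax accepted by telegraf's statsd input, where tags are appended to the metric name as comma-separated `key=value` pairs. The metric name, the tag names, and the host and port defaults are made up for the example.

```python
import socket


def format_metric(name, value, metric_type, tags=None):
    """Build one statsd line, with tags appended to the metric name."""
    tag_part = "".join(f",{k}={v}" for k, v in sorted((tags or {}).items()))
    return f"{name}{tag_part}:{value}|{metric_type}"


def send_metric(line, host="127.0.0.1", port=8125):
    """Fire-and-forget a metric over UDP, the way statsd clients do."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(line.encode("ascii"), (host, port))


# Hypothetical counter for an app called "checkout" running in staging.
line = format_metric("requests", 1, "c", {"app": "checkout", "env": "staging"})
print(line)  # requests,app=checkout,env=staging:1|c
```

Calling `send_metric(line)` would then hand the metric to whatever statsd listener is configured. Because the tags end up as separate dimensions in the time series database, one metric name can be charted or alerted on per app or per environment.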

Setting up Alerts

- We have our custom alerter that integrates with pagerduty and allows alerts to be set up on any metrics.

- Alerting and charting use the same underlying query semantics. So, if you can chart something you can alert on it.

- Common alerts like less than, greater than, etc., are available on any influxdb aggregation queries.

- Additional alert types, like checking for presence/absence, consistency, etc., are also available.
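The alert types listed above can be sketched roughly as follows. This is not our alerter's actual code. The rule format and the type names are hypothetical, and `points` stands in for the values returned by whatever query also drives the corresponding chart.

```python
import operator

# Hypothetical names for the comparison-style alert types.
COMPARISONS = {
    "less_than": operator.lt,
    "greater_than": operator.gt,
}


def evaluate(rule, points):
    """Return True when the rule fires for the queried data points."""
    kind = rule["type"]
    if kind == "absence":
        # Fires when the query returned no data at all.
        return len(points) == 0
    if kind == "presence":
        return len(points) > 0
    if kind in COMPARISONS:
        if not points:
            return False  # nothing to compare; an absence rule covers this
        # Plain average, standing in for an aggregation done by the query.
        aggregate = sum(points) / len(points)
        return COMPARISONS[kind](aggregate, rule["threshold"])
    raise ValueError(f"unknown alert type: {kind}")


cpu_rule = {"type": "greater_than", "threshold": 90}
print(evaluate(cpu_rule, [95, 97]))         # True
print(evaluate({"type": "absence"}, [12]))  # False
```

Keeping the rule separate from the query is what lets the same query back both a dashboard panel and an alert.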

Monitoring the Monitor

“Quis custodiet ipsos custodes?,” you ask. We use deadmanssnitch to ensure that our alerter is up, running, and healthy.

System Layout

The following diagram provides an overview of the monitoring system we have.

The CLI

No matter how good a system is, without ease of usage, it’s of no use. We recently started creating a command line interface (CLI) with this in mind. We have the following goals for the CLI which is targeted towards developers as the primary users -

- Make it absolutely painless to get code from source control to deployment.

- Create a standardized way of declaring application configuration and create commands that are similar to tools that developers already use.

- Avoid abstracting out or hiding tools and features that are already available. Instead, utilize what’s already available and add tools that enhance the overall experience.

- Rapidly develop features, release, and actively act on feedback from developers.

The CLI provides the following commands -

- init - Creates basic configuration and templates to get started.

- deploy - This is a one stop shop. It gets code from the github repository, builds it and pushes it to docker repository, creates configuration files for the target environment, and then pushes it into RogerOS - all in one go.

- git-pull - Pulls code from a github repo.

- build - Builds the application and optionally pushes it to the docker registry (if applicable).

- push - Pushes the application into RogerOS.

- shell - Sets up an interactive bash session to a task running on RogerOS.
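The relationship between these commands can be sketched with a small argparse-based parser. This is only an illustration and not the actual CLI: the program name `roger`, the `app` argument, and the exact way deploy is expanded into steps are assumptions, and the configuration generation that deploy performs is left out for brevity.

```python
import argparse


def build_parser():
    """Build a parser with one subcommand per CLI command."""
    parser = argparse.ArgumentParser(prog="roger")  # hypothetical name
    sub = parser.add_subparsers(dest="command", required=True)
    for name, help_text in [
        ("init", "create basic configuration and templates"),
        ("git-pull", "pull code from a github repo"),
        ("build", "build the application"),
        ("push", "push the application into RogerOS"),
        ("deploy", "git-pull, build and push in one go"),
        ("shell", "open an interactive session to a running task"),
    ]:
        cmd = sub.add_parser(name, help=help_text)
        cmd.add_argument("app", help="application name (hypothetical)")
    return parser


def steps_for(command):
    """Expand a command into the ordered steps it would run."""
    if command == "deploy":
        return ["git-pull", "build", "push"]
    return [command]


args = build_parser().parse_args(["deploy", "myapp"])
print(steps_for(args.command))  # ['git-pull', 'build', 'push']
```

Treating deploy as a composition of the smaller commands keeps each step usable on its own, which is in line with the goal of not hiding tools that are already available.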

Experience

As can be imagined from the design details, RogerOS is a fairly complex system and has been under development for quite a while. Today, a small cluster of 8 (albeit beefy) machines runs a wide variety of applications for Moz -

- 8 nsqd instances along with nsqlookupd and nsqadmin instances

- 2 mongodb replica sets with 3 replicas each

- A few redis instances

- About 20 different apps with numerous (over 150) instances that support Moz Content

- Over 70 instances of various apps that support products that are either in beta or are launching in the future (hush hush!!)

- Numerous tasks that are used internally by RogerOS.