Moz Developer Blog

Moz engineers writing about the work we do and the tech we care about.

Near-Duplicate Detection

Posted by Dan Lecocq on January 27, 2015

Duplication of content on the Internet is inevitable, whether it be intentional or accidental, malicious or coincidental. There are a number of reasons that it's important to identify duplication, and almost as many approaches. We've historically used a small handful of techniques, and here we'll talk about them and how we've come to use what we use today.

Why Does Duplication Matter?

The primary reason to identify duplication is that cause problems with search engine rankings. From the search engines' view, it can represent cruft on the Internet and make it difficult to determine what is the definitive source. If you have several different URLs that have effectively the same content, it can also dilute your link juice across many pages, rather than aggregating it to a single higher-ranking page. The following diagram shows how using a rel canonical tag helps you to help the search engines by identifying the definitive source for your content and concentrate your link juice at the same time. Duplicate content is an important issue not only because of its importance for rankings, but also because on-site issues are something over which we have concrete control. Web site owners can make any necessary changes to effect the correct behavior.

Unintentional duplication can happen in a lot of ways, but some of the more common causes are:

Duplicate content is an important issue not only because of its importance for rankings, but also because on-site issues are something over which we have concrete control. Web site owners can make any necessary changes to effect the correct behavior.

Unintentional duplication can happen in a lot of ways, but some of the more common causes are:

- 'www' vs. 'non-www' versions of the same page

- pagination

- pages that differ only by a parameter, especially sort order

- different parameter orders, like '?a=1&b=2' and '?b=1&a=2'

What to Do About It?

Once you've detected duplicated content, typically you'll decide one of two things: First, the pages might really be intended to be unique content and perhaps they only need to be better-differentiated; alternatively, you might discover that the pages are essentially the same with only subtle changes, in which case you would designate one the primary source using rel canonical. While Moz tools do a good job of providing you insight into your duplicates over time, when you're actively fixing these issues it can be helpful to get more-immediate feedback with spot checks using tools like webconfs.How to Identify Duplication

There are many different ways that machines (that is, search engines and Moz) can attempt to identify duplicate content.- Bag of Words - Comparing the words and frequency of those words on a page with those on another page.

- Shingling - This method improves on the "Bag of Words" approach by comparing short phrases, providing some context to the words.

- Hashing - This method further improves the process by eliminating the need to store copies of all of the content. The phrases are hashed into numbers, which can then be compared to identify duplication.

- MinHash - Helps streamline the process of storing content hashes.

- SimHash - Further streamlines the process of storing content hashes and duplication detection.

Bag of Words

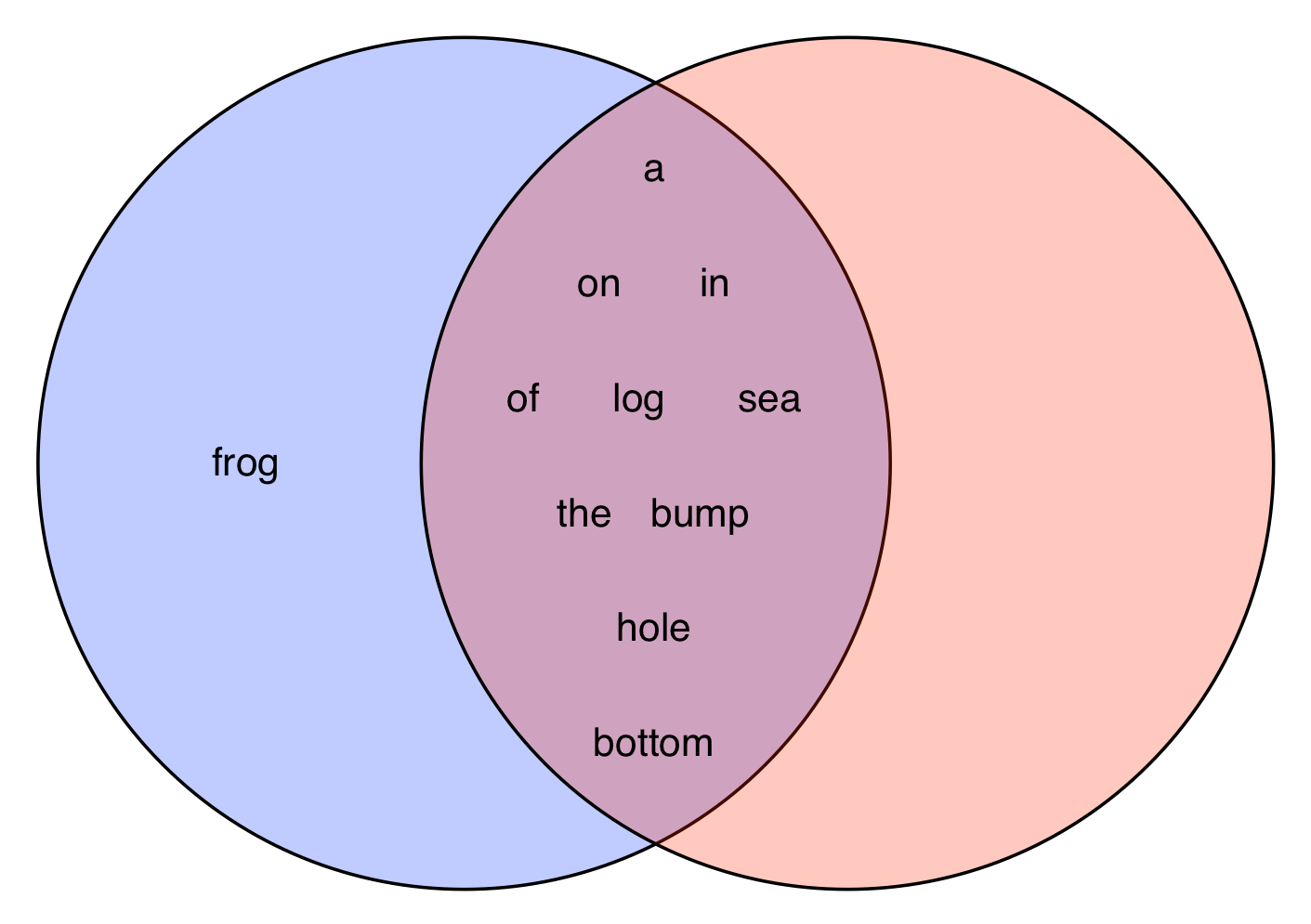

Let's suppose that we've got a set of documents and for each one, we'd like to be able to find its duplicates. For this, we'd go to each document and then check every other to see if it's a match, so let's describe what we'll mean by a 'match.' Naïvely, we might begin by treating each document as a bag of words. So, a document like "a bump on the log in the hole in the bottom of the sea" is transformed to the set of the words it contains:{a, in, of, on, the, log, sea, bump, hole, bottom}

To compare two documents, we figure out how many words they have in common and compare that to the number of words that appear in both sets. For those playing at home, this is called the Jaccard similarity coefficient.

So if we compare our example document to another one, "a frog on the bump on the log in the hole in the bottom of the sea", we see that they have lots of words in common relative to the total number of words. These, then, are considered very similar.

Alternatively, if we compared it to a different document, "your mother drives you in the car", then we find that there aren't very many words in common relative to the total number of words. These, then, are considered very dissimilar.

Alternatively, if we compared it to a different document, "your mother drives you in the car", then we find that there aren't very many words in common relative to the total number of words. These, then, are considered very dissimilar.

This is all well and good, but it has some problems. While it worked well for identifying the similar documents above, this approach would also identify these two documents as being similar: "Your mother drives you in the car" and "In mother Russia, car drives you!" These documents are completely different, and yet they use mostly the same words. Clearly a better approach is needed.

This is all well and good, but it has some problems. While it worked well for identifying the similar documents above, this approach would also identify these two documents as being similar: "Your mother drives you in the car" and "In mother Russia, car drives you!" These documents are completely different, and yet they use mostly the same words. Clearly a better approach is needed.

Shingling

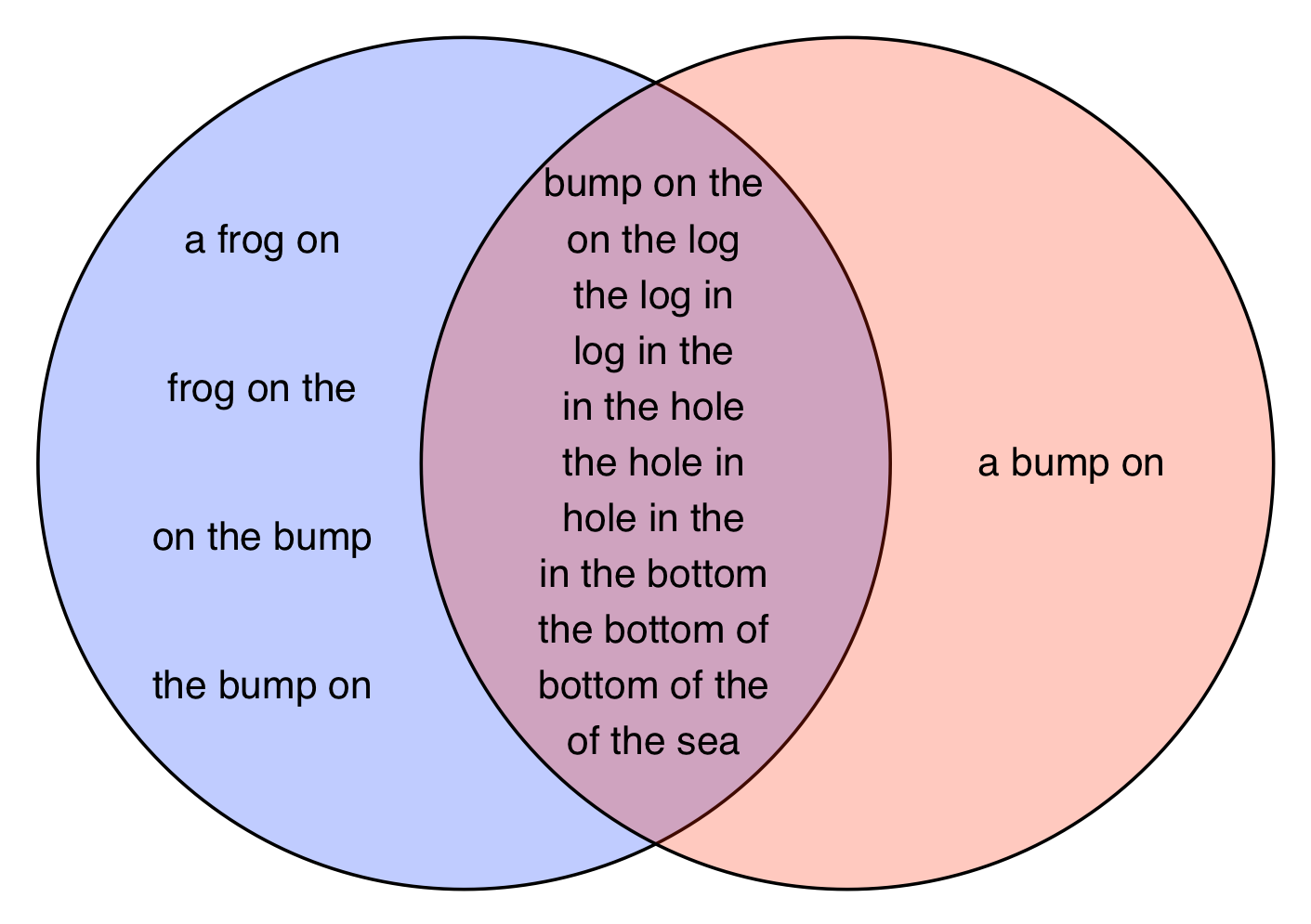

The problem with the "Bag of Words" approach is that it ignores the context of words. In particular, the words that surround any given word. As the above example illustrates, this is important. So, instead of just treating each document as a bag of words, let's instead turn each document into a set of overlapping phrases of a few words at a time. This approach is known as shingling because each phrase overlaps its neighbors, much like shingles on a roof. So for, "Your mother drives you in the car," we'd get a set like this:{

'your mother drives',

'mother drives you',

'drives you in',

'you in the',

'in the car'

}

Applying this approach and comparing the number of phrases shared by our sentences relative to the total number of phrases, we see that they actually are quite different:

Returning to our original arboreal herpetology example, we again find that the documents are relatively similar:

Returning to our original arboreal herpetology example, we again find that the documents are relatively similar:

Looks like we're making some progress.

Looks like we're making some progress.

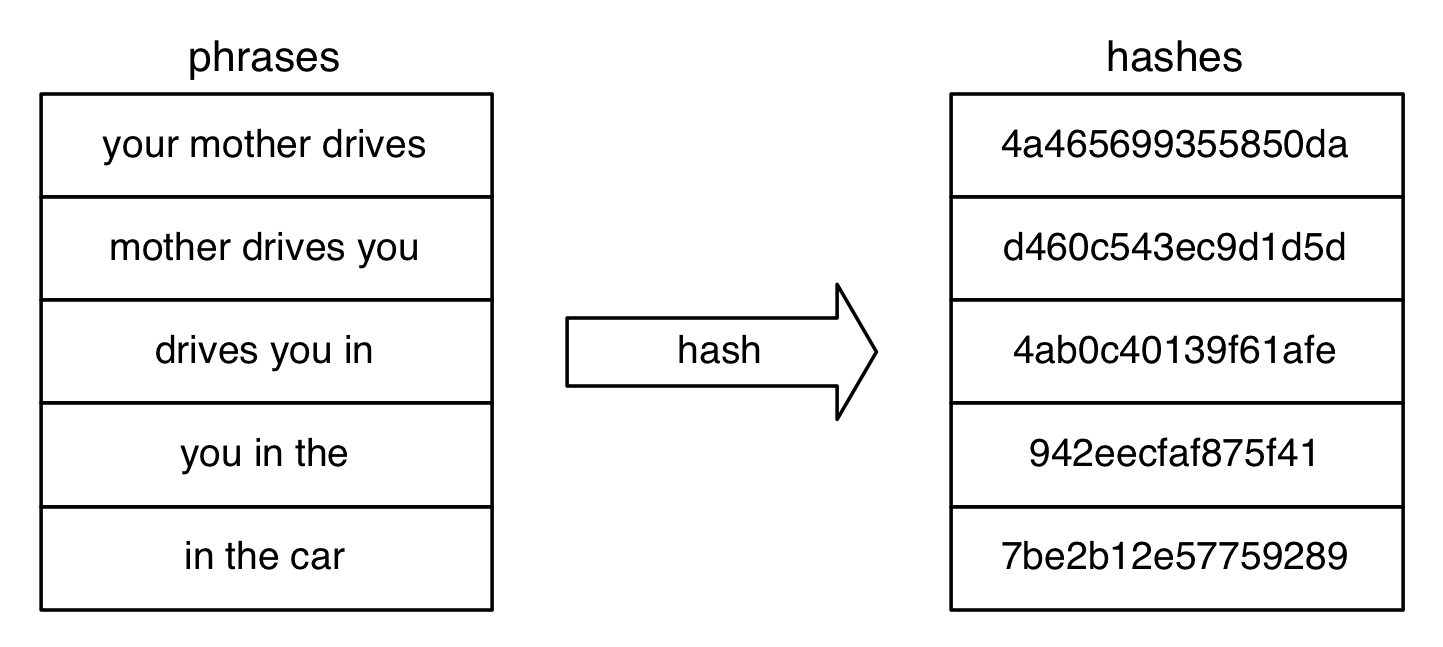

Hashing

One of the problems with this bag of phrases approach is that we actually have to store the document several times over. In particular, if we use phrases of k words, then each word will appear in k phrases (except for words at the beginning and end), making the storage requirements O(nk). To make our lives easier, we'll turn each phrase into a number representative of the phrase using a hash function. So now instead of a bag of phrases, we have a bag of numbers on which we can still perform the same set intersection. To avoid confusion, we'll refer to these as 'phrase hashes.' MinHash

MinHash

While hashing reduces our per-document storage requirements down to O(n) in the number of documents, it's still too much. A lot of pages are really big, and when comparing two documents to determine the overlap, it must take at least O(n + m) time.

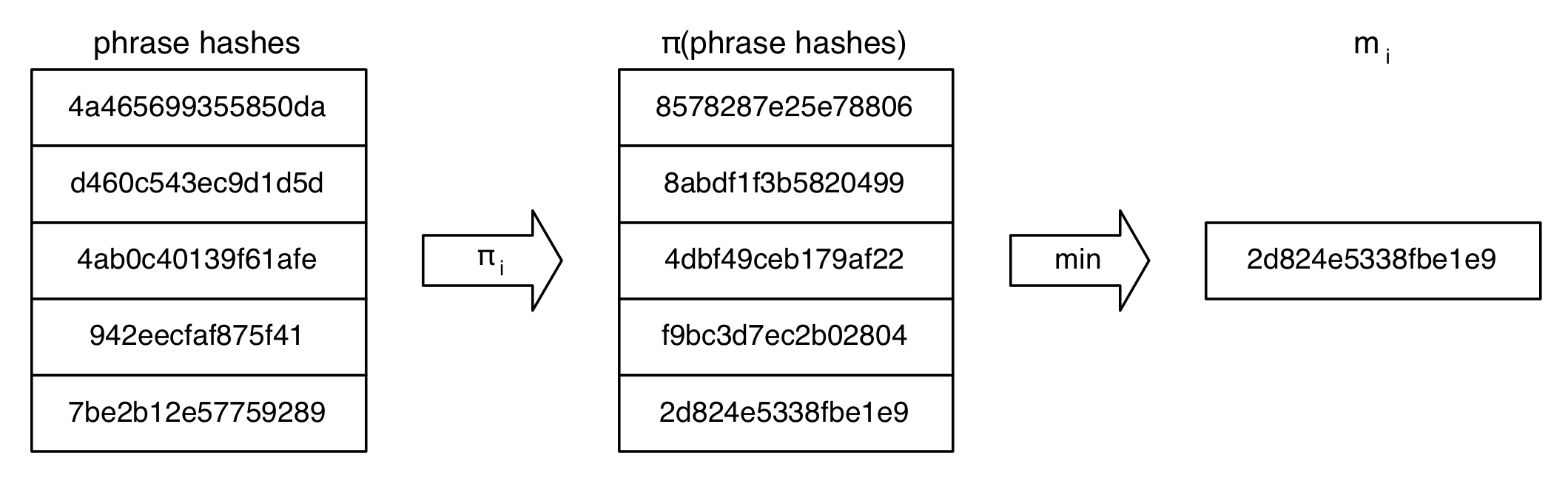

MinHash is an approach that is capable of using constant storage independent of the document length and producing a good estimate of our similarity measure. This approach distills down each document to a fixed-size set of hashes as a rough signature of this document. This feat is accomplished by using a set of k randomizing hash functions. For each randomizing hash function denoted πi, we pass the entire document's phrase hashes through to get a minimum hash denoted mi.

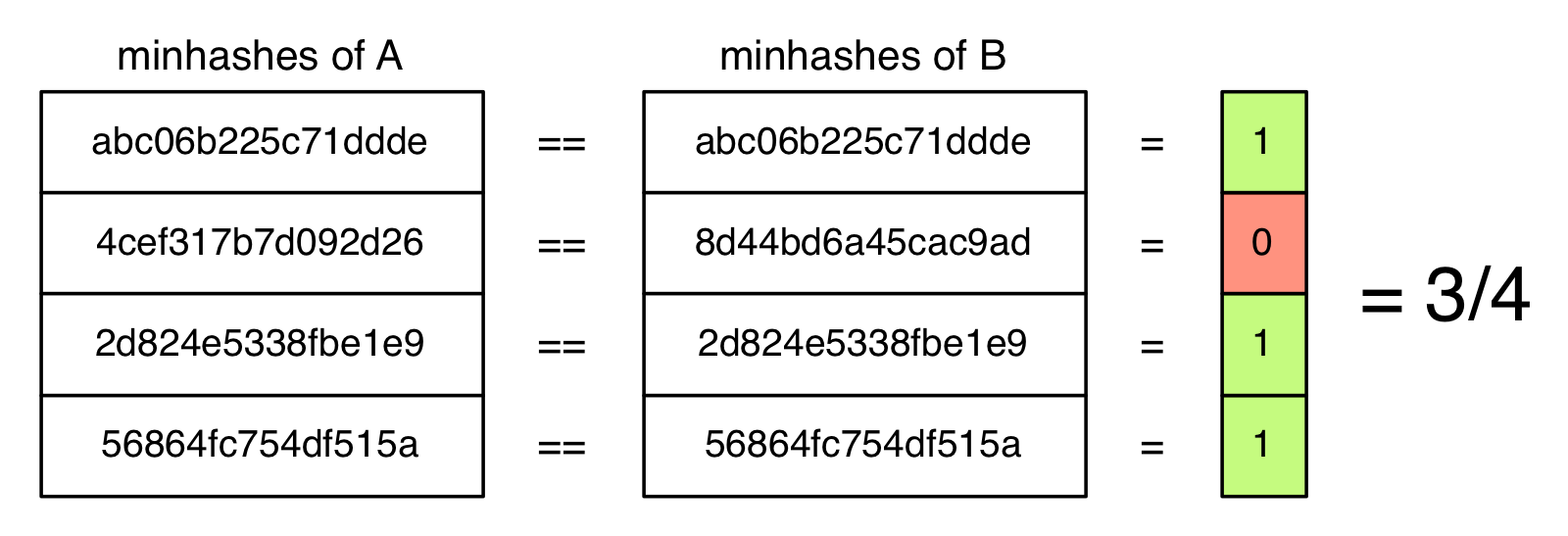

The signature of the document is now the ordered list of these minimum hashes m0 through mk-1. This method achieves an approximation to Jaccard similarity by comparing pairwise each of the document's minimim hashes and giving a portion that are identical.

The signature of the document is now the ordered list of these minimum hashes m0 through mk-1. This method achieves an approximation to Jaccard similarity by comparing pairwise each of the document's minimim hashes and giving a portion that are identical.

This is a big win in two ways: the storage required for each document is now O(1), and our pairwise document comparison is now also O(1), an improvement over O(n) and O(m + n) respectively.

While this improves the complexity for comparing any two documents, we're still faced with the problem of finding any (or all) duplicates of a query document given a set of known documents. Using this method, we have to do a linear traversal of all the known documents in O(n) time. For campaign crawls, we crawl every page of a site and determine all duplicates of each page within the site. For many campaigns, this is not particularly burdensome, but a number of campaigns have hundreds of thousands or a million pages, at which point this becomes prohibitive.

This is a big win in two ways: the storage required for each document is now O(1), and our pairwise document comparison is now also O(1), an improvement over O(n) and O(m + n) respectively.

While this improves the complexity for comparing any two documents, we're still faced with the problem of finding any (or all) duplicates of a query document given a set of known documents. Using this method, we have to do a linear traversal of all the known documents in O(n) time. For campaign crawls, we crawl every page of a site and determine all duplicates of each page within the site. For many campaigns, this is not particularly burdensome, but a number of campaigns have hundreds of thousands or a million pages, at which point this becomes prohibitive.

Simhash

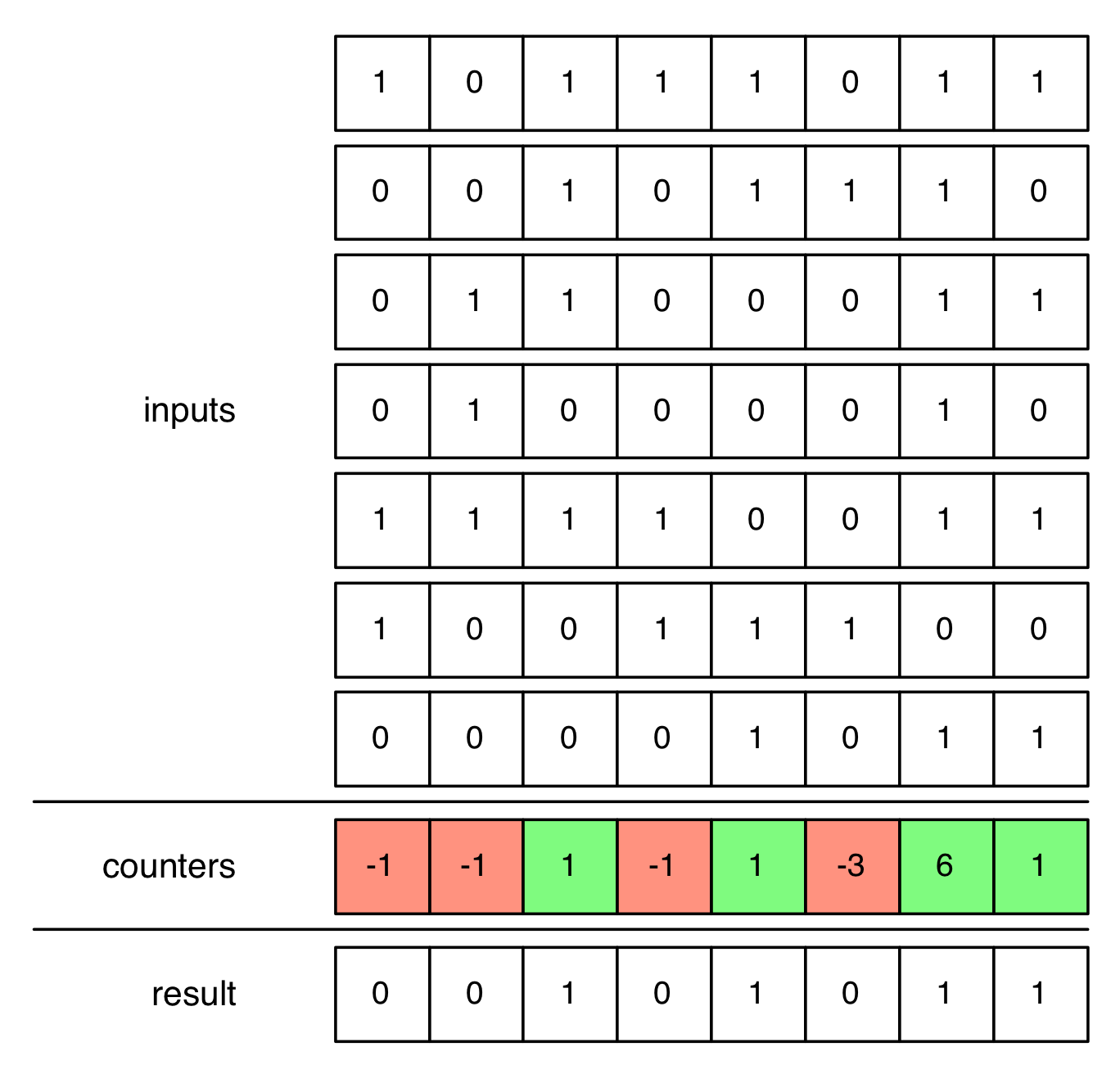

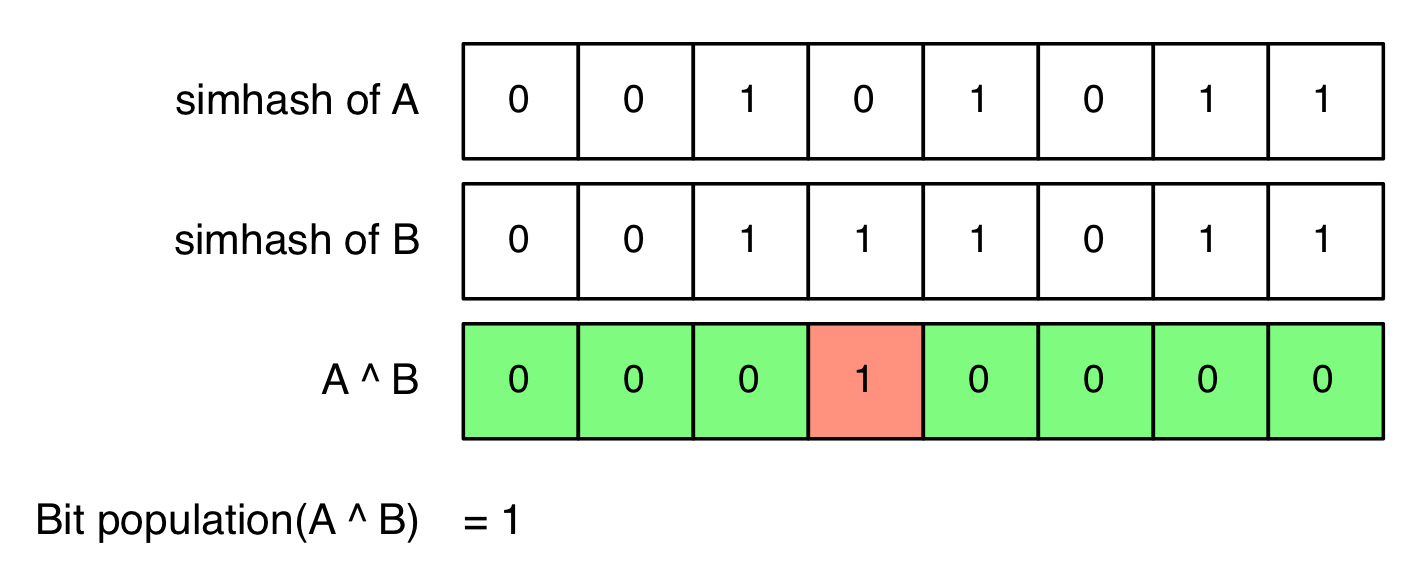

Simhashing is a technique that helps us to overcome this problem. Given a set of input hashes, simhash produces a single hash with an interesting property -- two similar sets of input hashes produce similar resultant hashes. Most hashing functions are chosen for the property that slightly different inputs have potentially very-different outputs, which makes simhash unique. For each bit position, we count the number of input hashes with that bit set and subtract the number of input hashes with that bit not set. Once done, every position with a negative counter is set to 0 and every other position is set to 1. To measure the similarity of two simhashes, we count the number of bits that differ between two queries as a measure of dissimilarity. Conversely, the number of bits that are the same is a measure of similarity. This can be concisely computed as bit_population(a ^ b).

To measure the similarity of two simhashes, we count the number of bits that differ between two queries as a measure of dissimilarity. Conversely, the number of bits that are the same is a measure of similarity. This can be concisely computed as bit_population(a ^ b).

This comes with the obvious win of rather than storing 128-or-so hashes for each document, we now only have to store a single integer as a fingerprint. But the bigger win comes with finding a set of near-duplicate documents.

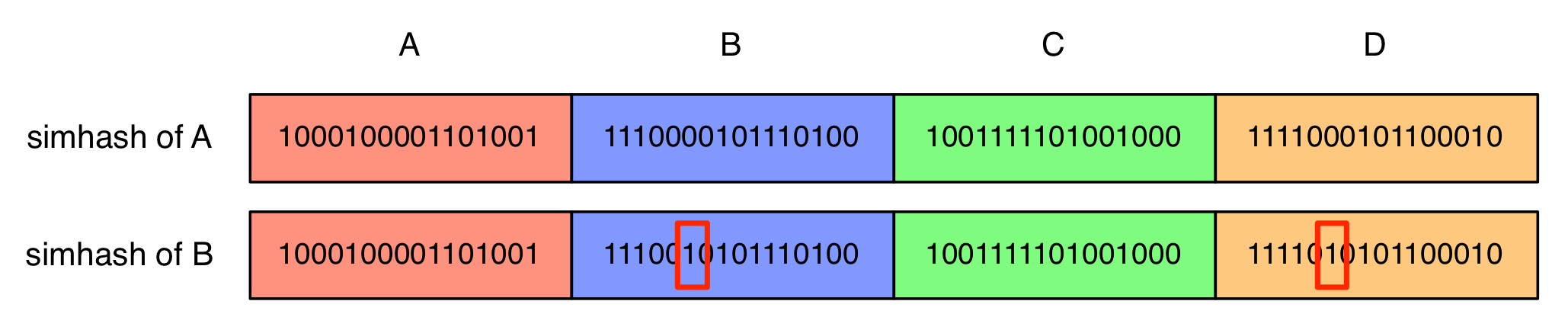

Let's say for example, that in order to be considered duplicates, documents must have, at most, 2 bits that differ. We'll conceptually divide our 64-bit hash into 4 bit ranges of 16 bits called A, B, C and D. If two documents differ by at most two bits, then the different bits appear in, at most, two of these bit ranges. The flip side of this observation is that the remaining bit ranges must be identical.

This comes with the obvious win of rather than storing 128-or-so hashes for each document, we now only have to store a single integer as a fingerprint. But the bigger win comes with finding a set of near-duplicate documents.

Let's say for example, that in order to be considered duplicates, documents must have, at most, 2 bits that differ. We'll conceptually divide our 64-bit hash into 4 bit ranges of 16 bits called A, B, C and D. If two documents differ by at most two bits, then the different bits appear in, at most, two of these bit ranges. The flip side of this observation is that the remaining bit ranges must be identical.

It could be A and C that are identical, or B and C, but let's pretend for a moment that we know that it will always be A and B that are identical. We could keep all the simhashes in sorted order, and then for a query we can find the range of elements that share that same AB prefix and only compare to those elements. We can find this range in logarithmic time, which is the big win.

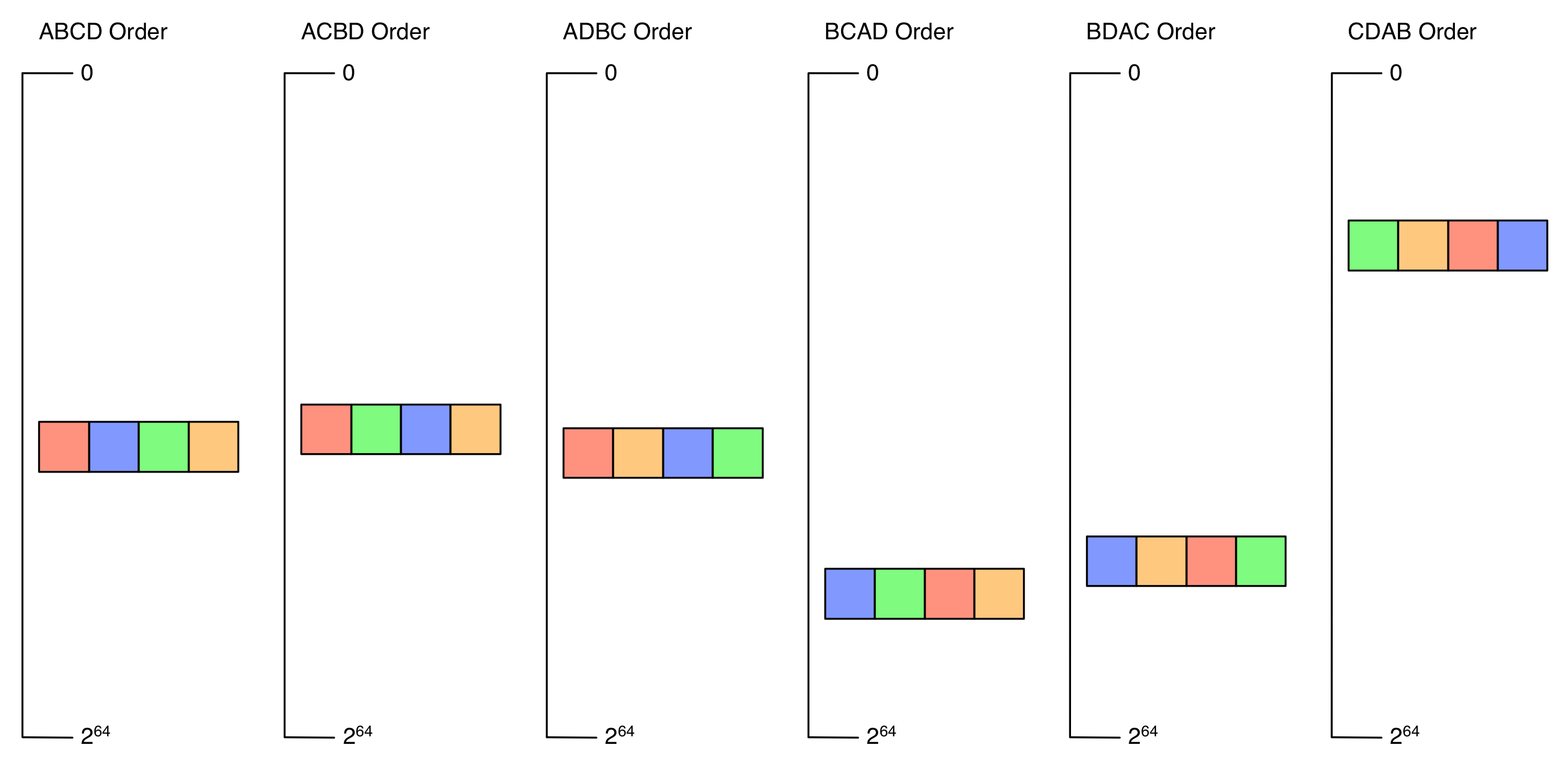

Since we don't know a priori which bit ranges will match, we simply maintain a few different sorted orders based on all the possibilities where bit ranges might match. For this example, we know that any of these combinations might match: AB, AC, AD, BC, BD, or CD. So instead of keeping one sorted list, we'll keep 6 sorted lists, each with the simhashes rearranged in one of these permutations: ABCD, ACDB, ADBC, BCAD, BDAC and CDAB.

It could be A and C that are identical, or B and C, but let's pretend for a moment that we know that it will always be A and B that are identical. We could keep all the simhashes in sorted order, and then for a query we can find the range of elements that share that same AB prefix and only compare to those elements. We can find this range in logarithmic time, which is the big win.

Since we don't know a priori which bit ranges will match, we simply maintain a few different sorted orders based on all the possibilities where bit ranges might match. For this example, we know that any of these combinations might match: AB, AC, AD, BC, BD, or CD. So instead of keeping one sorted list, we'll keep 6 sorted lists, each with the simhashes rearranged in one of these permutations: ABCD, ACDB, ADBC, BCAD, BDAC and CDAB.

For any given query, we then check a fixed number of sorted lists, do a O(d * ln(n)) lookup and a small number of comparisons, and we've found all near-duplicates of our query. When conducted across distributed storage, each of these d queries can also be conducted in parallel.

This approach has led to performance improvements for the purposes of campaign crawl, but it's also the way we make near-duplicate-detection tractable for Fresh Web Explorer. We have a number of repos implementing simhash and near-duplicate detection in python, c++, and in a number of database backends.

For any given query, we then check a fixed number of sorted lists, do a O(d * ln(n)) lookup and a small number of comparisons, and we've found all near-duplicates of our query. When conducted across distributed storage, each of these d queries can also be conducted in parallel.

This approach has led to performance improvements for the purposes of campaign crawl, but it's also the way we make near-duplicate-detection tractable for Fresh Web Explorer. We have a number of repos implementing simhash and near-duplicate detection in python, c++, and in a number of database backends.