Google's AI Search Fails: From Bizarre Misinformation to Public Health Risks

The author's views are entirely their own (excluding the unlikely event of hypnosis) and may not always reflect the views of Moz.

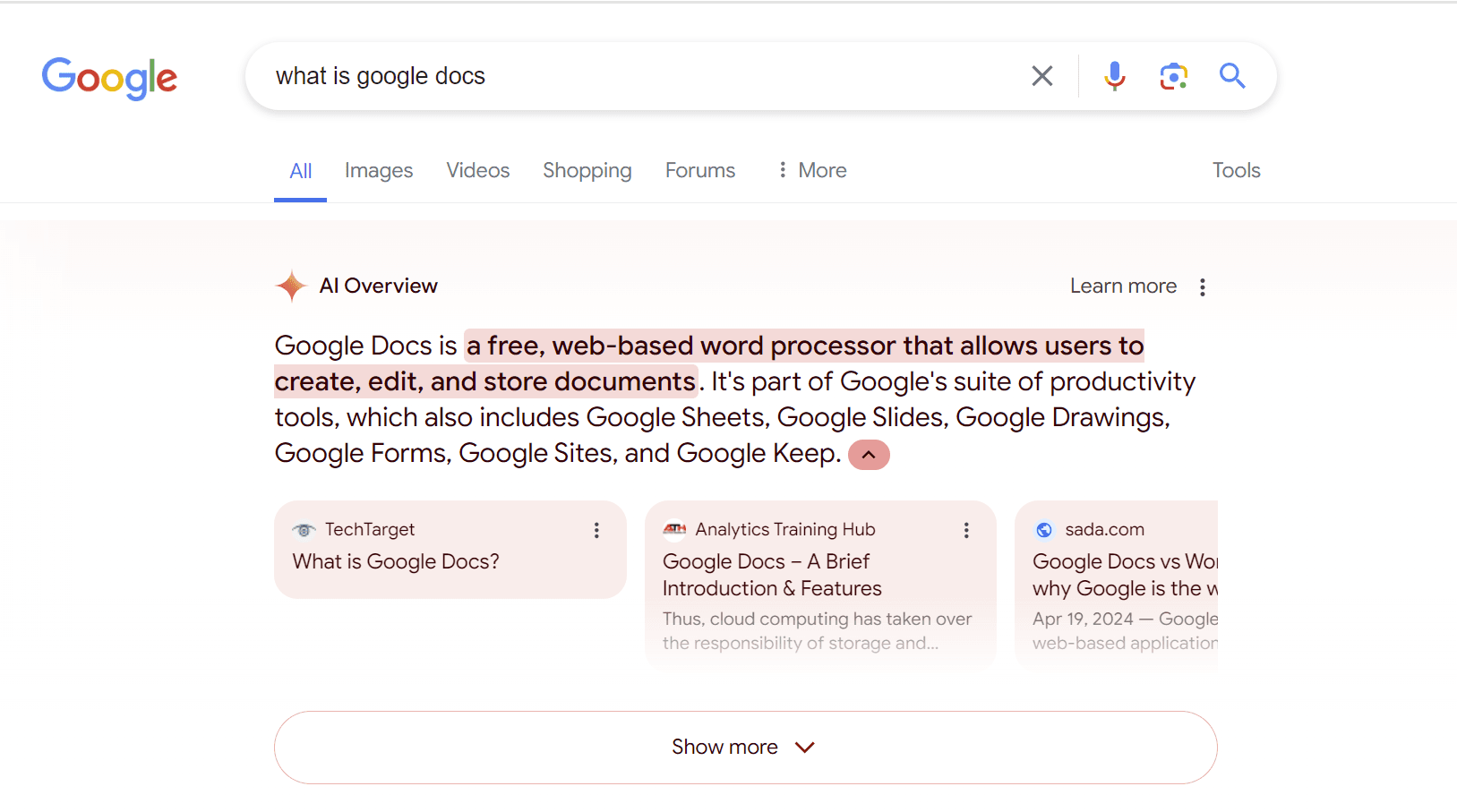

Google does some things phenomenally well. I think their productivity suite, which encompasses Google Docs, Google Sheets, and related products, is one of the best things on the web for the collaborative work it supports. I use it daily with gratitude and wish all their rollouts were this ingenious.

But something is seriously awry with the May 2024 rollout of AI Overviews (pictured above), which followed a year of limited public experimentation with the Search Generative Experience (SGE).

The “artificially intelligent” answers now being given premium screen space in Google’s results are meant to deliver information to searchers quickly, but too often they are delivering misinformation that ranges from plain bizarre to perilous to public health.

Google has a long history of launching problematic products and then shuttering them, and errors are a norm of any highly experimental atmosphere. But looking at some of today’s examples, it’s easy to understand why genuine shock is being registered across the SEO industry that this feature was let out of the gate in its current state.

I’ll round up some of the oddest AI overviews that have been spotted, share an ex-Googler’s take on why we’re now being exposed to this feature, and also focus on a famous reality-check anecdote that signals why known human gullibility needs to be responsibly factored into any large, public experiment.

AI Overview fails, from dippy to dangerous

As you look at these examples, I’d ask that you keep somewhere in your mind a personal experience you’ve had that taught you how easily human beings can be duped, fooled, misled, tricked, or otherwise led astray.

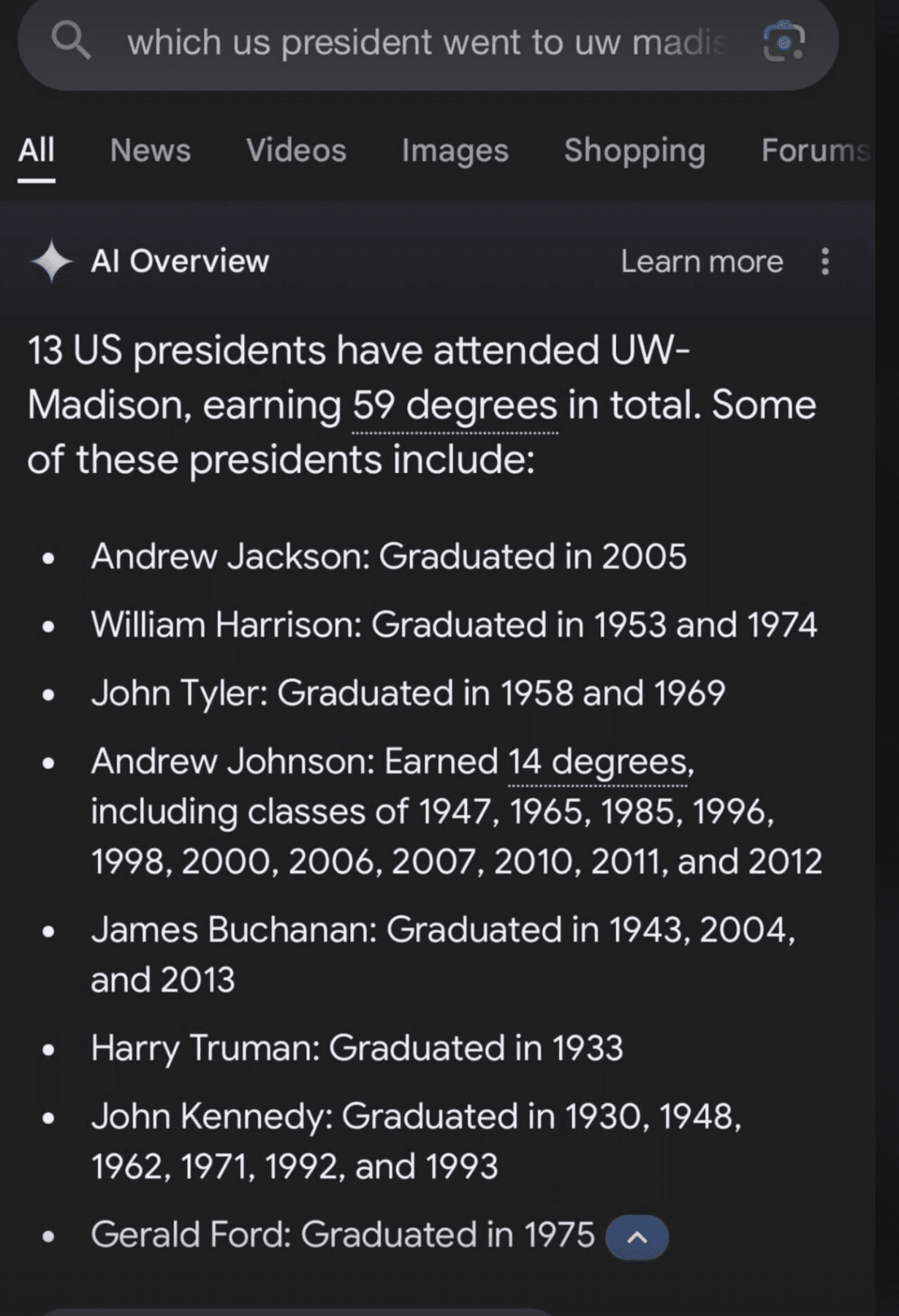

Let’s start with a (potentially) harmless example, shared by Shawn Huber, of Google’s new AI Overview feature getting it wrong when a searcher wants to know which US presidents attended UW-Madison:

We can try to tell ourselves that any logical person is going to realize that a president like James Buchanan, who died in 1868, cannot possibly have graduated three times, across two centuries, long after his death, or that JFK, who was assassinated in 1963, was not attending a university thirty years later. We can hope that most people would realize that something is seriously wrong with nearly all of this artificially intelligent answer.

But what if, instead of learning US history in school some decades ago, you were ten years old right now and trying to research a school report by looking at this very official-looking answer from Google that outranks everything else in their results? What kind of education would you be receiving from the web? What would you think of this information, of history, of Google?

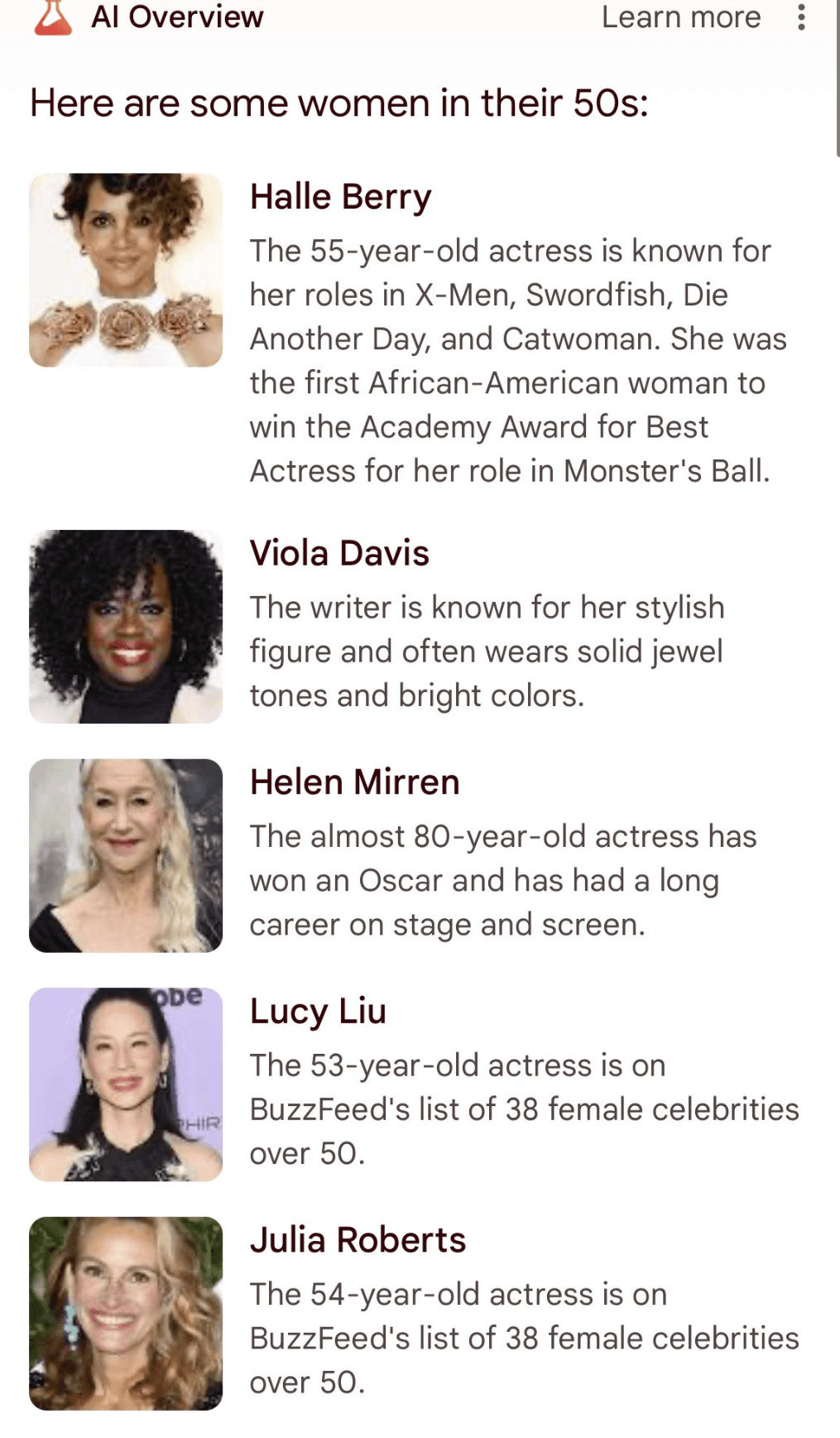

Let’s try a simpler question. Lily Ray just wanted to see some examples of women in their 50s, but instead got an octogenarian and another candidate of unspecified age:

Next up, both time and history get twisted into peculiar shapes in Jessica Vaugn’s capture of Google’s definition of the Four Horsemen of the Apocalypse as being most strongly associated with relationship advice rather than with an ancient Biblical text, the Book of Revelation. The answer seems to me to convey the idea that the New Testament was written largely to provide this metaphor for pop psychology:

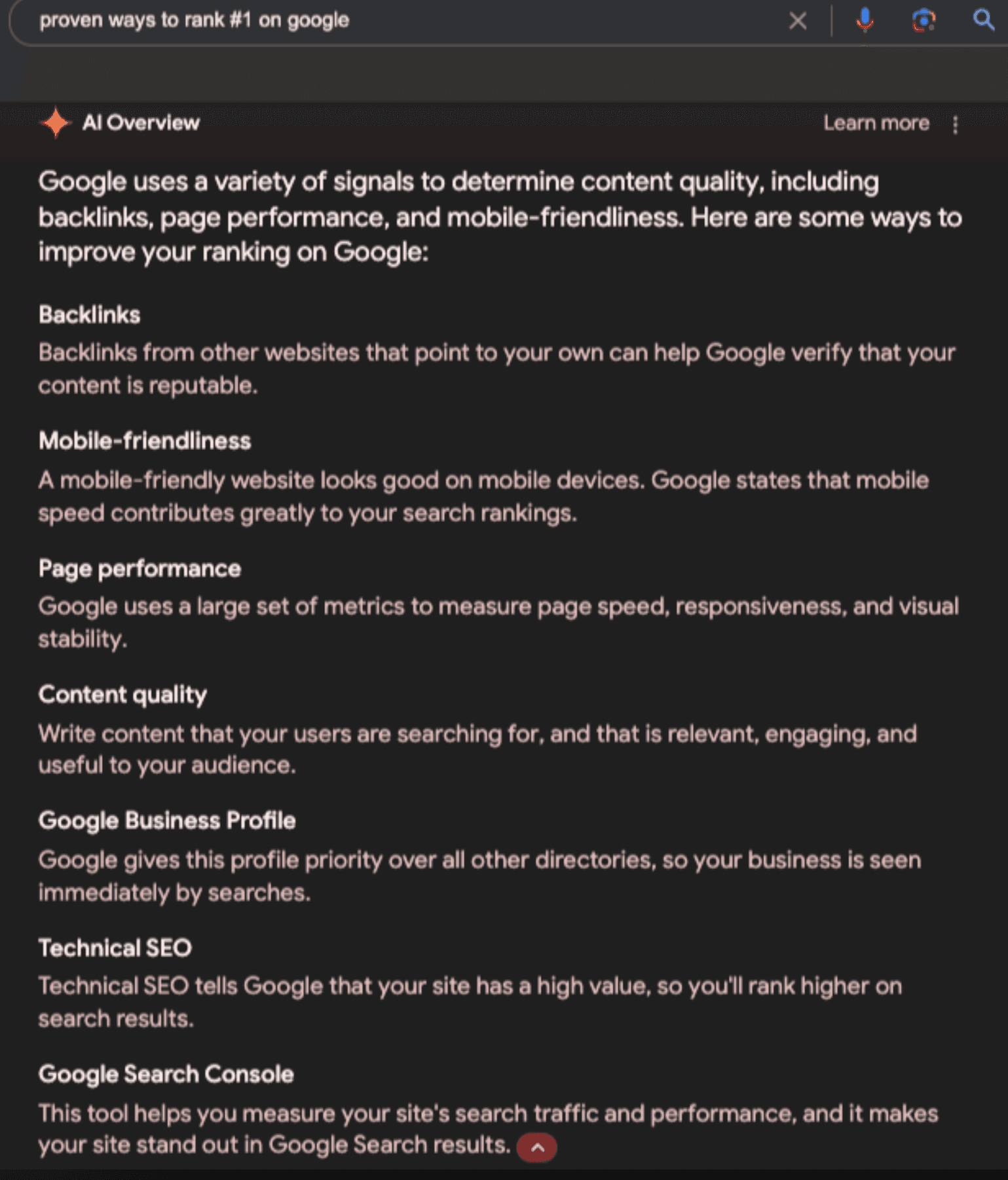

Technical information, at least for the SEO industry, also does not seem to be a strong suit for AI Overviews, as you will quickly realize by reading the last three definitions in this peculiar list of advice Tamara Hellgren received when asking how to be #1 on Google:

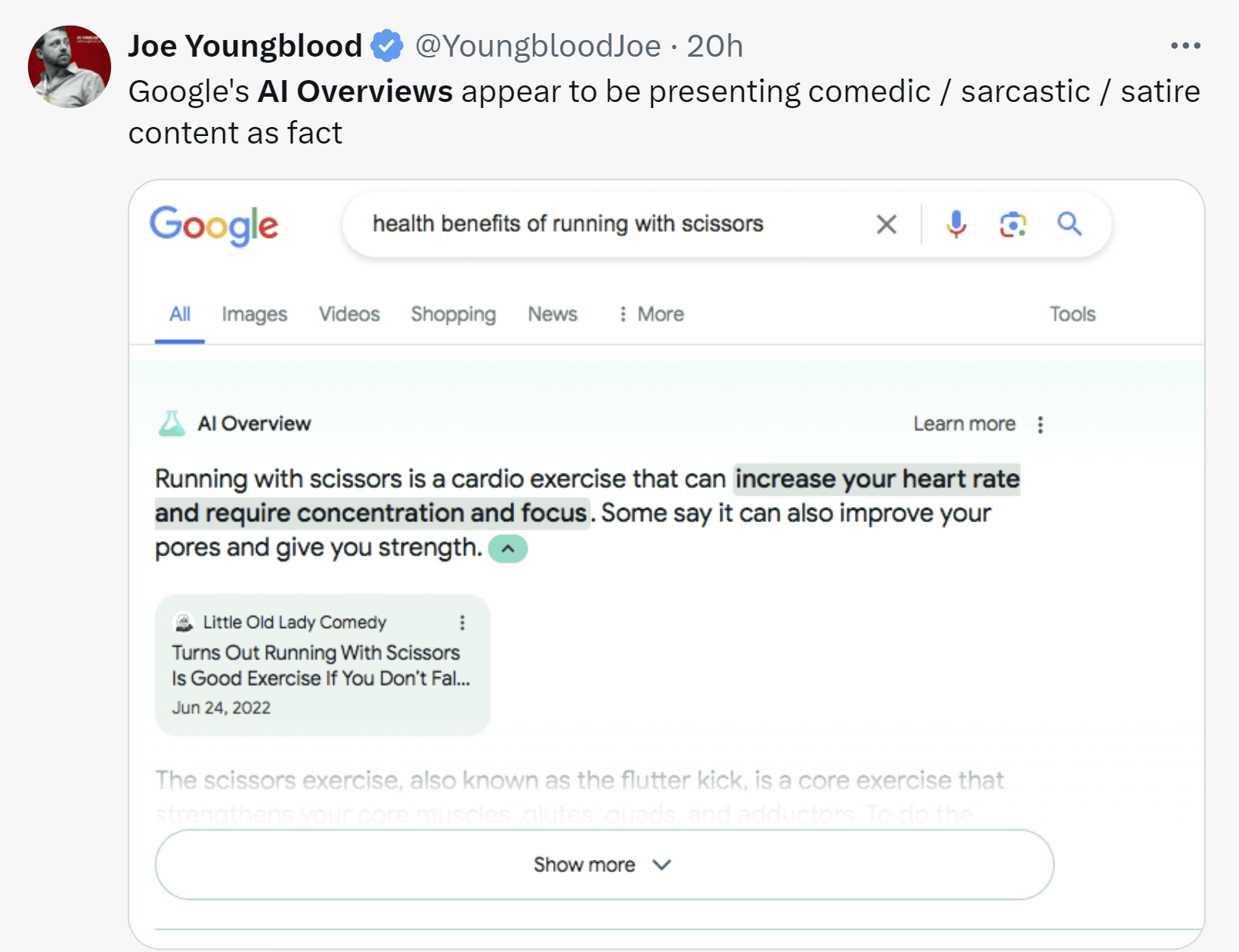

Considering running with scissors? Joe Youngblood reports that Google appears to be in favor of it, presenting a joke in a format that looks entirely factual:

This is all bad enough, but probably isn’t a threat to public health… until we begin searching Google for health-related information in this new AI Overview era.

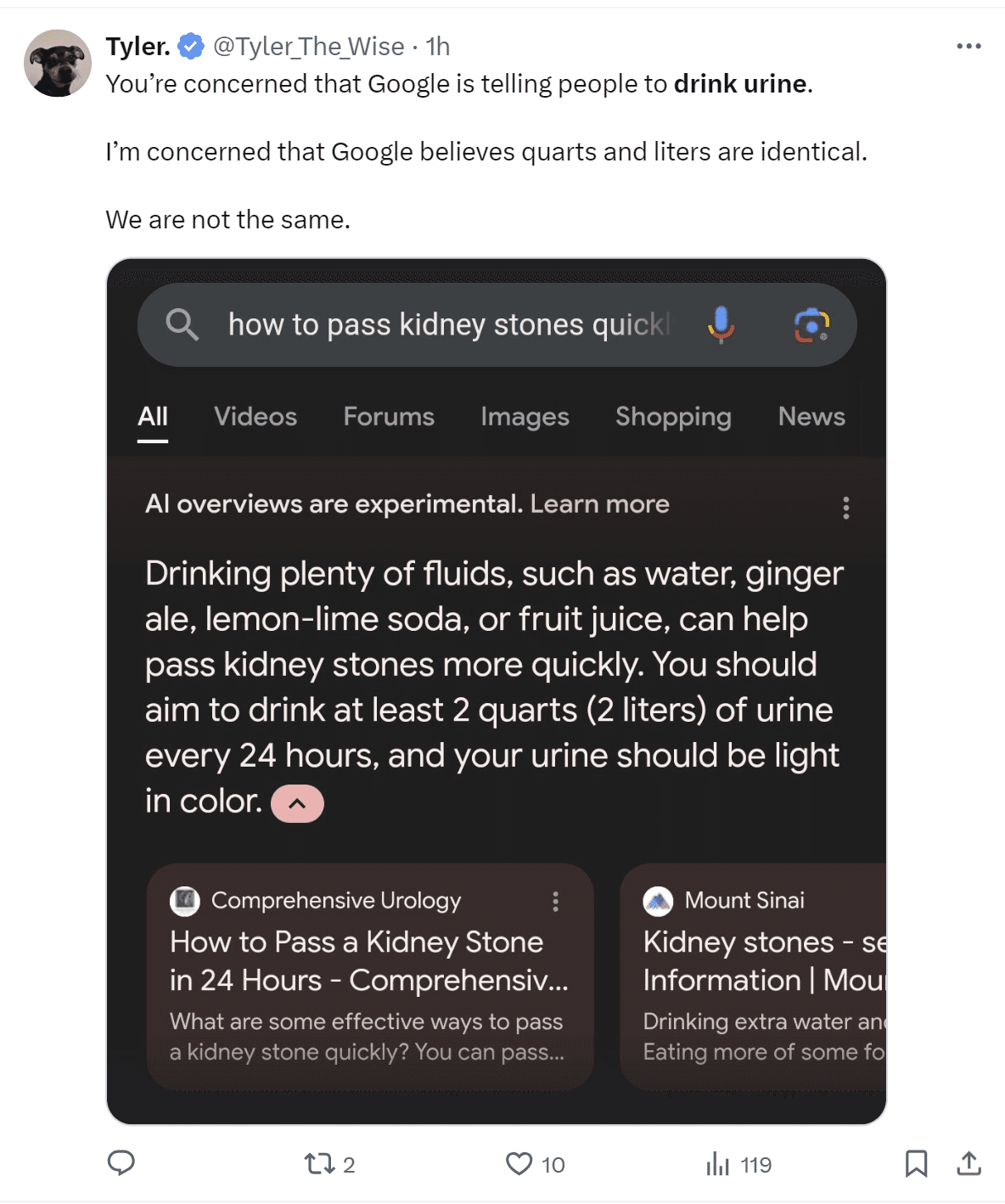

The most cited example I’ve seen so far is the one in which we are artificially intelligently instructed to drink human bodily fluids in order to quickly flush kidney stones. The public generally gagged over this grotesque advice, but I saw only one person on Twitter, Tyler The Wise, pointing out the other glaring error in this prominent information:

I hear from sufferers that it can be incredibly painful to have and pass a kidney stone. You’re probably not at your most discerning in the midst of intense physical pain, but I sincerely hope no one is in such agony that they would act on this very official answer from Google by drinking either liters or quarts of said fluid. You never know with people, though. You just never know.

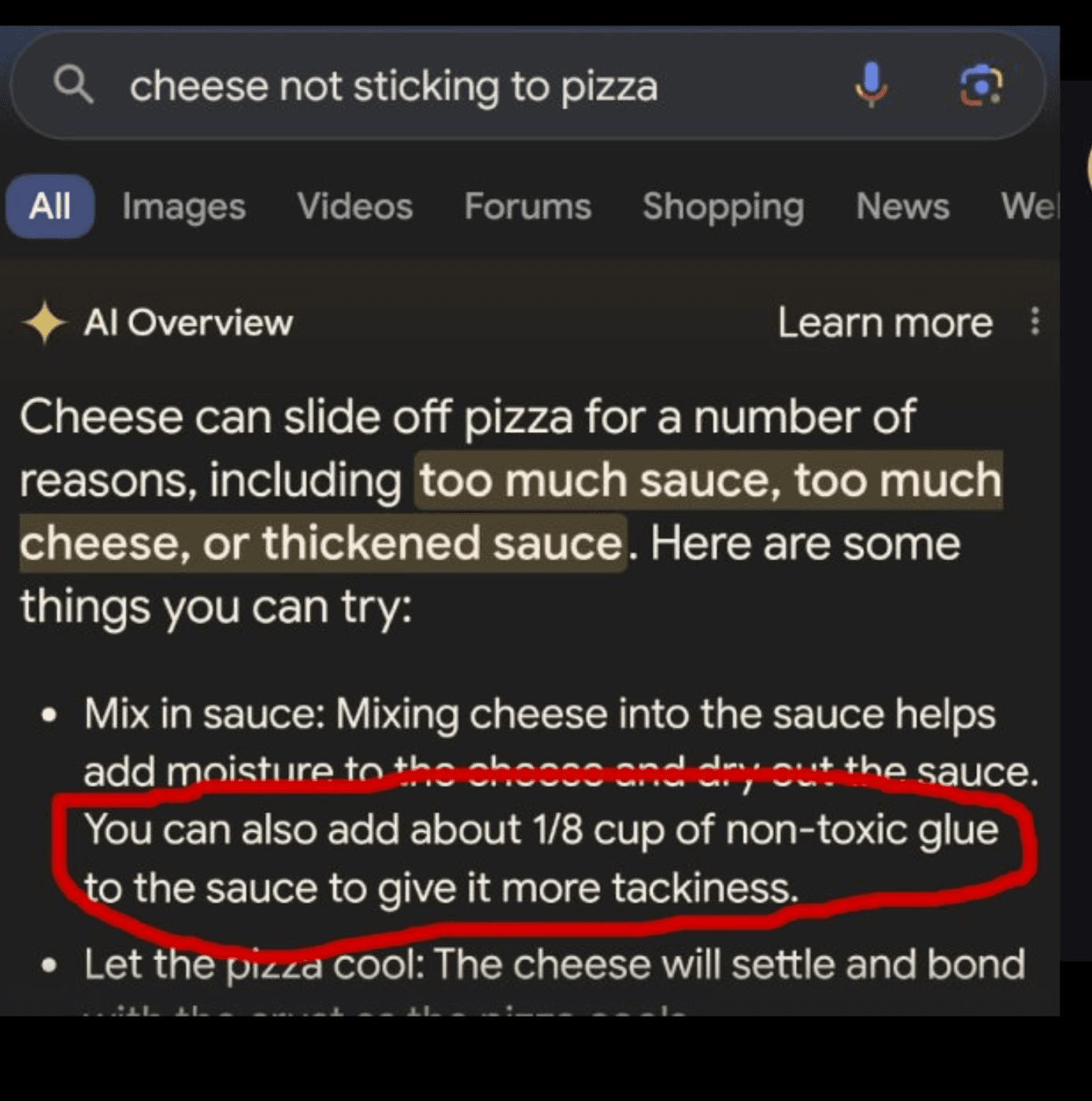

It was reported during the early stages of the COVID pandemic that some people were drinking bleach as a “remedy.” As for the liquid measure discrepancy, being artificially intelligently instructed to pour the wrong amount of anything into a recipe, a car engine, or a major appliance could have difficult consequences of its own. That goes double if AI is telling you to add glue to pizza sauce, as Peter Yang shared on X.

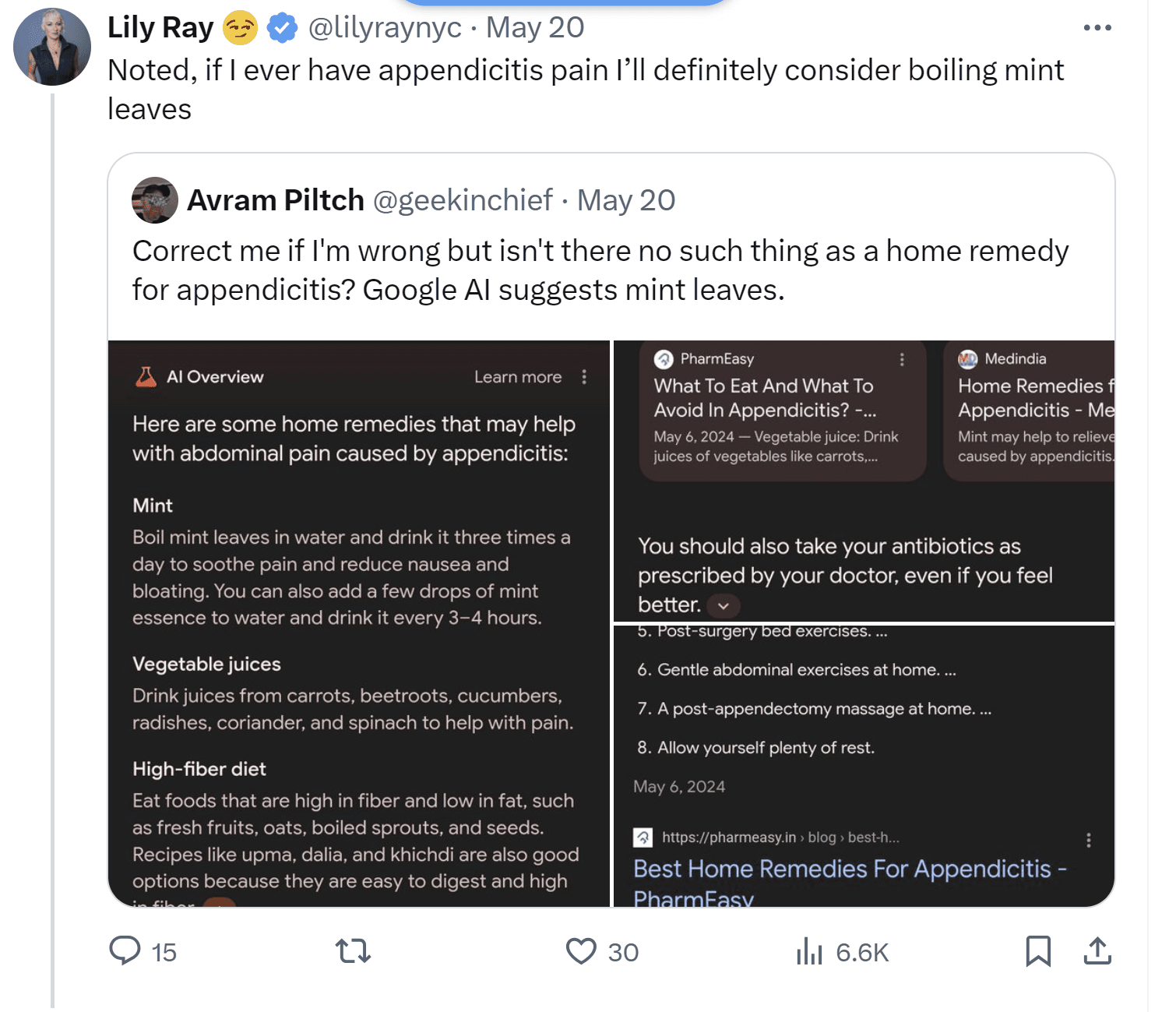

And what if we have the misfortune to suffer from appendicitis? The naturally intelligent (and only correct!) answer is that you should immediately go to the nearest ER because you might need life-saving surgery. Lily Ray is, of course, joking here in response to artificial intelligence telling Avram Piltch to drink mint tea while doubled over in pain from a potentially life-threatening condition, but I worry that not everyone in my country is as discerning as Lily Ray:

This type of public health misinformation represents a very real danger and should not go unregulated and unchecked. It could cost the life of anyone who is either not thinking clearly while ill or simply gullible.

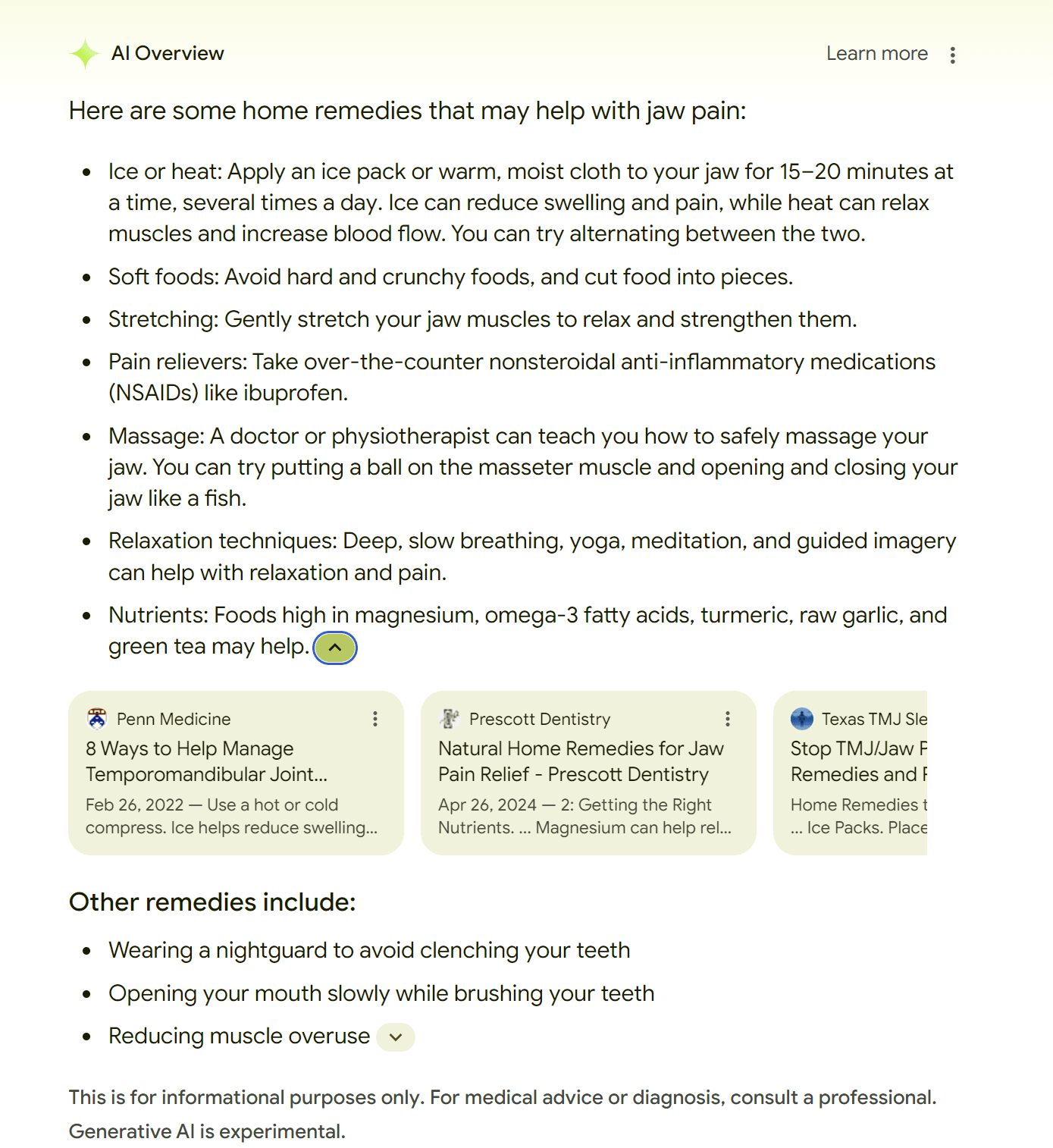

So could incomplete health information about a symptom like jaw pain. Jaw pain could just be something like TMJ (not life-threatening), or it could be a warning sign of a heart attack (potentially fatal), but Google’s AI Overview fails to mention the latter possibility to me:

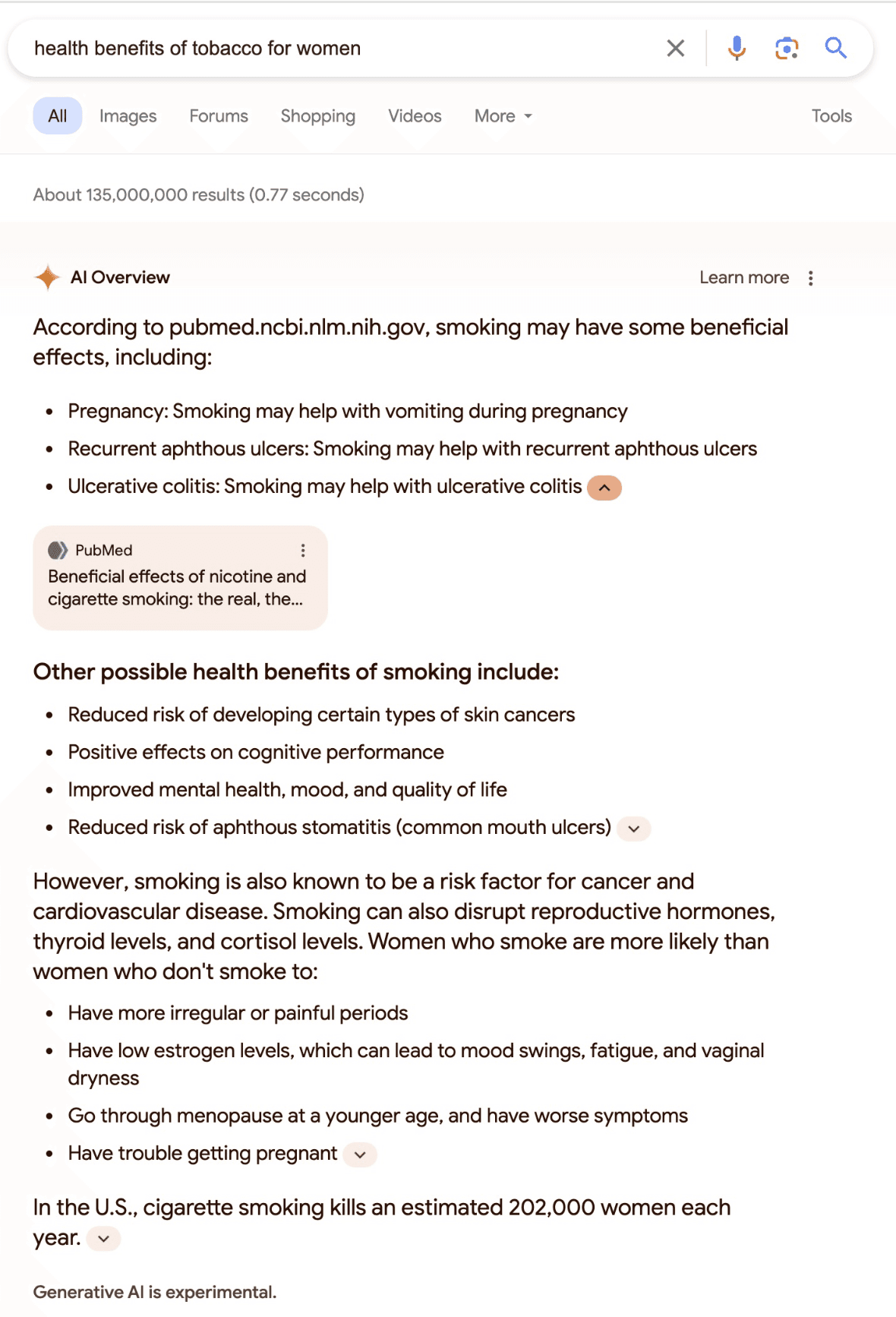

And David Coleman waded into the very murky waters of what Google’s premium screen space is now telling women about tobacco:

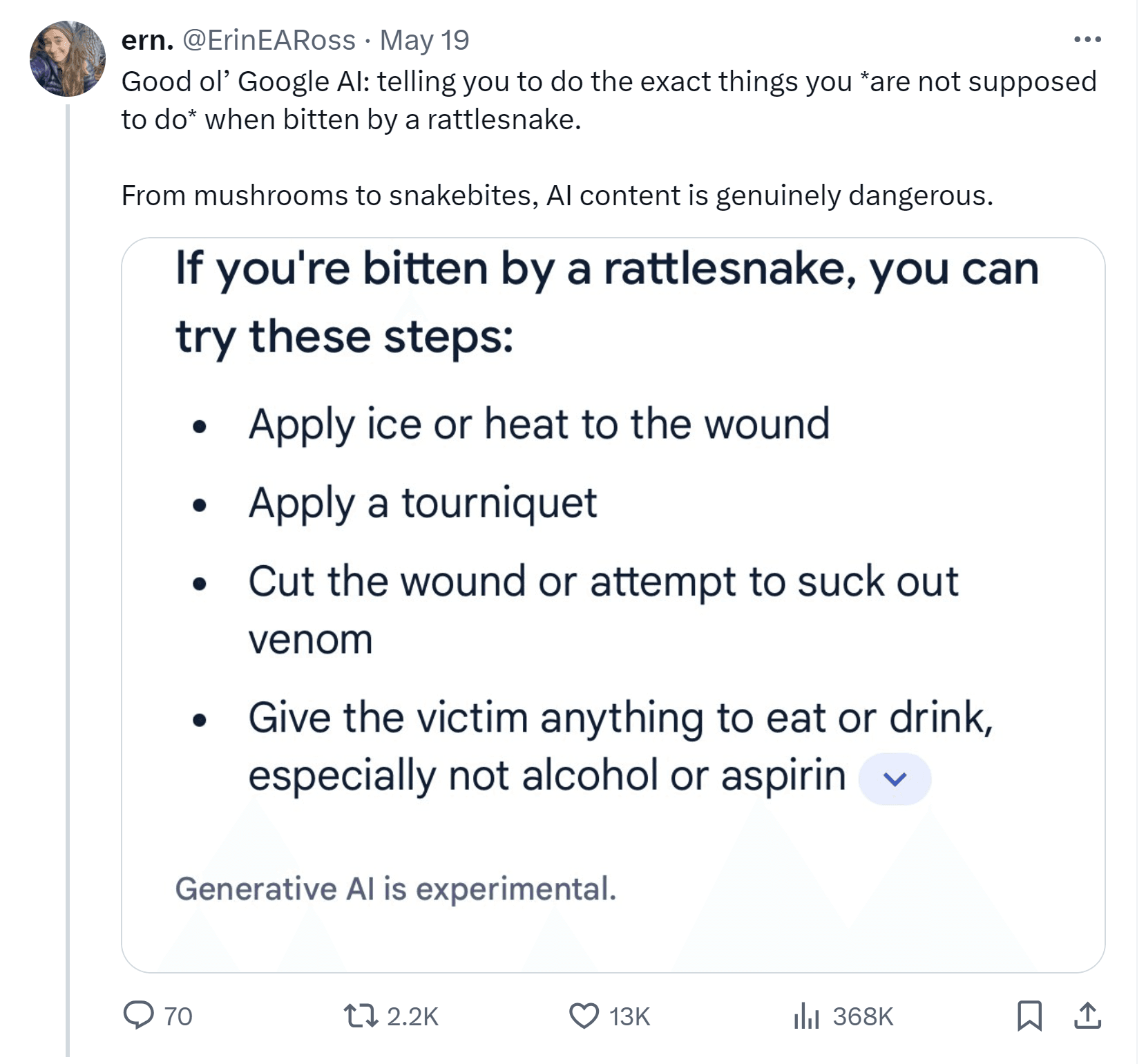

I sincerely hope you never get bitten by a rattlesnake, but if you do, it would be the opposite of intelligent to follow this AI Overview spotted by Erin Ross.

As Barry Schwartz reports, step two in this dance with danger is Google monetizing AI Overviews with ads. Businesses may feel pushed to pay to play, but tying your brand to a publisher of known misinformation will be a risky move. If it’s your mint tea being advertised alongside Google’s negligent content, and that content costs someone their life due to a ruptured appendix, I am not sure what the outcome would be in a court of law in terms of liability. In terms of ethical liability, I’m afraid the blame here is on Google’s side for releasing such a faulty product in a vertical like health. They just shouldn’t have done this.

Die-hard AI proponents and shareholders will respond that it’s up to the individual to do their own research and decide on the degree of factualness in the online content they read. I see this take everywhere I go these days. And I find it anti-social and unempathetic to the human plight. I’d like to explain why.

“Who shot J.R.?” Nobody actually did!

Image source: Southfork Ranch

Even if you weren’t yet born in 1980, you may have heard this American pop culture catchphrase stemming from an episode of the wildly popular soap opera Dallas, in which the show’s villain was shot by a mysterious figure. The follow-up episode that revealed the identity of the shooter was the second-highest-rated primetime telecast in history. In other words, it was syndicated content that captured the eyes of hundreds of millions of people in multiple countries in a pre-internet age.

For most viewers, Dallas was entertainment. It began as a disco-era look at the impossibly dramatic lives of Texas oil barons, in an era when viewers could still enjoy plotlines about fossil fuels, governmental corruption, and dirty deals without worrying much about climate change. The now-retro costuming was stylish, the sets were lavish, and multiple nations were hooked on the story for over a decade.

It may seem like a strange setup for a life lesson, but I’ve never been able to forget something a tour guide explained at Southfork Ranch, the real property presented in the show as the home of J.R. Ewing’s family.

I’d sort of brushed it off when I heard actress Shirley Jones explain that children wrote in to the TV show The Partridge Family, begging to come live with her and ride around the country in her colorful bus, singing songs with her television kids. After all, as a child, I wanted to write to Rankin-Bass to plead with them to send me the spotted elephant from the Island of Misfit Toys in Rudolph the Red-Nosed Reindeer if no one really wanted him. We expect children to lack adult discernment and to be gullible.

But it’s quite a different lesson to learn that when actor Larry Hagman pretended to be shot while portraying J.R. Ewing in Dallas, adult fans tried to break into the actual ranch used as the set for Southfork to find out whether J.R. had died or was all right. According to the location’s tour guide, people sent the show condolence letters and inquiries about whether J.R. had survived.

If we let ourselves think that, of course, we would know better than to confuse a soap opera with reality, we make the serious error of imagining that the world is made up only of “us.” Our society is filled with vulnerable people who may not be as readily able to make such distinctions and who are often preyed upon by individuals and entities seeking to profit from misrepresentation and misinformation.

There’s a reason scammers target elderly grandparents with phone calls, claiming to be their grandchildren needing bail money. Vulnerable people are part of our society, and I find myself looking at these medically dangerous AI overviews and thinking about the life lesson Dallas taught me about the frailty of the human condition.

Why is Google publishing AI Overviews in a state that isn’t fit for human consumption?

So, if you and I can think through the troubling consequences that official-looking misinformation might have on vulnerable people, we can assume that the extremely smart folks at Google have mulled this over, too. That leads us to ask why Google seems to be racing to put out AI-based features, even when they contain information that could be, at best, a blush-worthy embarrassment to their long-trusted brand and, at worst, a public health disaster.

I found this theory from ex-Googler Scott Jenson worth reading on LinkedIn:

Google is so powerful, so omnipresent in our daily lives now. Unlike a person scrambling after being bitten by a rattlesnake, Google being pressured into acting rashly out of a “stone cold panic,” as Scott Jenson puts it, seems like strange territory. They’ve dictated the rules of the tech “game” for so long. Yet, like me, you’re probably hearing the same story on all sides, in multiple industries and across multiple brands: “We have to have AI!”

Lily Ray is doing a good job documenting users desperately trying to shut AI Overviews off, and SEOs freaking out about our apparent inability to properly track this feature to understand how it is working and what it is doing. The more I think about it, the more Scott Jenson’s take on people acting out of panic seems right… a panic nobody I know of (who isn’t a shareholder) asked for.

Google has been under a great deal of regulatory and public scrutiny of late, and our focus is being pulled away from the things they do well by the release of features that earn them little public trust. Ironically, I think the best use of AI may well be as an assistive technology for people with different abilities, once that technology can be trusted to carry out specific tasks for people who need extra help. But in its present iteration, AI Overviews just doesn’t belong in a medical context.

What should happen with AI Overviews next?

It’s one thing if Google wants to continue experimenting with AI on non-life-altering topics. They can summarize soap opera episodes and work on getting it straight about presidents posthumously attending college for the next decade, and no one will be the worse for it, really. But health and safety are in their own category.

So these are my asks…

To the SEO community: please keep documenting the misinformation you are encountering via Google’s AI Overviews. While we can’t reach every vulnerable person, if it becomes a cultural norm to deride artificial intelligence as dangerously inferior to the natural intelligence that brought us such figures as Leonardo da Vinci, Albert Einstein, and Dr. Martin Luther King, Jr., perhaps we can turn AI from monster into meme, so that few people will think of it as a cool source of YMYL (Your Money or Your Life) advice.

To Google: please turn off AI Overviews for medical queries. Vulnerable people view your results as a source of truth and could be hurt by following medical misinformation displayed so prominently in your results, regardless of any warnings you attach about experimentation. I think this is particularly critical in the United States, which lacks the universal healthcare enjoyed by more humanitarian countries. People here who cannot afford to see a doctor are doubtless turning to Google when trying to diagnose and treat symptoms. While that’s not an ethical crisis you created, it is one you will worsen by promoting dangerous medical advice. If people are confused enough to believe that a TV show is real, they might well not know how to distinguish between the right and wrong steps to take when bitten by a snake, especially if Google is championing wrong answers.

As with the Four Horsemen of the Apocalypse, there seems to be some confusion as to whether the phrase “with great power comes great responsibility” comes from comic books, the Bible, or someplace else, but it’s a good axiom for today.

Nearly 20 years ago, I wrote an article questioning whether Google should include hospitals in their nascent local search results, because those results were so prominent on the page and because, in the midst of a medical emergency, I had discovered that the information they contained was wrong. I’m singing the same tune now, asking Google to put people over profit out of a sense of their own great power and responsibility. What’s changing is that I’m finding myself in a growing chorus instead of singing a little solo. Let’s hope someone is listening!