Why Site Speed Optimisation Should Be Part of Your SEO Strategy

This YouMoz entry was submitted by one of our community members. The author’s views are entirely their own (excluding an unlikely case of hypnosis) and may not reflect the views of Moz.

In late 2010, Google publicly announced that site speed was a new signal introduced into their organic search-ranking algorithm. Despite the announcement, site speed has not become an integral part of the SEO agenda and is still given a much lower priority than it deserves. Certainly, out-of-the-box thinking in 2013 cannot be just about great content and natural links.

Improving a site’s page loading time can be a rather laborious task; it requires thoughtful planning, significant resources, benchmarking, flawless execution, thorough testing and evaluation. Particularly for enterprise sites, planning and testing can take several months and require collaboration between different teams. In many cases, the work calls for a great deal of technical skill, so it falls on web developers, system administrators, network specialists and other technically minded professionals. But does all that signify that page speed optimisation is a waste of time?

Why Page Speed Is Important For SEO

With the direction SEO has taken since Google released the Panda and Penguin updates, scalable link building is becoming increasingly costly and unsustainable. At the same time there has been a great shift to content marketing; however, the point should not be whether producing link-worthy content is the best SEO strategy moving forward.

Even though I find most of Matt Cutts’s comments a bit flamboyant, one thing I 100% agree with is that digital marketers should think of SEO as being Search Experience Optimisation. That means that SEOs should no longer be optimising with bots in mind, but users. Offering the best possible user experience is what search engines are interested in, value and reward.

SEOs have an incredibly versatile skill-set to draw from when helping online businesses increase their revenues. They understand the way search engines crawl, index and rank content, and how users behave when searching for or visiting a site. They can carry out correlation studies, prioritise keywords that convert better, devise and implement content strategies and detect issues in the source code that hinder spiderability. Last but not least, they can (or should) optimise websites for speed.

Site speed optimisation has traditionally been led by web developers and CRO experts, most of whom have little interest in, knowledge of or experience with search engine spiders’ behaviour. In addition to the obvious fact that enhancing user experience increases engagement, fast loading pages can also have some unique and essential SEO benefits.

How Page Speed Affects Indexation, Traffic & Rankings

In general, slow loading pages suffer from low user engagement (increased bounce rates, low average time on page). If it is true that Google takes user behaviour data into account to influence search rankings, it can be argued that users bouncing off a slow loading site could potentially decrease its rankings.

Slow loading pages can also have a direct negative impact on indexation. When search engines cannot crawl a site for a few days or weeks, rankings associated with the keywords of the inaccessible pages will start suffering, sooner or later.

Conversely, fast loading pages can improve indexation as Googlebot spends its time more efficiently when crawling a fast-loading site. Because Googlebot allocates each website a specific crawl budget (depending on various factors such as authority and trust), being able to download and crawl pages faster means that it will ultimately download and (in most cases) index a higher number of pages.

For large websites, indexation is key to success in Google’s organic search. Ecommerce websites with thousands of products and often millions of (duplicate) pages struggle to gain visibility for all their products. News websites that manage to get their latest articles indexed fast usually capture the top rankings and see their traffic levels soar.

Crawl budget optimisation is about:

- Increasing the number of pages Googlebot crawls when visiting a site

- Decreasing the size of each page Googlebot visits and downloads

Both of these parameters can be heavily influenced by site speed optimisation. The idea behind the first is that if pages load faster, Googlebot will crawl (and hopefully index) more pages in each visit. The second follows the same logic: smaller pages download faster, so Googlebot can visit and download more of them before using up its entire dedicated crawl budget and leaving the site.
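The relationship between download time and pages crawled can be illustrated with a little arithmetic. The sketch below assumes, purely for illustration, that a crawl budget behaves like a fixed amount of download time per visit; Google does not publish how the budget is actually calculated, and `pagesPerVisit` and its parameters are hypothetical names.

```javascript
// Hypothetical model: the crawl budget is treated as a fixed number of
// seconds Googlebot is willing to spend downloading pages in one visit.
// This is an illustrative assumption, not Google's documented behaviour.
function pagesPerVisit(budgetSeconds, avgDownloadSeconds) {
  if (budgetSeconds < 0 || avgDownloadSeconds <= 0) {
    throw new RangeError('budget must be >= 0 and download time must be > 0');
  }
  return Math.floor(budgetSeconds / avgDownloadSeconds);
}

// Same made-up budget, before and after halving the average download time.
const slowSitePages = pagesPerVisit(600, 2.0); // 300 pages
const fastSitePages = pagesPerVisit(600, 1.0); // 600 pages
console.log(slowSitePages, fastSitePages);
```

Under this simplified model, halving the average download time doubles the number of pages fetched per visit, which is the intuition behind both bullet points.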

The bottom line is that getting more pages in Google’s index has the potential to increase traffic. Having more pages indexed means that there will be more keywords with ranking potential. On large, authoritative sites, increasing the number of indexed pages alone is enough to bring more long tail keywords straight onto the first page of Google’s SERPs, and because the tail on these sites consists of thousands of keywords, this is a big win.

Looking into this from a different perspective, increasing indexation on enterprise websites can be more beneficial than any link building campaign in terms of traffic and revenue gains. Page speed optimisation is scalable and improving the page loading times of the most common types of pages (e.g. homepage, category page, product page) will almost certainly benefit the entire site.

Slow Pages Kill Conversions

Several case studies have demonstrated that fast loading sites can significantly increase conversions and boost sales. In 2012, Walmart announced that conversions peak at around 2 seconds and then progressively drop as page loading time increases. In the following graph, notice how dramatically the conversion rate drops when page loading time increases from 0-1 to 3-4 seconds.

Source: Walmart

Walmart’s findings were confirmed by another study that was published by Tagman just a few months later. Notice the similar trends in conversions between the graphs above and below:

Source: Tagman

These two studies also agree with Akamai’s survey that found that 47% of online users were expecting pages on retail sites to load in 2 seconds or less back in 2009. In 2012, the percentage of tablet users expecting a website to load in 2 seconds or less reached 70%.

Many other organisations including Google, Yahoo, Facebook and Amazon have carried out their own site performance tests, all with very interesting findings. These have been included in the more in-depth white paper ‘How Site Performance Optimisation Can Increase Revenue on Desktop and Mobile Sites’, which can be downloaded directly from the iCrossing UK site.

Site Performance on Mobile Devices Matters Even More

According to a report published by The Search Agency, Google saw 25.9% of total paid clicks coming from mobile devices in Q4 2012. What is even more important is that the mobile phone industry is still growing and mobile search share is expected to keep rising. Compared to desktops, page loading times on mobile devices are higher due to latency, lower processing power, smaller memory and limited battery life.

In 2012 the size of the average web page exceeded 1MB. Our Research & Insight specialist Gregory Lyons estimated that by the beginning of 2016 the average web page size will have doubled, exceeding 2MB. This means that site performance on mobile devices needs to be addressed separately, otherwise trying to download such big files on smartphone or tablet devices will increase latency further. With more and more websites adopting Google's recommended responsive design approach, page loading times of content-heavy pages on mobile devices could increase to the point that users would just give up waiting and try a different SERP. Given that Google has acknowledged site speed as a ranking signal, it wouldn't be surprising if, over time, it gets even more ranking weight in mobile SERPs. At the end of the day it is in Google's best interest to prioritise signals that make for a better user experience.

How to Identify Site Performance Optimisation Opportunities

Several tools and services can help with simulating site performance or monitoring it based on the actual visitors of a site. The former are known as Synthetic Measurement services and the latter as Real User Measurement (RUM) services.

Synthetic Measurement Services

These services are often free and available to anyone, making them ideal for measuring site speed on your own as well as competitors’ sites.

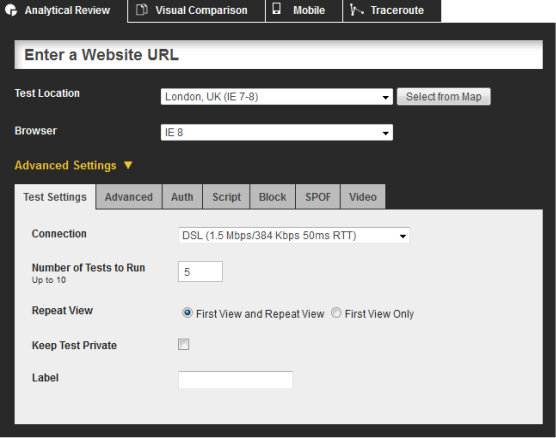

WebPageTest.org is the most widely used service, and with good reason. It allows for multiple URL-specific tests from:

- Multiple geographic locations (ideally this choice should be made based on your users’ most common locations)

- Different browsers

- Different connection types

- Mobile devices

- JavaScript enabled/disabled clients

The better the above parameters match the characteristics of the most common visitors, the closer you can get to what they experience when visiting your site.

Once the test is completed you get a summary table like the one below:

- First view loading times include all measurements for first-time visitors (no cookies/no caching)

- Repeat view loading times include measurements for visitors who have been previously on the same page (with cookies and caching enabled)

In order to assess which view is more important to speed up, you need to take into consideration the new vs returning visitors split from analytics.
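One simple way to combine the two views is to weight them by the share of new visitors. The following is a minimal sketch; the function name and the sample figures are made up for illustration and do not come from WebPageTest or any analytics package.

```javascript
// Blend first-view and repeat-view load times using the share of new
// visitors reported by analytics (a fraction between 0 and 1).
function blendedLoadTime(firstViewSec, repeatViewSec, newVisitorShare) {
  if (newVisitorShare < 0 || newVisitorShare > 1) {
    throw new RangeError('newVisitorShare must be between 0 and 1');
  }
  return newVisitorShare * firstViewSec +
         (1 - newVisitorShare) * repeatViewSec;
}

// Hypothetical numbers: 4.0 s first view, 1.5 s repeat view, 70% new visitors.
console.log(blendedLoadTime(4.0, 1.5, 0.7).toFixed(2)); // "3.25"
```

With mostly new visitors the blended figure sits close to the first-view time, which suggests prioritising first-view improvements; a site dominated by returning visitors would point the other way.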

The ultimate objective is to reduce the time, number of requests and filesize so the page loads faster. Although getting into the details of the various ways to increase site performance is outside the scope of this post, there is a dedicated section in the aforementioned white paper (pages 9-11).

WebPageTest.org even makes specific recommendations and scores a site’s performance, so even SEOs who are not technically savvy can easily identify the main weaknesses of a website and start a conversation with the web developers.

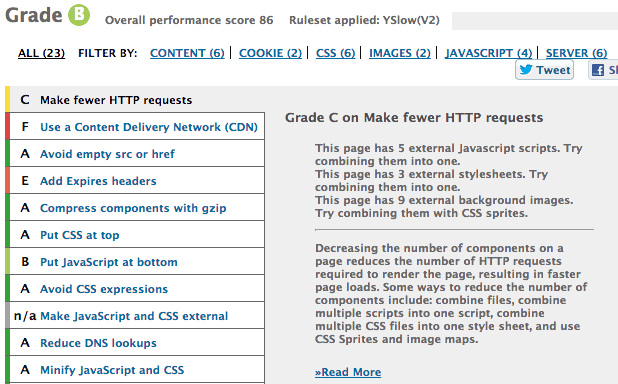

Google’s PageSpeed Insights and Yahoo’s YSlow (both available for Chrome and Firefox) can also be useful if you need to run a quick site performance test on a specific page and figure out what may be missing. However, they do not go into as much detail as WebPageTest.org does.

YSlow performance score and recommendations

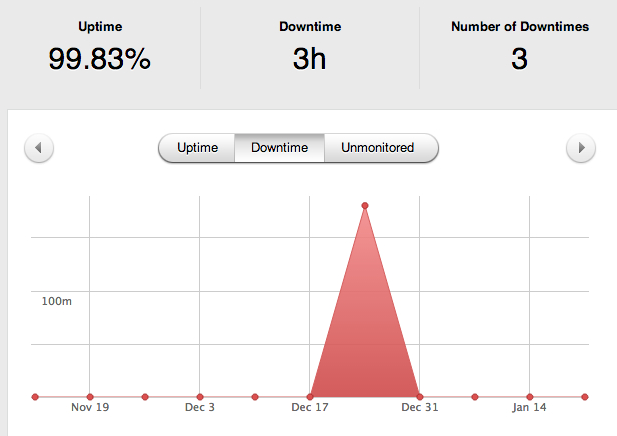

Pingdom Tools is another popular synthetic measurement service, which runs regular checks on a list of given websites and monitors uptime and downtime. When outages do occur, the service sends email and SMS notifications.

Pingdom Tools monitored website downtime

Real User Monitoring Services

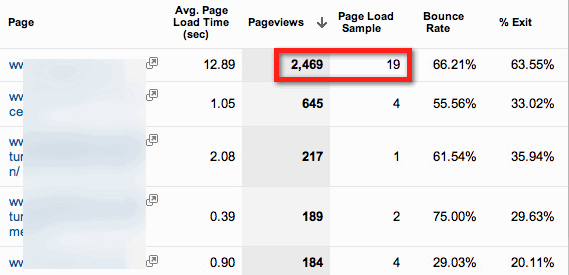

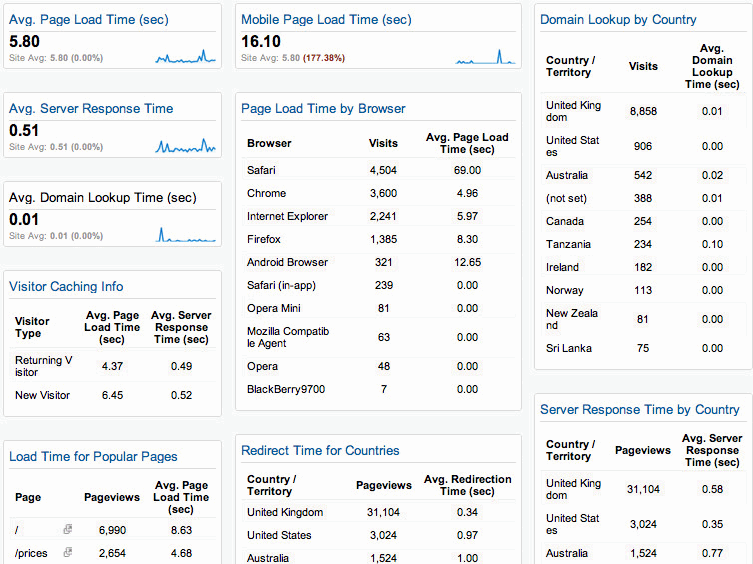

RUM services offer site speed measurements based on the page loading times experienced by a site's actual visitors. These are tracked by adding some proprietary JavaScript code to every single page of a site, hence measurements are not possible for competitors' sites. There are several (free and paid) RUM services available, with the most common one being Google Analytics. Undoubtedly, combining site speed with business metrics such as pageviews, entrances, bounce and exit rates can provide some unique insights, which is the biggest strength of Google Analytics' site speed service.

However, the Google Analytics site speed feature comes with a few flaws, the main ones being the following:

- The Navigation Timing API, which Google Analytics relies on to collect its site performance data, is not supported by all browser vendors. Some desktop browsers (e.g. Safari) as well as browsers for popular mobile devices (e.g. iOS) do not support the API, which is not ideal given the increasing popularity of mobile devices. The API seems to currently support around 65% of global users.

- By default, sampled page timings come from 1% of the site’s visitors. For high traffic sites the maximum amount of sampled data would be 10K per day. However, for websites with fewer than 100K visits per day the default sample size may not be ideal.

For instance, according to the sampled data below, the homepage of a site with low traffic levels seems to take 12.89 seconds to load. Because the page load sample comes from 19 visits out of 2469 pageviews, the data accuracy is questionable.
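Both limitations can be made concrete with a short sketch. The first function reads the load time from a Navigation Timing `timing` object and returns `null` where the API is missing, which is why unsupported browsers contribute no data. The second computes the effective sample rate from the 19 samples and 2469 pageviews quoted above. The function names and the stand-in timing values are illustrative assumptions, not part of Google Analytics.

```javascript
// Returns the page load time in milliseconds from a Navigation Timing
// `timing` object, or null when the API is unavailable or the load
// event has not finished yet (loadEventEnd is 0 until then).
function pageLoadTimeMs(timing) {
  if (!timing || !timing.navigationStart || !timing.loadEventEnd) {
    return null;
  }
  return timing.loadEventEnd - timing.navigationStart;
}

// Share of pageviews (in percent) that actually produced a timing sample.
function effectiveSampleRatePercent(sampledPageLoads, pageviews) {
  if (pageviews <= 0) {
    throw new RangeError('pageviews must be greater than 0');
  }
  return (sampledPageLoads / pageviews) * 100;
}

// In a supporting browser, call this after the load event has fired:
//   pageLoadTimeMs(window.performance && window.performance.timing);
// Stand-in values (hypothetical) so the sketch also runs outside a browser.
console.log(pageLoadTimeMs({ navigationStart: 1000, loadEventEnd: 3500 })); // 2500
console.log(pageLoadTimeMs(undefined)); // null

// Figures from the example above: 19 samples out of 2469 pageviews.
console.log(effectiveSampleRatePercent(19, 2469).toFixed(2) + '%'); // "0.77%"
```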

Improving Google Analytics Site Speed Sampled Data

The good news is that it is possible to increase the site speed sample rate by passing a higher percentage value to the _setSiteSpeedSampleRate() method. For instance, to increase the site speed sample rate from the default 1% to 50% the modified async code would be:

_gaq.push(['_setSiteSpeedSampleRate', 50]);

_gaq.push(['_trackPageview']);
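For context, here is a slightly fuller sketch of how those two lines might sit in the asynchronous ga.js queue, together with a rough estimate of how many samples a given rate would yield. The `buildGaCommands` and `expectedDailySamples` helpers are hypothetical, `'UA-XXXXX-X'` is a placeholder account ID, and the 10K cap simply reuses the daily maximum mentioned earlier.

```javascript
// Builds the ga.js async commands with a custom site speed sample rate.
// Hypothetical helper; 'UA-XXXXX-X' below is a placeholder account ID.
function buildGaCommands(accountId, sampleRatePercent) {
  if (sampleRatePercent < 0 || sampleRatePercent > 100) {
    throw new RangeError('sample rate must be between 0 and 100');
  }
  return [
    ['_setAccount', accountId],
    // Queued before _trackPageview so the rate applies to that pageview.
    ['_setSiteSpeedSampleRate', sampleRatePercent],
    ['_trackPageview']
  ];
}

var _gaq = _gaq || [];
buildGaCommands('UA-XXXXX-X', 50).forEach(function (command) {
  _gaq.push(command);
});

// Rough number of timing samples per day at a given rate, capped at the
// 10K daily maximum mentioned in the text.
function expectedDailySamples(pageviews, sampleRatePercent) {
  return Math.min(Math.floor(pageviews * sampleRatePercent / 100), 10000);
}

console.log(expectedDailySamples(2469, 50)); // 1234
```

For the low-traffic example above, moving from the default rate to 50% would take the sample from a handful of page loads to over a thousand, which makes the averages far more trustworthy.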

There is a very useful and quick to install Site Performance dashboard for Google Analytics that provides a quick overview of the most useful page speed metrics.

For more accurate results, there are several popular third-party RUM services, although they are not free. These services can offer real time site speed data with a lot more granularity on the actual visitors’ page loading times.

Page Loading Times Histogram (Torbit Insight)

Drill Down Interactive Graph (Torbit Insight)

Conclusion

Web Performance Optimisation or WPO (both terms coined by Google's Head Performance Engineer Steve Souders) takes time and requires thorough planning and resources. However, as several case studies have shown, faster loading pages strongly correlate with higher revenue. Because the ultimate goal in every SEO campaign is to maximise revenue, web performance optimisation does not fall out of scope.

As explained earlier, fast loading pages can increase traffic, pageviews and conversions as well as make users happy. Website owners who do not invest in site performance optimisation, or do not treat it as an integral part of their overall digital strategy, run the risk of missing out on a big revenue opportunity. A fast loading site will help not only SEO traffic but also traffic from all other channels (direct, PPC and referral). If you are still unconvinced, try Tagman's revenue loss calculator, which can help forecast the estimated loss in revenue when page loading time increases from 1 to 3 seconds.

Revenue Gain Estimator (Credit: Tagman)
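The kind of estimate such a calculator produces can be sketched in a few lines. This is not Tagman's formula: it assumes a simple linear model in which each extra second of loading time costs a fixed share of revenue, and the 5% per second used below is a made-up input that you would replace with a figure measured on your own site.

```javascript
// Hypothetical linear model: every extra second of load time loses a
// fixed share of revenue. The loss rate is an input, not a known constant.
function estimateRevenueLoss(revenue, extraSeconds, lossPerSecond) {
  if (revenue < 0 || extraSeconds < 0 || lossPerSecond < 0) {
    throw new RangeError('inputs must not be negative');
  }
  // The lost share can never exceed 100% of revenue.
  const lostShare = Math.min(1, extraSeconds * lossPerSecond);
  return revenue * lostShare;
}

// Made-up example: 10,000 in monthly revenue, load time going from 1 to 3
// seconds (2 extra seconds), assumed loss of 5% per extra second.
console.log(estimateRevenueLoss(10000, 3 - 1, 0.05).toFixed(0)); // "1000"
```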

Note: For more facts, statistics and case studies as well as tips on the 10 most common areas to pay attention to when optimising for speed, please refer to our ‘Slow Pages Lose Customers’ POV.

Update: Useful WordPress Plugins To Improve Site Performance

Due to popular demand I’ve put together a list of plugins that can help improve site performance and reduce page loading times on WordPress sites. You should definitely read the available documentation as well as thoroughly test each one of the plugins you’re planning to use to avoid conflicts with other plugins.

- Cache - W3 Total Cache is highly recommended, although WP Super Cache and Quick Cache can also improve caching and therefore reduce page loading times.

- Compress - WP HTTP Compression allows outputting your site’s pages compressed in gzip format if a browser supports compression.

- Minimise - WP Minify helps compress CSS and JS files to improve page loading time.

- Reduce HTTP requests - JS and CSS Optimiser is a great plugin to reduce the number of HTTP requests. It combines all JavaScript or CSS code in just one file, where possible, which can significantly improve performance. SpriteMe.org offers a bookmarklet that helps group images into CSS sprites so the number of HTTP requests is reduced further.

- Reduce image sizes - WP-Smush.it uses the Smush.it API to reduce image file sizes and improve performance each time you add a new page or post. Other plugins to reduce file sizes include EWWW Image Optimizer and CW Image Optimizer.

- Optimise the database - WP-DB Manager can optimise the database, which in large sites can speed things up a bit.

- Check plugin performance - P3 Performance Profiler monitors all plugins and creates a performance report. Plugins that slow page loading times should either be removed or replaced.

- Change order of plugins - Plugin Organizer can change the order plugins are loaded so the slower ones can be loaded last or even be disabled on specific pages to save on loading time.

- Parallelise file downloads - WP Parallel Loading System parallelises HTTP connections and optimises images to save loading time.

- Use a CDN - There are quite a few different options when it comes to choosing a Content Delivery Network, some free and some paid.

- jsDelivr.com is a free public Content Delivery Network (CDN) that hosts JavaScript libraries and jQuery plugins. The jsDelivr plugin makes it easy to integrate and use the services of jsDelivr.com on any WordPress site.

- WP Booster is another CDN plugin solution for WordPress sites which delivers CSS, JavaScript and image files from CDNs in different parts of the world.

- Richard Baxter has written a step-by-step guide on how to use MaxCDN with the W3 Total Cache plugin.
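To get a feel for what the gzip compression mentioned in the list can save, the following sketch uses the built-in `zlib` module of Node.js rather than any of the plugins above. The sample markup and the `gzipSavings` name are made up for illustration, and real pages will compress less dramatically than this highly repetitive example.

```javascript
const zlib = require('zlib');

// Returns the original and gzip-compressed sizes (in bytes) of a text
// payload, plus the compressed/original ratio.
function gzipSavings(text) {
  const original = Buffer.byteLength(text, 'utf8');
  const compressed = zlib.gzipSync(text).length;
  return { original: original, compressed: compressed, ratio: compressed / original };
}

// Hypothetical, highly repetitive markup standing in for a category page.
const sampleHtml = '<li class="product">Example product</li>\n'.repeat(200);
const savings = gzipSavings(sampleHtml);

console.log('original bytes:', savings.original);
console.log('gzipped bytes:', savings.compressed);
```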
