AI-powered keyword organization eliminates guesswork and boosts visibility

Quickly find traffic-driving keywords and content opportunities with the new Keyword Suggestions by Topic feature in Keyword Explorer. This new time-saving feature helps simplify your keyword research so you spend less time sorting data and more time producing great content.

Join us for our upcoming webinar from the SEO Launchpad series

Building an SEO command center puts you in control of your strategy, your progress, and your wins. Learn how to set up a Campaign, track real progress over time, and use your data to make confident, strategic decisions.

Our Latest Updates

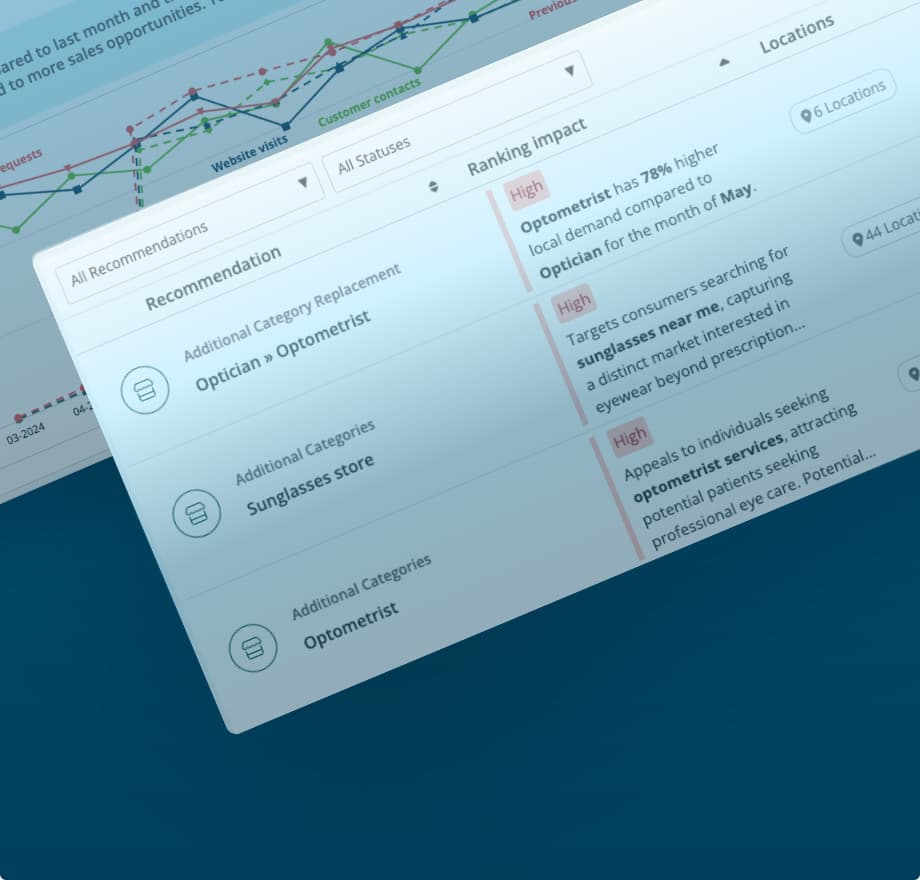

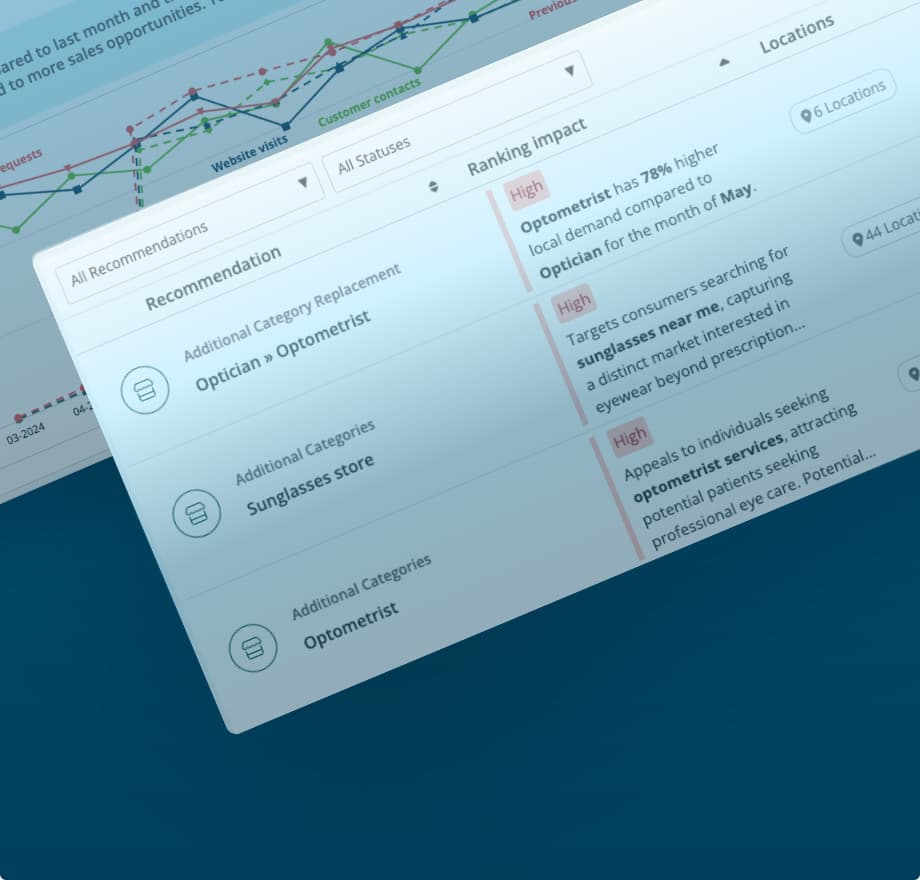

Increase visibility and stay competitive with Moz Local’s Listings AI feature

Listings AI in Moz Local streamlines competitive research and helps you stay ahead with data-driven profile update recommendations. Make sure potential customers can find you with up-to-date suggestions based on your industry and local business landscape.

Simplify your keyword research with AI-powered keyword suggestions by topic

Keyword Suggestions by Topic in Keyword Explorer helps you cut research time, discover smarter topics, and create content that ranks. This AI-powered feature filters out the noise and eliminates manual sorting so you can spend more time creating great content.

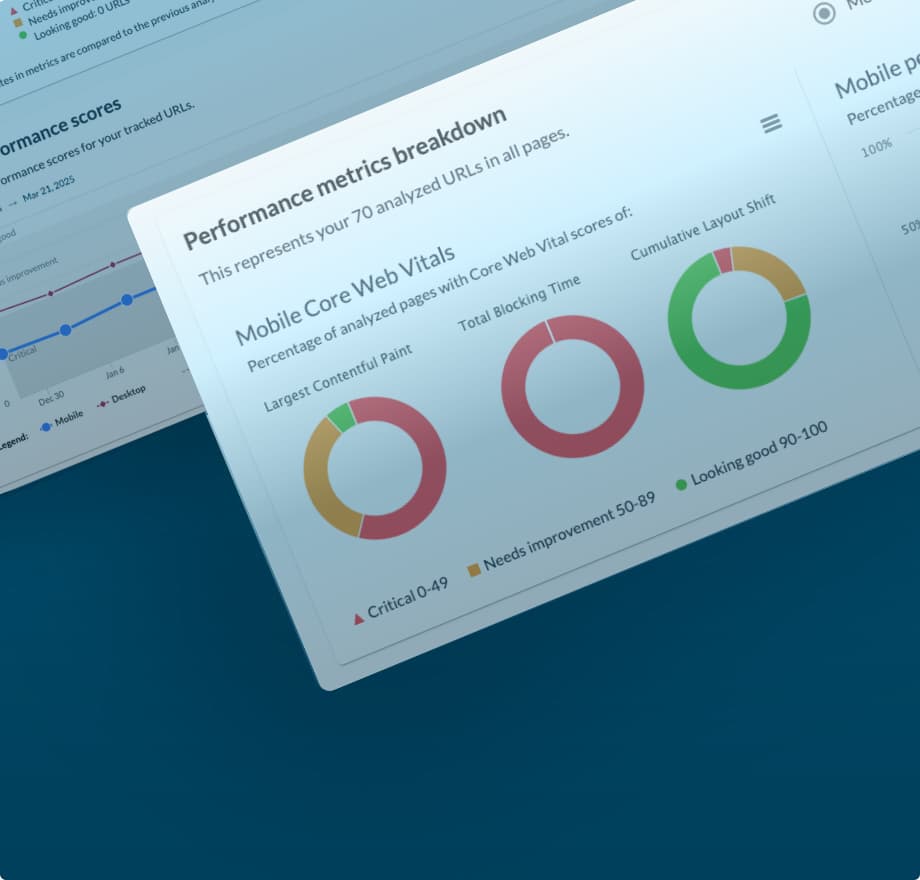

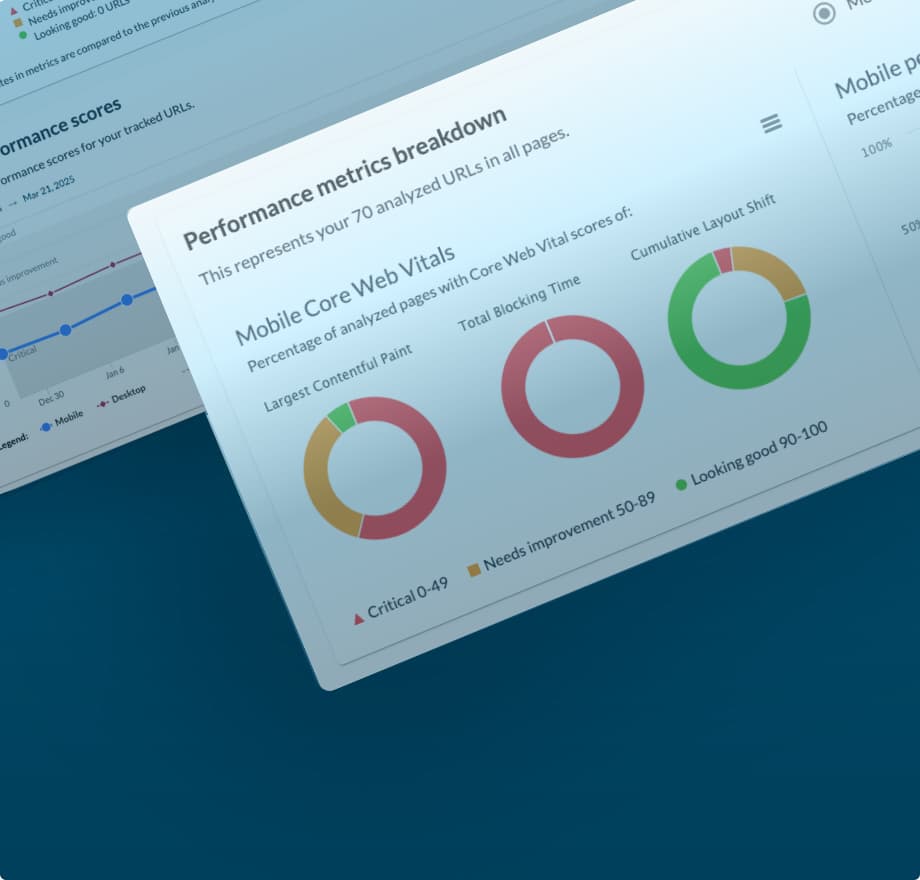

Performance Metrics updated to provide improved accuracy and better insights

We’re happy to announce that we’ve updated our system to provide you with more accurate and reliable performance scores in Performance Metrics in Moz Pro Campaigns. This improvement allows us to show you results that better reflect real-world performance.

Identify ranking opportunities and gain a competitive advantage with Keyword Gap

We’re excited to announce the release of Keyword Gap 2.0 into beta! This update offers improved functionality and all-new features to help you gain a competitive advantage.

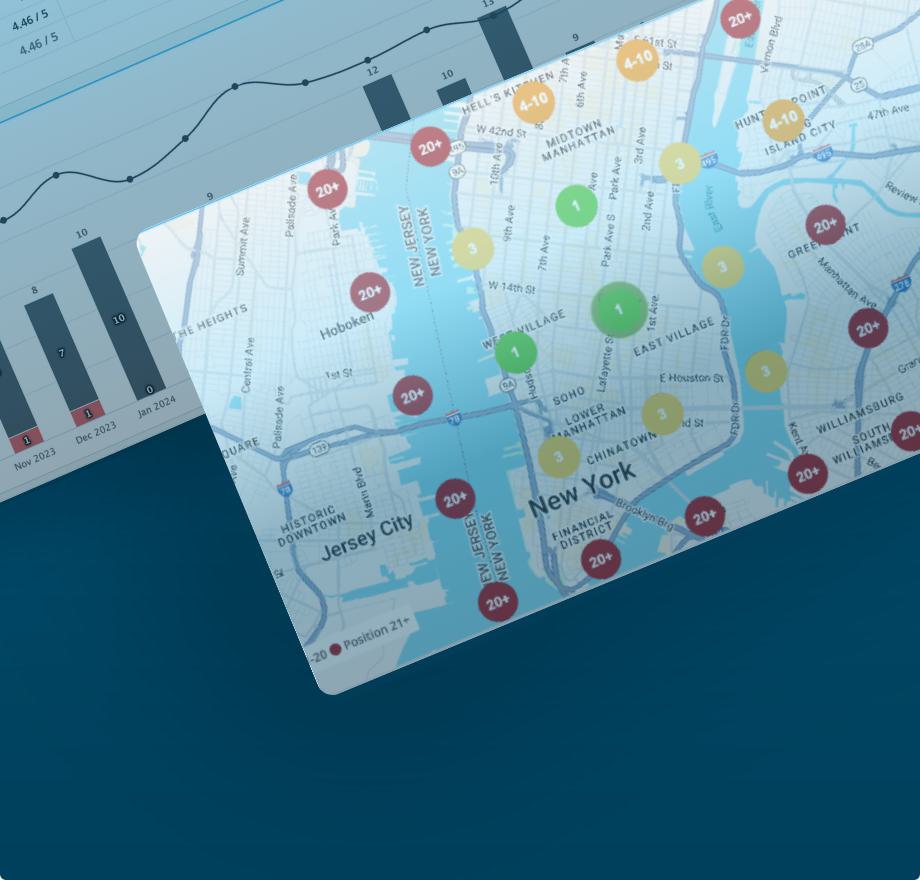

Announcing the new Moz Local – available now

We are excited to announce that the new Moz Local is here! This update to our existing local SEO tool provides an all-new, fully renovated in-app experience for our customers along with new features, AI integration, and more.

Brand Authority updated to better assist with competitive positioning

We’re happy to announce that our data set for this helpful metric has been expanded to include the UK, CA, and AU. This update allows for a more holistic view of brand strength across key English-speaking markets and improves the accuracy of this metric for non-US brands.

Ready to discover the Moz tools?

With a new user experience, enhanced UI, and the introduction of Moz AI, it’s now even easier to keep an eye on your competition and dominate the SERPs. Give it a try with a free month of Moz Pro, on us.

![How To Launch, Grow, and Scale a Community That Supports Your Brand [MozCon 2025 Speaker Series]](https://moz.rankious.com/_moz/images/blog/banners/Mozcon2025_SpeakerBlogHeader_1180x400_Areej-abuali_London.png?w=1180&h=400&auto=compress%2Cformat&fit=crop&dm=1747732165&s=4d44cc57ccab5d0de24c776c5dab204a)

![Clicks Don’t Pay the Bills: Use This Audit Framework To Prove Content Revenue [MozCon 2025 Speaker Series]](https://moz.rankious.com/_moz/images/blog/banners/Mozcon2025_SpeakerBlogHeader_1180x400_Hellen_London.png?w=1180&h=400&auto=compress%2Cformat&fit=crop&dm=1747758249&s=cad8e6fa671b7e67387c1e7db60823b7)