Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies. More details here.

Category: Technical SEO

Discuss site health, structure, and other technical SEO strategies.

-

Is there a reason why a host would be reluctant to give up Cpanel access info?

Granted, a strange question here... My client lost her cpanel login credentials, or never bothered to get them (she didn't even know she had a hosting account). Apparently she has a friend who is hosting her website for her, free of charge. I need to get into the cpanel, but they are being extremely difficult. The client asked them and they didn't want to give it to her either. Still trying, but is there any reason why they would be so difficult? How does it benefit them? It can't be because they're afraid of losing her account because she isn't paying them anything. Totally confused by this. Any ideas?

| Masbro1 -

How to stop google from indexing specific sections of a page?

I'm trying to find a way to stop Googlebot from indexing specific areas of a page. Long ago Yahoo Search introduced the attribute class="robots-nocontent", and I'd like to know whether Google offers something similar or has adopted the same attribute. Any help would be much appreciated.

| Iamfaramon0 -

Google Cache showing a different URL

Hi all, very weird things are happening to us. For the 3 URLs below, Google cache is rendering content from a different URL (sister site) even though there are no redirects between the 2 and the live page shows the 'right content':

http://webcache.googleusercontent.com/search?q=cache:http://giltedgeafrica.com/tours/

http://webcache.googleusercontent.com/search?q=cache:http://giltedgeafrica.com/about/

http://webcache.googleusercontent.com/search?q=cache:http://giltedgeafrica.com/about/team/

We also have the exact same issue with another domain we owned (but no longer do); the only difference is that we 301 redirected those URLs before it changed ownership:

http://webcache.googleusercontent.com/search?q=cache:http://www.preferredsafaris.com/Kenya/2

http://webcache.googleusercontent.com/search?q=cache:http://www.preferredsafaris.com/accommodation/Namibia/5

I have gone ahead into the URL removal Tool and got denied for the first case above ("") and it is still pending for the second list. We are worried that this might be a sign of duplicate content and could be penalising us. Thanks!

PS: I went through most questions and the closest one I found was this one (http://moz.com/community/q/page-disappeared-from-google-index-google-cache-shows-page-is-being-redirected), but it didn't provide a clear answer to my question above.

| SouthernAfricaTravel0 -

Does Title Tag location in a page's source code matter?

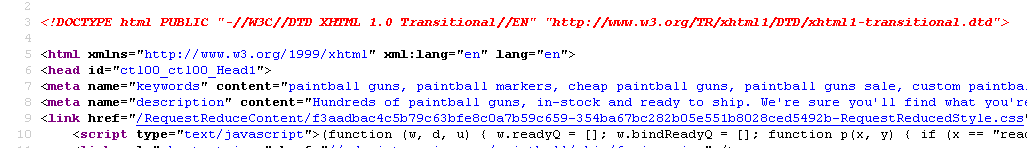

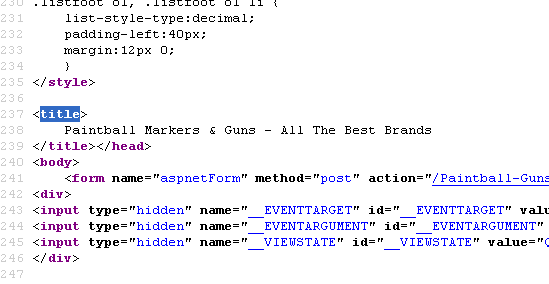

Currently our meta description is on line 8 for our page - http://www.paintball-online.com/Paintball-Guns-And-Markers-0Y.aspx

The title tag, however, sits below a bunch of code on line 237.

Does the location of the title tag, meta tags, and any structured data have any influence with respect to SEO and search engines? Put another way, could we benefit from moving the title tag up to the top?

I "surfed 'n surfed" and could not find any articles about this.

I would really appreciate any help on this as our site got decimated organically last May and we are looking for any help with SEO.

Nick

| Istoresinc0 -

Why are these internal pages not showing any internal links?

If you look at Author profile pages like this one, http://experts.allbusiness.com/author/denise-oberry (THE top contributor on the site with over 82 posts under her belt), or any Author profile page, they show zero internal links or Page Authority. The same goes for most posts for each author on the site. Author pages should show internal links from every post the author has on the site. And specific posts should also have internal links from categories, etc. Yet they show zero. The only posts that show internal links and PA are ones that were either syndicated to the root domain's homepage, or syndicated to Fox Small Business. ZERO internal links. Does anyone know why this is? The root domain does not act this way with Author pages and posts. And I see nothing blocking links or indexing via the robots.txt file or page level nofollow tags. A real head scratcher for this SEO nerd, that I'm sure someone here will have a really simple answer to.

| MiguelSalcido0 -

Why is Google replacing our title tags with URLs in SERP?

Hey guys, We've noticed that Google is replacing a lot of our title tags with URLs in SERP. As far as we know, this has been happening for the last month or so and we can't seem to figure out why. I've attached a screenshot for your reference.

What we know:

- Depending on the search query, the title tag may or may not be replaced.
- This doesn't seem to have any connection to the relevance of the title tag vs the URL.
- Results are persistent on desktop and mobile.
- The length of the title tag doesn't seem to correlate with the replacement.
- The replacement is happening en masse, to dozens of pages.

Any ideas as to why this may be happening? Thanks in advance,

Peter

mobify-site-www.mobify.com---Google-Search.png

| Mobify0 -

Last Part Breadcrumb Trail Active or Non-Active

Breadcrumbs have been debated quite a bit in the past. Some claim that the last part of the breadcrumb trail should be non-active to inform users they have reached the end. In other words, do not link the current page to itself. On the other hand, that portion of the breadcrumb then won't be displayed in the SERPs, and if it were, it might lead to a higher CTR. For example: for www.website.com/fans/panasonic-modelnumber, panasonic-modelnumber would not be active as part of the breadcrumb. What is your take?

| CallMeNicholi0 -

Sudden drop in Rankings after 301 redirect

Greetings to the Moz Community. A couple of months back, I redirected my old blog to a new URL with a 301 redirect because of spammy links pointing to my old blog. I transferred all the posts manually, changed the permalink structure and 301 redirected every individual URL. All the rankings were boosted within a couple of weeks and the traffic was regained. After a month, I observed that the links pointing to the old site were showing up in Webmaster Tools for the new domain. I was shocked (no previous experience) and disavowed all the links again. Today, all the positions went down for the new domain. My questions are: 1. Did the Disavow tool work this time with the new domain? Were all the links pointing to the old domain devalued? Is this the reason for the ranking drop? 2. Or is the 301 from the old domain with unnatural links causing the issue? 3. Will removing the 301 help to regain a few keyword positions? I'm taking this as a case study. I have already removed the 301 redirect. Looking for a solid discussion. Thanks.

| praveen4390 -

Fake Links indexing in google

Hello everyone, I have an interesting situation occurring here, and I'm hoping someone has seen something of this nature or can offer some advice. We recently installed WordPress on a subdomain for our business and have been blogging through it. We added the Google Webmaster Tools meta tag and I've noticed an increase in 404 links. I brought this up to our server admin, and he verified that there were a lot of IPs pinging our server looking for these links that don't exist. We've combed through our server files and nothing seems to be compromised. Today, we noticed that when you search site:ourdomain.com in Google, the subdomain with WordPress shows hundreds of these fake links that return a 404 page when you visit them. Just curious if anyone has seen anything like this: what might it be, how can we stop it, and could it negatively impact us in any way? Should we even worry about it? Here's the link to the Google results. https://www.google.com/search?q=site%3Amshowells.com&oq=site%3A&aqs=chrome.0.69i59j69i57j69i58.1905j0j1&sourceid=chrome&es_sm=91&ie=UTF-8 (odd links show up on pages 2-3+)

| mshowells0 -

Do quizzes hurt your site? Thin content?

We did a 10 question quiz awhile back relating to something we were sponsoring, and it had a decent response. However, considering quizzes just aren't that long, does that contribute to making the site's content thin? Obviously, it's not a major problem at the moment, but if we did more of them would this be an issue? If there's no real issue, I'd prefer not to no-index them, but I'd love some feedback to help make the decision. Thanks, Ruben

| KempRugeLawGroup0 -

Flat vs Hierarchical URL Structure

Hi, We are redoing our site structure and I was wondering what the benefits of a flat URL structure are. For example, store.com/product instead of store.com/category/product. I noticed sites doing it both ways; even moz.com has both structures, e.g. moz.com/learn/seo, and when you click on something it brings you to moz.com/seo-expert-quiz (even though following the previous logic it should be moz.com/learn/seo/seo-expert-quiz). Please advise, Thanks!

| WSteven0 -

How to inform Google to remove 404 Pages of my website?

Hi, I want to remove more than 6,000 pages from my website because of bad keywords. I am going to drop all these pages and make them return 404. How can I inform Google that these pages no longer exist, so that it stops sending me traffic from those bad keywords? Also, can I use Google's disavow tool to exclude these 6,000 pages of my own website?

| renukishor4 -

Changing menus regularly - will this impact SEO

We are working on an internal project, where the website owner is thinking of making regular changes to one or two items on the top level menu. Assuming they redirect the original pages or navigate to them in other ways, is there any other impact on SEO to changing the menu structure? I assume they'd submit new sitemaps after each change. Many thanks Fiona

| fionah0 -

Schema markup for products is missing "price": Is this bad?

Hey guys, So a current client of mine has an e-commerce shop with a few hundred products. They purposely choose to keep the prices off of their website, which is causing errors in Google Webmaster Tools. Basically the error shows: Error: Structured Data > Product (markup: schema.org) Error type: missing price 208 items with error Is this a huge deal? Or are we allowed to have non-numerical prices for schema ie. "call for quote"

| tbinga1 -

SEO-impact of mouseover text on header pictures

Hi, what do you reckon of taking away the mouseover effect on the header pictures seen on www.viventura.de/reisen/peru?

We are thinking of eliminating the mouseover text to make User Experience even better but are worrying that our ranking might go down when doing so. Any experiences, any help is highly appreciated!

Thanks, Benno

| viventuraSEO0 -

Should we change the publish date in WordPress when updating a post?

Hi everyone, We're going through some of our old posts in our WordPress blog and updating them, adding new information, new links, and photos. My question: If we update the posts significantly, should we also update the "published" date to today? If we only correct some typos or a dead link, we don't touch the date. However, if we've done some real work on the post, we'd like to update the published date in order to bring it to the top of our blog feed and draw new attention to the post. However, I'm a little nervous that this could be seen by Google as spammy, as it's not technically a new post and the URL already exists in Google's index of our site. Here's an example of a post that was published several years ago and then updated a few weeks ago with new information (and a new date stamp): http://www.eurocheapo.com/blog/barcelona-tip-five-cheap-eats-under-e6.html Any thoughts on this? Thanks, Tom

| TomNYC0 -

Best strategy to handle over 100,000 404 errors.

I have recently been given a site that has over one hundred thousand 404 errors listed in Google Webmaster Tools. It is really odd because, according to Google Webmaster Tools, the pages that link to these 404 pages are also pages that no longer exist (they are 404 pages themselves). These errors were the result of a site migration. I'd appreciate any input on how one might go about auditing and repairing large numbers of 404 errors. Thank you.

| SEO_Promenade0 -
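For an audit of this size, a common first step is to group the 404 URLs by site section so that patterns left over from the migration stand out. A minimal sketch, assuming the error URLs have been exported into a plain list (the example.com URLs and the function names are hypothetical):

```python
from collections import Counter
from urllib.parse import urlsplit


def top_level_section(url):
    """Return the first path folder of a URL, or "/" for root-level pages."""
    segments = [s for s in urlsplit(url).path.split("/") if s]
    # A URL such as /blog/post-1 belongs to the /blog/ section;
    # a URL such as /about sits directly under the root.
    return "/" + segments[0] + "/" if len(segments) > 1 else "/"


def summarize_404s(urls):
    """Count 404 URLs per section, most affected section first."""
    return Counter(top_level_section(u) for u in urls).most_common()


if __name__ == "__main__":
    # Hypothetical sample of exported 404 URLs.
    sample = [
        "http://example.com/blog/old-post-a",
        "http://example.com/blog/old-post-b",
        "http://example.com/shop/discontinued-item",
        "http://example.com/about",
    ]
    for section, count in summarize_404s(sample):
        print(section, count)
```

Sections with the largest counts are usually the ones where a single redirect rule, rather than page-by-page fixes, is worth considering.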

Phone number in Meta Description - Is it a good idea?

Is it a best practice to place your company's phone number in the meta description for a page? Are there any rules as to what is acceptable for meta tags? One of our competitors recently started doing this, but for some reason I think it might be against Google's guidelines. They (the competitor) are also engaging in web spam, plagiarizing our content, and using other black hat techniques, so I'm leery of anything they do.

| mathamatix0 -

Target: blank. Does it make an SEO difference?

I've noticed many sites, Moz included, no longer use the target="_blank" attribute. I think that's what it's called: basically, when a link on your site opens a new tab in the browser as opposed to replacing the page you are on. Given that Moz thinks of everything, I would love to hear opinions on this.

| wearehappymedia0 -

Do H2 tags carry more weight than h4 tags?

Of course H tags are key signals for relevance in search. Does an h2 tag send a significantly "louder" signal than an h4 tag?

| aj6130 -

Links from PubMed (nlm.nih.gov) not appearing in backlinks for articles

Content from our medical journals gets indexed by the National Library of Medicine / PubMed on a monthly basis. The link to the full article appears in the upper-right corner on PubMed, yet I'm unable to find PubMed (nlm.nih.gov) backlinks in the reporting tools. Example:

Article Title: Respiratory Syncytial Virus Infection in Children (allintitle query)

Article URL: http://www.aafp.org/afp/2011/0115/p141.html

PubMed URL: http://www.ncbi.nlm.nih.gov/pubmed/21243988

The PubMed link is to http://www.aafp.org/link_out?pmid=21243988, a 301 redirect to the article, http://www.aafp.org/afp/2011/0115/p141.html

Any idea why this link isn't appearing in backlinks? This isn't just for one article; we have roughly 2,000 articles from 1998 to the present. Articles from the past 12 months are access-restricted, and after 12 months the articles become public.

| aafpitadmin0 -

Changing DNS -- SEO implications?

Hey Moz, We're migrating an old site on an old server over to a new server/DNS. The plan is to keep the same URL structure and reuse our existing URL's. As long as we make minimal changes to each page's content, we should be able to update our DNS entry and get all the pages recreated and assigned to their correct URLs without any reduction in SEO rankings. Is this correct? This site gets a lot of organic traffic and ranks highly on some challenging keywords, so it's key that we retain our rankings as much as possible. I've read that it's wise to lower the DNS time-to-live to one hour, about a day before the move, to help Google crawl the DNS a little quicker. Are there any other recommendations you guys can offer or past experiences?

| stephen_reply0 -

Will Google Recrawl an Indexed URL Which is No Longer Internally Linked?

We accidentally introduced Google to our incomplete site. The end result: thousands of pages indexed which return nothing but a "Sorry, no results" page. I know there are many ways to go about this, but the sheer number of pages makes it frustrating. Ideally, in the interim, I'd love to 404 the offending pages and allow Google to recrawl them, realize they're dead, and begin removing them from the index. Unfortunately, we've removed the initial internal links that lead to this premature indexation from our site. So my question is, will Google revisit these pages based on their own records (as in, this page is indexed, let's go check it out again!), or will they only revisit them by following along a current site structure? We are signed up with WMT if that helps.

| kirmeliux0 -

Reverse IP Lookup

I have a client that has over 90,000 incoming links from a single IP address. I can't figure out who's linking to them. I've used several different reverse IP lookup tools and can tell that the server is in Europe and ISP is AT&T Global Network Services Nederland B.V.. (http://www.ip-adress.com/reverse_ip/194.196.0.36) Says there's 0 hosts on that IP. Any suggestions?

| DonnaDuncan0 -

How to change the woocommerce product page permalink

Sorry for posting this again. How can I change the product URL structure? Please let me know how to fix the WooCommerce permalink in WordPress. My current URL is http://www.ayurjeewan.com/product/divya-ashmarihar-kwath and I want it to look like this (post name only): http://www.ayurjeewan.com/divya-ashmarihar-kwath Attached is a screenshot of the options available. qa2hZMP.jpg

| JordanBrown0 -

Should we nofollow footer social links?

Like most sites today we have a whole raft of social links in our footer, these are on every page of the site and link out to Facebook, Twitter, YouTube, etc Should these links be nofollow to avoid juice leaving our site or would you recommend allowing them to be followed to increase the power of these social sites? Is there a definitive Yay or Na on these social links?

| Twist3600 -

Expired domain 404 crawl error

I recently purchased an expired domain at auction, and after I started my new site on it, I am noticing 500+ "not found" errors in Google Webmaster Tools, which are generated by the previous owner's content. Should I use a redirection plugin to redirect those non-existent posts to any new post(s) on my site? Or should I use a 301 redirect? Or should I leave them as they are without taking further action? Please advise.

| Taswirh1 -

How much will changing IP addresses impact SEO?

So my company is upgrading its Internet bandwidth. However, apparently the vendor has said that part of the upgrade will involve changing our IP address. I've found two links that indicate some care needs to be taken to make sure our SEO isn't harmed: http://followmattcutts.com/2011/07/21/protect-your-seo-when-changing-ip-address-and-server/ http://www.v7n.com/forums/google-forum/275513-changing-ip-affect-seo.html Assuming we don't use an IP address that has been blacklisted by Google for spamming or other black hat tactics, how problematic is it? (Note: The site hasn't really been aggressively optimized yet - I started with the company less than two weeks ago, and just barely got FTP and CMS access yesterday - so honestly I'm not too worried about really messing up the site's optimization, since there isn't a lot to really break.)

| ufmedia0 -

Is there a maximum sitemap size?

Hi all, Over the last month we've included all images, videos, etc. into our sitemap and now its loading time is rather high. (http://www.troteclaser.com/sitemap.xml) Is there any maximum sitemap size that is recommended from Google?

| Troteclaser0 -
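For reference, the sitemaps.org protocol caps a single sitemap file at 50,000 URLs and lets a sitemap index file point at several smaller sitemaps. A minimal sketch of splitting one large URL list that way (the example.com URLs, file names and function names are hypothetical, and image or video extensions are left out):

```python
from xml.sax.saxutils import escape

MAX_URLS_PER_SITEMAP = 50_000  # per-file URL limit in the sitemaps.org protocol
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def chunk_urls(urls, size=MAX_URLS_PER_SITEMAP):
    """Split a list of URLs into consecutive chunks of at most `size` items."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_sitemap(urls):
    """Render one <urlset> document for a single chunk of URLs."""
    entries = "".join(f"  <url><loc>{escape(u)}</loc></url>\n" for u in urls)
    return (
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        f'<urlset xmlns="{SITEMAP_NS}">\n'
        f"{entries}</urlset>\n"
    )


def build_sitemap_index(sitemap_locations):
    """Render a <sitemapindex> document that lists the child sitemap files."""
    entries = "".join(
        f"  <sitemap><loc>{escape(loc)}</loc></sitemap>\n"
        for loc in sitemap_locations
    )
    return (
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        f'<sitemapindex xmlns="{SITEMAP_NS}">\n'
        f"{entries}</sitemapindex>\n"
    )


if __name__ == "__main__":
    # Hypothetical site with 120,001 URLs, split into three sitemap files.
    all_urls = [f"http://example.com/page/{i}" for i in range(120_001)]
    chunks = chunk_urls(all_urls)
    locations = [
        f"http://example.com/sitemap-{n}.xml" for n in range(1, len(chunks) + 1)
    ]
    print(build_sitemap_index(locations))
```

Splitting by content type (pages, images, videos) instead of by count is another option; the index file works the same way in both cases.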

International architecture: Country specific subfolders > domain mapping to tld

Hi, I've got a client's dev saying they are setting up country/language-specific subfolders (as I recommended), BUT now they are saying they want to set up on network.domain.com (for example), and then each language will have its own subfolder BUT will be domain-mapped to the TLD as and when they get them. I have asked them to clarify, since this sounds a bit strange: I thought it best to have domain.com, then /uk and /us etc., and I'm sure it's OK to forward country-specific TLDs to these subfolders. It's this new subdomain (network.) that's concerning me, and the mapping rather than forwarding (or is it the same thing?). Does anyone know offhand if the above sounds OK, or also think it's a bit strange, or know of issues with such a setup? Many thanks, Dan

| Dan-Lawrence0 -

How to handle (internal) search result pages?

Hi Mozers, I'm not quite sure what the best way is to handle internal search pages. In this case it's for an ecommerce website with about 8.000+ products and search pages currently look like: example.com/search.php?search=QUERY+HERE. I'm leaning towards making them follow, noindex. Since pages like this can be easily abused for duplicate content and because I'd rather have the category pages ranked. How would you handle this?

| Qon0 -

Using the Google Remove URL Tool to remove https pages

I have found a way to get a list of 'some' of my 180,000+ garbage URLs now, and I'm going through the tedious task of using the URL removal tool to put them in one at a time. Between that and my robots.txt file and the URL Parameters, I'm hoping to see some change each week. I have noticed when I put URL's starting with https:// in to the removal tool, it adds the http:// main URL at the front. For example, I add to the removal tool:- https://www.mydomain.com/blah.html?search_garbage_url_addition On the confirmation page, the URL actually shows as:- http://www.mydomain.com/https://www.mydomain.com/blah.html?search_garbage_url_addition I don't want to accidentally remove my main URL or cause problems. Is this the right way this should look? AND PART 2 OF MY QUESTION If you see the search description in Google for a page you want removed that says the following in the SERP results, should I still go to the trouble of putting in the removal request? www.domain.com/url.html?xsearch_... A description for this result is not available because of this site's robots.txt – learn more.

| sparrowdog1 -

Omitted results

Hello, We have been facing a loss in rankings and organic traffic for 3 months on our ecommerce website. Mostly we have lost our rankings on our product pages. These pages have gone into the "omitted results" of Google. It all started 3 months ago, when we had to face a duplicate content issue due to a technical problem with our servers: 2 other domains that we own were pushed online to Google when they shouldn't have been. They created millions of links to our main domain in a few days, plus duplicate versions of / redirections to our main website. We fixed this a long time ago now. But in GWT we still see these domains bringing links to our main ecommerce site. It has gone down from 36 million links to 3 million... even though today there is no link! We have done a lot of on-site optimization, like adding specific content to our most important pages, rebuilding the navigation, adding microdata, and adding canonical URLs on product pages that we found were very similar (we sell very technical products, and we have products that are very similar; now we have chosen 1 product as the canonical each time it was necessary). But still our product pages don't rank in Google. They stay in the "omitted results". Before, they were ranking very well on the 1st page of Google's results. And we have noticed that some ads we put on ad listing websites are now well ranked in Google's results, as if the ads had more authority on the subject than our own webpages. We started to delete some of these ads, but it's not always possible, and 2-3 of them are still online. Any advice on getting our most important webpages back to the top of Google's results? Regards

| Poptafic0 -

Is Google suppressing a page from results - if so why?

UPDATE: It seems the issue was that pages were accessible via multiple URLs (i.e. with and without trailing slash, with and without .aspx extension). Once this issue was resolved, pages started ranking again. Our website used to rank well for a keyword (top 5), though this was over a year ago now. Since then the page no longer ranks at all, but sub pages of that page rank around 40th-60th. I searched for our site and the term on Google (i.e. 'Keyword site:MySite.com') and increased the number of results to 100, again the page isn't in the results. However when I just search for our site (site:MySite.com) then the page is there, appearing higher up the results than the sub pages. I thought this may be down to keyword stuffing; there were around 20-30 instances of the keyword on the page, however roughly the same quantity of keywords were on each sub pages as well. I've now removed some of the excess keywords from all sections as it was getting in the way of usability as well, but I just wanted some thoughts on whether this is a likely cause or if there is something else I should be worried about.

| Datel1 -
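The update above describes the same page being reachable with and without a trailing slash and with and without the .aspx extension. A minimal sketch of spotting such variants by normalizing each URL to one form (the normalization rules, function names and mysite.com URLs are assumptions for illustration, not the poster's actual fix):

```python
from collections import defaultdict
from urllib.parse import urlsplit, urlunsplit


def canonical_url(url, strip_extension=".aspx"):
    """Map trailing-slash and extension variants of a URL to a single form."""
    parts = urlsplit(url)
    path = parts.path.rstrip("/")  # "/widgets/" -> "/widgets", "/" -> ""
    if path.lower().endswith(strip_extension):
        path = path[: -len(strip_extension)]  # "/widgets.aspx" -> "/widgets"
    if not path:
        path = "/"  # keep the root path explicit
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), path, parts.query, "")
    )


def group_variants(urls):
    """Return only the canonical URLs that are reachable under several variants."""
    groups = defaultdict(list)
    for url in urls:
        groups[canonical_url(url)].append(url)
    return {canon: found for canon, found in groups.items() if len(found) > 1}


if __name__ == "__main__":
    # Hypothetical crawl output.
    crawled = [
        "http://mysite.com/widgets",
        "http://mysite.com/widgets/",
        "http://mysite.com/widgets.aspx",
        "http://mysite.com/about",
    ]
    for canon, found in group_variants(crawled).items():
        print(canon, "<-", found)
```

Each group that comes back is a candidate for a 301 redirect or a rel="canonical" tag pointing at the one preferred URL.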

"Search Box Optimization"

A client of ours recently received an email from a random SEO "company" claiming they could increase website traffic using a technique known as "search box optimization". Essentially, they are claiming they can insert a company name into the autocomplete results on Google. Clearly, this isn't a legitimate service - however, is it a well known technique? Despite our recommendation to not move forward with it, the client is still very intrigued. Here is a video of a similar service:

https://www.youtube.com/watch?v=zW2Fz6dy1_A

| McFaddenGavender0 -

Why google indexed pages are decreasing?

Hi, my website had around 400 pages indexed, but since February I have noticed a huge decrease in the number of indexed pages, and it is still decreasing. Can anyone help me find out the reason? Where can I get a solution for this? Will it affect my web page ranking?

| SierraPCB0 -

Sitemap indexed pages dropping

About a month ago I noticed that the number of pages indexed from my sitemap is dropping. There are 134 pages in my sitemap and only 11 are indexed. It used to be 117 pages and just died off quickly. I still seem to be getting consistent search traffic, but I'm just not sure what's causing this. There are no warnings or manual actions required in GWT that I can find.

| zenstorageunits0 -

Redirect URLS with 301 twice

Hello, I had asked my client to ask her web developer to move to a more simplified URL structure. There was a folder called "home" after the root which served no purpose. I asked for the URLs to be redirected using 301 to new URLs which did not have this folder. However, the web developer didn't agree and decided to just rename the "home" folder "p". I don't know why he did this. We argued the case and he then created the URL structure we wanted. Initially he had 301 redirected the old URLs (the ones with "home") to his new version (the ones with "p"). When we asked for the more simplified URLs after arguing, he just redirected all the "p" URLs to the PAGE NOT FOUND page. However, remember, all the original URLs are now being redirected to PAGE NOT FOUND as a result.

The problems I see are these, unless he redirects again:

1) The new simplified URLs have to start from scratch to rank.
2) We have duplicated content: two URLs with the same content.
3) Customers clicking products in the SERPs will currently find that they are being redirected to the 404 page.

I understand that redirection has to occur but my questions are these:

1) Is it OK to redirect twice with 301, so old URL to the "p" version then to the final simplified version? Will link juice be lost doing this twice?
2) If he redirects from the original URLs to the final version, missing out the "p" version, what should happen to the "p" versions? They are currently indexed.

Any help would be appreciated. Thanks

| AL123al0 -
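One way to picture the double-redirect question above is to treat the redirect rules as a lookup table and flatten it, so that every old URL points straight at its final destination in a single hop. A minimal sketch, assuming the rules can be listed as source/target pairs (the paths and function names are hypothetical, and it says nothing about how search engines weigh chained redirects):

```python
def resolve_final(url, redirects, max_hops=10):
    """Follow redirect rules from `url`; return (final_url, number_of_hops)."""
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen or hops > max_hops:
            raise ValueError("redirect loop or chain too long")
        seen.add(url)
    return url, hops


def flatten_redirects(redirects):
    """Rewrite every rule so its source points directly at the final target."""
    return {src: resolve_final(src, redirects)[0] for src in redirects}


if __name__ == "__main__":
    # Hypothetical rules: "home" folder -> "p" folder -> simplified URL.
    rules = {
        "/home/widgets": "/p/widgets",
        "/p/widgets": "/widgets",
    }
    print(resolve_final("/home/widgets", rules))
    print(flatten_redirects(rules))
```

With the flattened table, both the original "home" URLs and the interim "p" URLs would get their own direct 301 to the simplified URL, which also gives the indexed "p" versions somewhere to go.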

Updating inbound links vs. 301 redirecting the page they link to

Hi everyone, I'm preparing myself for a website redesign and finding conflicting information about inbound links and 301 redirects. If I have a URL (we'll say website.com/website) that is linked to by outside sources, should I get those outside sources to update their links when I change the URL to website.com/webpage? Or is it just as effective from a link juice perspective to simply 301 redirect the old page to the new page? Are there any other implications to this choice that I may want to consider? Thanks!

| Liggins0 -

Multiple H1 tags in Squarespace

Hi. I'm using Squarespace, and I've noticed they assign the page title and site title h1 tag status. So if I add an on-page h1 tag, that's three in total. I've seen what Matt Cutts said about multiple h1 tags being acceptable (although that video was back in 2009 and a lot has changed since then). But I'm still a little concerned that this is perhaps not the best way of structuring for SEO. Could anyone offer me any advice? Thanks.

| The_Word_Department0 -

SEO value of InDesign pages?

Hi there, my company is exploring creating an online magazine built with Adobe's InDesign toolset. If we proceeded with this, could we make these pages "as spiderable" as normal html/css webpages? Or are we limited to them being less spiderable, or not at all spiderable?

| TheaterMania1 -

Error report in Bing Evaluated size of HTML....

Hi Whilst checking Bing's SEO analyser I got this error message for our page www.tidy-books.co.uk/childrens-bookcases "Evaluated size of HTML is estimated to be over 125 KB and risks not being fully cached. (Issue marker for this rule is not visible in the current view)" Just wondering what needs to be done about it and what it actually means? Thanks

| tidybooks0 -

2 Versions of Same Homepage

We want to show new and returning visitors different versions of our homepage (same URL). What, if anything, should we use as the markup to tell Google what we are doing?

Any danger that Google will think we are cloaking? Thanks!

| theLotter0 -

Wordpress versus html and google ranking

My current SEO has always recommended that I take my site to wordpress. I really don't want to move to wordpress. I don't like it... I just like writing code in raw html, css, and script. I feel like I have more control that way. Wordpress just seems like a platform for blogs (I have my blog in wordpress). My question is, do wordpress websites typically rank better? Is there benefit to moving to it?

| CalicoKitty20000 -

Direct link vs 302 redirect

So we have recently relaunched a site that we manage. As part of this we have changed the domain. The web design agency that built the new site has implemented a direct link from the old domain to the new domain. Which is best practice: a direct link or a 302 redirect? Thanks

| cbarron0 -

Is there a tool to see all redirects?

I'm thinking this is a silly question, but I've never had to deal with it I thought I'd ask. Ok is there a tool out there that will show all the redirects to a domain. I'm working on a project that I keep stumbling on urls that redirect to the site I'm studying. They don't show up in Open Site or ahrefs as linking domains, but they keep popping up on me. Any thoughts?

| BCutrer0 -

How Long To Recover Rankings After Multi-Day Site Outage?

Hi, A site we look after for a client was down for almost 3 days this month (11th - 14th of May, to be exact). This was caused by my client's failure to verify their domain name in accordance with the new ICANN procedures. The details are unimportant, but it took a long while for them to get their domain name registration contact details validated, hence the outage. Very soon after this downtime we noticed that the site had slipped back in the Google rankings for most of the target keywords, sometimes quite considerably. I guess this is Google penalizing this client for their failure to keep their site live. (And they really can't have too many complaints about this, in my opinion.) The good news is that the rankings show signs of improving again slightly. However, two weeks after the outage, they have not recovered all the way to where they were before. My question is this: do you expect that the site will naturally regain the previous excellent rankings without us doing anything? If so, how long do you estimate this could take? On the other hand, if Google typically penalizes this kind of error 'permanently', is there anything we can do to help signal to Google that the site deserves to get back up to where it used to be? I am keen to get your thoughts, and especially to hear from anyone who has faced a similar problem in the past. Thanks

| smaavie0 -

How to identify orphan pages?

I've read that you can use Screaming Frog to identify orphan pages on your site, but I can't figure out how to do it. Can anyone help? I know that Xenu Link Sleuth works but I'm on a Mac so that's not an option for me. Or are there other ways to identify orphan pages?

| MarieHaynes0 -
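Independent of any particular crawler, the idea behind finding orphan pages is a set difference: take every URL you know exists, then subtract the URLs that can be reached by following internal links from the homepage. A minimal sketch, assuming the known URLs come from something like a sitemap or CMS export and the link graph from a crawl export (all names and paths here are hypothetical, and this is not a description of how Screaming Frog or Xenu work internally):

```python
from collections import deque


def reachable_pages(start, links):
    """Breadth-first walk of the internal link graph starting at `start`."""
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


def find_orphans(known_urls, start, links):
    """Known URLs that no chain of internal links from `start` leads to."""
    return sorted(set(known_urls) - reachable_pages(start, links))


if __name__ == "__main__":
    # Hypothetical link graph: page -> pages it links to.
    link_graph = {
        "/": ["/services", "/blog"],
        "/services": ["/blog"],
        "/old-landing-page": ["/services"],
    }
    known = ["/", "/services", "/blog", "/old-landing-page", "/hidden-offer"]
    print(find_orphans(known, "/", link_graph))
```

Note that a page which links out but receives no internal links, like the old landing page in the sample data, still counts as an orphan.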

Is alt text inside an img tag inside an h1 the same weight as text directly inside the h1?

Right now I use a background image and CSS to tie the h1 tag to my logo on each page. However, I am concerned that may not be best practice. Plus, I am interested in using schema markup on my logo. So, my question is, if I use an image with alt text inside my h1 tag, will the alt text carry as much weight as a text-based h1?

| Avalara0
