Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies.

Category: Technical SEO

Discuss site health, structure, and other technical SEO strategies.

-

Special characters in URL

Will a registered trademark symbol (®) within a URL be bad? I know some special characters are unsafe (#, >, etc.), but I cannot find anything that mentions the registered trademark symbol. Thanks!

| bonnierSEO0 -
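A quick way to see what actually happens to ® in a URL: it is not a delimiter with special meaning like # or ?, but it is outside ASCII, so it travels percent-encoded as its UTF-8 bytes. A minimal Python sketch; the slug is a made-up example:

```python
from urllib.parse import quote, unquote

# Hypothetical slug containing the registered trademark symbol (U+00AE).
slug = "acme®-widgets"

# quote() percent-encodes the UTF-8 bytes of every character outside the
# unreserved set; the ASCII letters and "-" pass through unchanged.
encoded = quote(slug)
print(encoded)                   # acme%C2%AE-widgets

# Decoding restores the symbol, so nothing is lost in transit...
print(unquote(encoded) == slug)  # True

# ...but an ASCII-only slug avoids the encoded form showing up when the
# URL is copied, pasted, or shared.
print(slug.replace("®", ""))     # acme-widgets
```

The sketch only covers encoding; it says nothing about how search engines weigh such URLs.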

Can a CMS affect SEO?

As the title says, really. I run www.specialistpaintsonline.co.uk. When I took it over six months ago, it had bad links that Google had penalized, so it lost its value. The penalty was lifted in September, the site now complies with all guidelines, and SEO work has been done and is constantly monitored. The issue I have is that sales and visits have not gone up. We are failing fast, and running on two or three sales a month isn't enough to cover any sort of cost, let alone wages. Hence my question: can the CMS have anything to do with it? I'm at a loss and going grey, so any help or advice would be great. Thanks in advance.

| TeamacPaints0 -

SEO-optimized URLs for Japan: English or Japanese Characters

Hi, has anyone got experience with Japanese URLs? I'm currently working on the relaunch of the Japanese site of troteclaser.com, and I wonder whether we should use English or Japanese characters for the URLs. I found some topics on the forums about this, but they only say that Google can crawl both without problems. The question is whether there is a benefit to using Japanese characters.

| Troteclaser1 -
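The ranking question stays open here, but it helps to see what each option looks like in the raw URL: Japanese characters are sent percent-encoded, so the form that gets copied or shared in many contexts is much longer than what the browser displays. A sketch with hypothetical slugs:

```python
from urllib.parse import quote, unquote

# Hypothetical slugs for the same page: English, and Japanese katakana
# ("レーザー" means "laser").
english_slug = "laser-engraver"
japanese_slug = "レーザー"

# ASCII letters and "-" need no encoding.
print(quote(english_slug))  # laser-engraver

# Each katakana character becomes three UTF-8 bytes, i.e. nine characters
# once percent-encoded.
encoded = quote(japanese_slug)
print(encoded)  # %E3%83%AC%E3%83%BC%E3%82%B6%E3%83%BC
print(len(japanese_slug), len(encoded))  # 4 36

# The encoding is lossless: decoding gives back the Japanese text.
print(unquote(encoded) == japanese_slug)  # True
```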

Blocking Affiliate Links via robots.txt

Hi, I work with a client who has a large affiliate network pointing to their domain, which is a large part of their inbound marketing strategy. All of these links point to a subdomain, affiliates.example.com, which then 301-redirects each link to the relevant target page. These links have been showing up in Webmaster Tools as top linking domains and also in the latest downloaded links reports. To follow guidelines and ensure that these links aren't counted by Google for either positive or negative impact on the site, we have added a block to the robots.txt of the affiliates.example.com subdomain, blocking search engines from crawling the full subdomain. The robots.txt file is the following:

User-agent: *
Disallow: /

We have verified the subdomain in Google Webmaster Tools and made certain that Google can reach and read the robots.txt file. We know Google is being blocked from reading the affiliates subdomain. However, although we added this block a few weeks ago, links are still showing up in the latest downloads report as first discovered after we added it. We want to make sure that the block was implemented properly and that these links aren't being used to negatively impact the site. Any suggestions or clarification would be helpful: if the subdomain is blocked for search engines, why are they following the links and reporting them as latest links in the www.example.com GWMT account?
And if the block is implemented properly, will the total number of links pointing to our site, as reported in the Links to Your Site section, be reduced, or does this have no impact on that figure? From a development standpoint, it's much easier for us to adjust the robots.txt file than to change the affiliate links from a 301 to a 302, which is why we decided to go with this option. Any help you can offer will be greatly appreciated. Thanks, Mark

| Mark_Ginsberg0 -
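What the robots.txt in the question tells a compliant crawler can be checked with Python's standard-library parser. The affiliate URLs below are hypothetical. Note the limits of this check: the file governs fetching on affiliates.example.com only. The links themselves sit on the affiliates' own pages, which remain crawlable, so this says nothing about how those links are counted or reported.

```python
from urllib.robotparser import RobotFileParser

# The robots.txt quoted in the question, served on affiliates.example.com.
robots_txt = """User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# Hypothetical affiliate tracking URLs on the blocked subdomain.
for url in [
    "https://affiliates.example.com/",
    "https://affiliates.example.com/track?id=123",
]:
    # "Disallow: /" is a prefix match on every path, so both are blocked.
    print(url, parser.can_fetch("Googlebot", url))  # False
```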

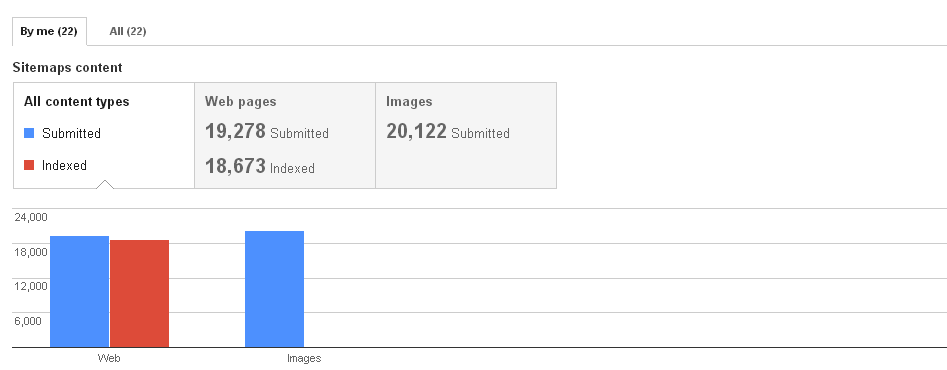

Image Indexing Issue by Google

Hello all, my URL is www.thesalebox.com. I submitted my image sitemaps in Google Webmaster Tools on 10th Oct 2013, but Google still has not indexed any of my website's images. Please refer to my sitemaps, www.thesalebox.com/AppliancesHomeEntertainment.xml and www.thesalebox.com/Hardware.xml, and to my Webmaster Tools status and image indexing status below. Can you please help me understand why my images are not being indexed by Google yet? Is there an issue? Any suggestions? Thanks!

| CommercePundit0 -
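For comparison against the submitted files, an image sitemap nests `image:image` entries (from Google's image sitemap namespace) inside each page's `url` entry. A sketch that builds one with Python's ElementTree; the URLs are hypothetical, and a well-formed sitemap only helps discovery, it does not guarantee that images get indexed.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
IMAGE_NS = "http://www.google.com/schemas/sitemap-image/1.1"
# Serialize the sitemap namespace as the default and the image one as "image:".
ET.register_namespace("", SITEMAP_NS)
ET.register_namespace("image", IMAGE_NS)

def build_image_sitemap(pages):
    """pages maps each page URL to the image URLs shown on that page."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for page_url, image_urls in pages.items():
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = page_url
        for image_url in image_urls:
            image = ET.SubElement(url, f"{{{IMAGE_NS}}}image")
            ET.SubElement(image, f"{{{IMAGE_NS}}}loc").text = image_url
    return ET.tostring(urlset, encoding="unicode")

# Hypothetical page and image, not taken from the sitemaps in the question.
sitemap_xml = build_image_sitemap({
    "http://www.example.com/tv-stand": ["http://www.example.com/img/tv-stand.jpg"],
})
print(sitemap_xml)
```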

Should all pagination pages be included in sitemaps?

How important is it for a sitemap to include all individual URLs for paginated content? Assuming the rel next and prev tags are set up, would it be OK to have just page 1 in the sitemap?

| Saijo.George0 -

Templates for Meta Description, Good or Bad?

Hello, we have a website where users can browse photos in different categories. For each photo we are using a meta description template such as: "Are you looking for a nice and cool photo? [Photo name] is the photo which might be of interest to you." And in the keywords tag we are using: "[Photo name] photos, [Photo name] free photos, [Photo name] best photos". I'm wondering, is this a safe method? It's very difficult to write descriptions manually when you have 3,000+ photos in the database. Thanks!

| TheSEOGuy10 -
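One way to make templated descriptions less uniform is to pull in more per-photo fields than the name. In this sketch the `category` field is an assumption about the database, and the 155-character cap is a common rule of thumb rather than a fixed limit (Google truncates by display width).

```python
def photo_meta_description(photo_name, category, max_length=155):
    """Fill a description template from database fields, capped in length."""
    text = (f"Looking for {category} photos? {photo_name} is one of our "
            f"{category} photos and might be just what you need.")
    if len(text) <= max_length:
        return text
    # Cut at the last whole word that fits, leaving room for the ellipsis.
    return text[: max_length - 1].rsplit(" ", 1)[0] + "…"

print(photo_meta_description("Sunset over Lake Como", "landscape"))
```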

Where to put Schema On Page

Which part of my page should I put Schema data in? The header? The footer? Also, should it go on all pages, or just the home page?

| bozzie3114 -
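Where the markup goes depends on the format: microdata or RDFa attributes have to sit on the visible elements they describe, while a JSON-LD block can be placed in the head or the body. As for which pages, a common approach is business-level markup (such as Organization) on the home page and page-specific types on the pages they describe. A sketch that emits a JSON-LD block; the organization details are placeholders.

```python
import json

def json_ld_script(data):
    """Wrap a schema.org object in the script tag used for JSON-LD markup."""
    return ('<script type="application/ld+json">'
            + json.dumps(data, ensure_ascii=False)
            + "</script>")

# Hypothetical Organization details; replace with the real business data.
organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "name": "Example Ltd",
    "url": "https://www.example.com/",
}
print(json_ld_script(organization))
```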

ALT attribute keyword on the same image but different pages

Hi there, as I'm sure you're aware, Moz advises using a keyword within the ALT attribute on pages. On a new website I am launching, I have the ability to add an alt keyword to image headers. On multiple pages we have the exact same image, but with different keywords inside the alt attribute. The image itself is a collage of different images, so the keywords used can, quite sneakily, match the image. My question is therefore: will using different keywords on the same image on different pages have a negative effect on SEO? Thanks, Stuart

| Stuart260 -

Hreflang Tag great for Google, what about Bing or others?

I've read that the hreflang tag is all the rage for international solutions on a per-page basis. I haven't read much about what international agencies are using for non-Google search engines such as Bing. Are the common language meta tags the only solution? I would love to see an article that addresses this.

| MikeSEOTruven0 -

New "Static" Site with 302s

Hey all, I came across an interesting challenge recently, one that I was hoping some of you might have had experience with! We're currently rebuilding our website, which I'm really excited about. The new site uses Markdown to create an entirely static site. Load times are fantastic, and the code is clean. Life is good, apart from the 302s. One of the quirks I've realized with old-school, non-server-generated page content is that every page of the site is an index.html file in a directory. As a result, www.website.com/page-title will 302 to www.website.com/page-title/. My solution off the bat has been to be super diligent, stay on top of the link profile, and send lots of helpful emails to the staff reminding them how to build links, but I know that even the best-laid plans often fail. Has anyone had a similar challenge with a static site and found a way to overcome it?

| danny.wood1 -
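One mitigation that doesn't rely on everyone remembering: normalize every internal link (and sitemap or canonical URL) to the trailing-slash form at build time, so the 302 is only hit by outside links. In this sketch the "has a file extension" test is an assumption about how the site names its files; making the host answer with a 301 instead is a server setting that depends on where the static files are hosted.

```python
import posixpath
from urllib.parse import urlsplit, urlunsplit

def with_trailing_slash(url):
    """Rewrite directory-style URLs to the /page-title/ form served directly.

    Paths whose last segment has a file extension (e.g. /feed.xml) are left
    alone; everything else gets a trailing slash.
    """
    parts = urlsplit(url)
    path = parts.path or "/"
    last_segment = path.rsplit("/", 1)[-1]
    if not path.endswith("/") and not posixpath.splitext(last_segment)[1]:
        path += "/"
    return urlunsplit((parts.scheme, parts.netloc, path, parts.query, parts.fragment))

print(with_trailing_slash("http://www.website.com/page-title"))
# http://www.website.com/page-title/
```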

Removing images from site and Image Sitemap SEO advice

Hello again, I have received an update request asking me to remove images from this site (as of now it's a bunch of thumbnails). Current page design: http://1stimpressions.com/portfolio/car-wraps/ They want it turned into a new design that uses a slider (such as this): http://1stimpressions.com/portfolio/ They don't want the thumbnails on the page anymore. My question is: since my site has an image sitemap that has been indexed, will removing all the images hurt my SEO greatly? If so, what steps would you recommend to reduce any SEO damage? Thank you again for your help; the feedback is always great and very helpful! 🙂 Cheers!

| allstatetransmission0 -

Will blocking the Wayback Machine (archive.org) have any impact on Google crawl and indexing/SEO?

Will blocking the Wayback Machine (archive.org) by adding the code they provide have any impact on Google crawling and indexing/SEO? Does anyone know? Thanks! ~Brett

| BBuck0 -

Question on noscript tags and indexing

If I have a <noscript> tag on every page of my website with the same sentence over and over, saying something to the effect of "Sorry, our site uses JavaScript; please enable JavaScript for the full site experience.", Webmaster Tools will tell me that one of the most common words on my site is "Javascript".

Is this something to be concerned about from an SEO perspective? My site is obviously not about JavaScript, and I don't want to dilute my pages' topic or authority by repeating words that are not relevant to the topic of my site.

Thanks!

| IrvCo_Interactive0 -

Why is my blog disappearing from Google index?

My Google Blogger blog is about 10 months old. In that time I have worked really hard on adding unique content, building relationships with other bloggers in the same niche, and doing some inbound marketing. Two weeks ago I updated the template to something cleaner, with a bit more of a "WordPress" feel to it. This means I've messed about with the code a lot in these weeks, adding social buttons etc. The problem is that from some point late last week (Thursday/Friday), my pages started disappearing from Google's index. I have checked Webmaster Tools and there are no manual actions. My link profile is pretty clean, as it's a new site, and I have manually checked every piece of published content for plagiarism etc. So what is going on? Did I break my blog? Or is something else amiss? Impressions are down 96% comparing Nov 1-5 with the previous 5 days. The site is here: http://bit.ly/174beVm Thanks in advance for any help.

| Silkstream0 -

Why is Google's cache preview showing different version of webpage (i.e. not displaying content)

My URL is: http://www.fslocal.com Recently, we discovered that Google's cached snapshots of our business listings look different from what's displayed to users. The main issue? Our content isn't displayed in cached results (although the content isn't visible on the front end of the cached pages, the text can be found when you view the page source of that cached result). These listings are structured so that everything is coded and contained within one page (e.g. http://www.fslocal.com/toronto/auto-vault-canada/). But even though the URL stays the same, we've created separate "pages" of content (e.g. "About," "Additional Info," "Contact," etc.) for each listing, and only one "page" of content is ever displayed to the user at a time. This is controlled by JavaScript and display:none in CSS. Why do our cached results look different? Why would our content not show up in Google's cache preview, even though the text can be found in the page source? Does it have to do with the way we're using display:none? Are there negative SEO effects from how we're using it (i.e. we're employing it strictly for aesthetics, but is it possible Google thinks we're trying to hide text)? Google's Technical Guidelines recommend against using "fancy features such as JavaScript, cookies, session IDs, frames, DHTML, or Flash." If we were to separate those business listing "pages" into actual separate URLs (e.g. http://www.fslocal.com/toronto/auto-vault-canada/contact/ would be the "Contact" page) and use static HTML instead of complicated JavaScript, would that solve the problem? Any insight would be greatly appreciated. Thanks!

| fslocal0 -

Should I index or noindex a contact page?

I'm wondering if I should noindex the contact page. I'm doing SEO for a website and would like to know whether noindexing the contact page would help or hurt SEO for that website.

| aronwp0 -

301 redirect relative or absolute path?

Hello everyone, we recently changed the URL structure on our website, and of course we had to 301 redirect the old URLs to the corresponding new ones. The way the technical team did this is: "http://www.domain.com/old-url.html" 301 redirects to "/new-url.html", meaning a relative redirect path, not an absolute one like this:

"http://www.domain.com/old-url.html" 301 redirects to "http://www.domain.com/new-url.html"

This was done for a few thousand URLs, and organic traffic for those pages dropped after the change. (No other changes were made to these pages, and the new URLs are as SEO-friendly as possible, with an A grade in the On-Page Grader.) The question is: does the relative redirect negatively affect SEO, or does it count the same as an absolute path redirect? Thanks, S.

| Silviu0 -
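HTTP allows a relative Location header: the client resolves it against the URL it requested (RFC 7231, section 7.1.2), so both forms in the question point at the same absolute URL. This sketch shows that resolution with Python's `urljoin`; it does not show how Google weighs the two forms, only that the redirect target is identical.

```python
from urllib.parse import urljoin

# The requested URL and the relative Location header from the question.
request_url = "http://www.domain.com/old-url.html"
location_header = "/new-url.html"

# Resolving the relative reference against the request URL gives the same
# target the absolute form spells out.
resolved = urljoin(request_url, location_header)
print(resolved)  # http://www.domain.com/new-url.html
```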

Permalink structure for WordPress Getshopped Plugin

Hi everyone, has anyone had any experience with the Getshopped eCommerce plugin for WordPress and its permalink structure? Currently the permalink reads something like http://www.sitename.com/products-page/product-category/product-description/ I would like to change it to http://www.sitename.com/product-description/ but the SEO plugin I am using, All in One SEO, only allows me to edit the "product-description" part of the permalink, not the "products-page/product-category/" part. The permalink structure is set to /%postname%/ under Settings in WordPress. Any help or comments will be greatly appreciated. Thanks

| webseoservices0 -

Noindex user profile

I have a social networking site with user and company profiles. Some profiles have little to no content. One of the users here at Moz suggested noindexing these profiles. I am still investigating this issue and have some follow-up questions: What is the possible gain from noindexing uninteresting profiles? I'm especially interested in this since these profiles do bring in long-tail traffic at the moment. How irreversible is introducing a noindex directive? Would everything return to normal if I removed the noindex directive? When determining the threshold for having profiles indexed, how should the following items be weighed: (1) the total number of words on the page (made up of one or more of the following: full name, city, 0 to N company names, bio, activity); (2) a (unique) profile picture; (3) (nofollowed) links to the user's profiles on social networks or the user's own site; (4) an embedded Google Map? Thanks!

| thomasvanderkleij0 -

302 redirect used, submit old sitemap?

The website of a partner of mine was recently migrated to a new platform. Even though the content on the pages mostly stayed the same, both the HTML source (divs, meta data, headers, etc.) and the URLs (index.php removed, capitalization removed, etc.) changed heavily. Unfortunately, the URLs of ALL forum posts (150K+) were redirected using a 302 redirect, which was only recently discovered and swiftly changed to a 301. Several other important content pages (150+) weren't redirected at all at first, but most now have a 301 redirect as well. The 302 redirects and 404ing content pages had been live for over 2 weeks at that point, and judging by the consistent day-over-day drop in organic traffic, I'm guessing Google didn't like the way this migration went. My best guess would be that Google is currently treating all these content pages as 'new' (after all, 50%+ of the source code changed, most of the meta data changed, the URL changed, and a 302 redirect was used). On top of that, the large number of 404s they've encountered (40K+) probably also fueled their belief that the website is no longer worthy of traffic. Given that some of these pages had been online for almost a decade, I would love Google to see that these pages are actually new versions of the old pages, and therefore pass on any link juice and authority. I had the idea of submitting a sitemap containing the most important URLs of the old website (harvested from the Top Visited Pages in Google Analytics, because no old sitemap was ever generated), thereby re-pointing Google to all these old pages, but presenting them with a proper 301 redirect this time, hopefully causing them to regain their rankings. To the best of your knowledge, would that help with the problems I've outlined above? Could it hurt? Any other tips are welcome as well.

| Theo-NL0 -

403s vs 404s

Hey all, we recently launched a new site on S3, and old pages that I haven't been able to redirect yet are showing up as 403s instead of 404s. Is a 403 worse than a 404? They're both basically dead ends, right? (I have read the status code guides, yes.)

| danny.wood1 -

Phone Number In Meta Description

People are more likely to call us than email us. However, if they're using a mobile device, there's a click-to-call button on the site. My question is this: Google does not include our phone number in our meta description. I could try to get the description changed, but it doesn't seem like it would make that big a difference for just the desktop site. Am I missing something about the importance of the phone number on a desktop site? Any experience with this situation? Thanks, Ruben

| KempRugeLawGroup3 -

Googlebot Crawl Rate causing site slowdown

I am hearing from my IT department that Googlebot is causing a massive slowdown/crash on our site. We get 3.5 to 4 million pageviews a month and add 70-100 new articles to the website each day. We provide daily stock research and market analysis, so it's all high-quality, relevant content. Here are the crawl stats from WMT: http://imgur.com/dyIbf I have not worked with many high-volume, high-traffic sites before, but these crawl stats do not seem out of line. My team is getting pressure from the sysadmins to slow down the crawl rate, or to block some or all of the site from Googlebot. Do these crawl stats seem in line with similar sites? Would slowing down the crawl rate have a big effect on rankings? Thanks

| SuperMikeLewis0 -

Can Google Read schema.org markup within Ajax?

Hi all, as a local business directory, we also display opening hours on a business listing page, e.g. http://www.goudengids.be/napoli-kontich-2550/

At the same time, I also have schema.org markup for opening hours implemented.

But, for technical reasons (performance), the opening hours (and the markup alongside them) are displayed using AJAX. I'm wondering if Google is able to read the markup. The rich snippet tool and markup plugins like Semantic Inspector can't "see" the opening hours markup. Any advice here?

| TruvoDirectories0 -

Can you noindex a page, but still index an image on that page?

If a blog is centered around visual images, and we have specific pages with high-quality content that we plan to index and use to drive our traffic, but also many pages with just our images, what is the best way to get these images indexed? We want to noindex all the pages with just images because they are thin content. Can you noindex,follow a page but still have the images on that page indexed? Please explain how to go about this.

| WebServiceConsulting.com0 -

Can I use a 410'd page again at a later time?

I have old pages on my site that I want to 410 so they are completely removed, but if I later want to use one of those URLs again, can I just remove the 410 status code, put new content on that page, and have it indexed again?

| WebServiceConsulting.com0 -

How to Remove a website from your Bing Webmaster Tools account

I have a site in Bing Webmaster Tools that I no longer work on. I can't find where to delete this website from my Webmaster Tools account. Does anyone know how? (There doesn't seem to be anything obvious in Bing Help or through a Google search.)

| TopFloor0 -

Umbrella company and multiple domains

I'm really sorry for asking this question yet again. I have searched through previous answers but couldn't find anything exactly like this. There is a website called example.com. It is a sort of umbrella company for 4 other separate domains, i.e. 4 separate companies. The home page of the "umbrella" company website is example.com. It is just an image with no content except navigation directing visitors to the 4 company websites. The other pages of example.com are the 4 separate companies' domains. So on the navigation bar there is: Home page = example.com, company1page = company1domain.com, company2page = company2domain.com, etc. Clicking "Home" takes you back to example.com (which is just an image). How bad or good is this structure for SEO? Would you recommend any changes to help them rank better? The home page has no authority or links, and neither do 3 of the 4 other domains. The 4 company websites are independent in content (although the theme is the same); what brings them all together is this umbrella website, example.com. Thank you

| AL123al0 -

Image height/width attributes: how important are they, and should a best-practice site include them as standard?

Hi, how important are the image height/width attributes, and would you expect a best-practice site to include them? I hear that not having them can slow down page load time; is that correct? Are there any other issues from not having them? I know of some regarding social sharing (I know Buffer prefers images with h/w attributes when drawing up its selection of image options when you post). Most importantly, though, would you expect them to be intrinsic to sites that have been designed according to best-practice guidelines? Thanks

| Dan-Lawrence0 -

XML Sitemap without PHP

Is it possible to generate an XML sitemap for a site without PHP? If so, how?

| jeffreytrull11 -
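Yes: a sitemap is just a static XML file, so any language (or a desktop crawler that exports sitemaps) can produce it; nothing needs to run on the server. A minimal Python sketch, assuming you already have the list of page URLs; the URLs below are placeholders.

```python
from datetime import date
from xml.sax.saxutils import escape

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(urls, lastmod=None):
    """Return sitemap.xml text for a list of absolute page URLs."""
    lastmod = lastmod or date.today().isoformat()
    lines = ['<?xml version="1.0" encoding="UTF-8"?>',
             f'<urlset xmlns="{SITEMAP_NS}">']
    for url in urls:
        # escape() turns "&", "<" and ">" into entities, as XML requires.
        lines.append(f"  <url><loc>{escape(url)}</loc>"
                     f"<lastmod>{lastmod}</lastmod></url>")
    lines.append("</urlset>")
    return "\n".join(lines)

# Hypothetical page list; in practice it could come from the site's files
# or a crawl export. Save the output as sitemap.xml in the web root.
print(build_sitemap(["https://www.example.com/",
                     "https://www.example.com/search?q=a&page=2"]))
```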

How do I find which pages are being deindexed on a large site?

Is there an easy way or any way to get a list of all deindexed pages? Thanks for reading!

| DA20130 -
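Whatever source you use for the "indexed" side, the comparison itself is a set difference between every URL you expect to be indexed and the URLs you have evidence for. In this sketch both input lists are hypothetical, and using pages that received organic visits as the evidence is only a proxy: a page can be indexed without getting visits, so the output is a list of candidates to check, not confirmed deindexed pages.

```python
def normalize(url):
    """Treat trailing-slash variants (/page vs /page/) as the same URL."""
    return url.rstrip("/")

def find_missing(site_urls, indexed_urls):
    """Return the site URLs absent from the indexed list, in sorted order."""
    indexed = {normalize(u) for u in indexed_urls}
    return sorted(u for u in site_urls if normalize(u) not in indexed)

# Hypothetical inputs: every URL in your sitemap, and URLs you have
# evidence are indexed (e.g. landing pages with recent organic visits).
site = ["https://www.example.com/a/", "https://www.example.com/b",
        "https://www.example.com/c"]
indexed = ["https://www.example.com/a", "https://www.example.com/c/"]
print(find_missing(site, indexed))  # ['https://www.example.com/b']
```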

Can I have an H1 tag below an H2?

Quick question for you all: is there an issue with having an H1 tag physically below an H2 tag on a web page?

| Pete40 -

Does using data-href="" work more effectively than href="" rel="nofollow"?

I've been looking at some bigger enterprise sites and noticed some of them used HTML like this: <a data-href="http://www.otherodmain.com/" class="nofollow" rel="nofollow" target="_blank"></a> instead of a regular href="". Does using data-href and some JavaScript help with shaping internal links, rather than just using a strict nofollow?

| JDatSB0 -

Adding multi-language sitemaps to robots.txt

I am working on a revamped multi-language site that has moved to Magento. Each language runs off the core code, so there are no subdirectories per language. The developer has created sitemaps, which have been uploaded to their respective GWT accounts. They have placed the sitemaps in new directories such as /sitemap/uk/sitemap.xml and /sitemap/de/sitemap.xml. I want to add the sitemaps to robots.txt but can't figure out how to do it. Also, should they have placed the sitemaps in a single location, with the filename identifying each language (/sitemap/uk-sitemap.xml, /sitemap/de-sitemap.xml)? What is the cleanest way of handling these sitemaps, and can/should I add them to robots.txt?

| MickEdwards0 -
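The robots.txt Sitemap directive takes a full URL and can be repeated, one line per file, so either folder layout can be listed as long as each line points at a real sitemap. A sketch that writes the lines and reads them back with Python's robotparser (`site_maps()` needs Python 3.8+); the domain is a placeholder, and the empty Disallow line assumes nothing else is blocked.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical domain; the paths are the ones from the question.
SITE = "https://www.example.com"
sitemap_paths = ["/sitemap/uk/sitemap.xml", "/sitemap/de/sitemap.xml"]

# Sitemap lines need absolute URLs and apply regardless of user-agent group.
robots_txt = "\n".join(
    ["User-agent: *", "Disallow:"]
    + [f"Sitemap: {SITE}{path}" for path in sitemap_paths]
)
print(robots_txt)

# Read the file back the way a parser would, to confirm both are listed.
parser = RobotFileParser()
parser.parse(robots_txt.splitlines())
print(parser.site_maps())
```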

Http to https - is a '302 object moved' redirect losing me link juice?

Hi guys, I'm looking at a new site that's entirely on https. When I load the http variant, it redirects to the https site with "302 object moved" in the code. I found this by adding the http and https variants to Webmaster Tools as separate sites and then doing a 'Fetch as Google' on both. Some traffic comes through the http version, and as people start linking to the new site, I'm worried they'll link to the http variant and the 302 redirect to the https site will lose me ranking juice from those links. Is this a correct scenario, and if so, should I prioritize changing the 302 to a 301? Cheers, Jez

| jez0000 -

Staging site and "live" site have both been indexed by Google

While building a site, we forgot to password-protect the staging site. Now that the site has been moved to the new domain, I've noticed that both the staging site (site.staging.com) and the "live" site (site.com) are being indexed. What is the best way to solve this problem? I was thinking about adding a 301 redirect from the staging site to the live site via .htaccess. Any recommendations?

| melen0 -

Title tag not showing on Google? Please help!

I've read the FAQs and searched the help center. My URL is: http://www.webygeeks.com I updated the title tags of my client's website 10-15 days ago, but the title on Google is still showing as the company name 😞 Why is that? The description is correct but the title is incorrect. Can you please recommend something, guys? Also, I am wondering why the Google cache is showing a date of September 5, when we changed the titles around 10-15 days ago: http://webcache.googleusercontent.com/search?q=cache:P45GOiHRaIUJ:www.webygeeks.com/+&cd=1&hl=en&ct=clnk I really appreciate your suggestions.

| lvp11380 -

How do I handle duplicate content of the same product in Multiple product categories?

I am building a BigCommerce store for selling framed art. Many of the pieces will fall into more than one product category. Let's say I have a framed print of a photograph of a western landscape. This piece would fit into these categories: "western", "landscape", and "photography". I would have three pages with duplicate content for just this one framed print. Will Google give me less PageRank because of this? Can all the link juice be given to just one of the three categories by using rel=canonical? If so, does anyone know how to do this on a BigCommerce site? I would appreciate any feedback. Thanks, Kelly

| Kelly_S0 -
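If each category copy is its own URL, the usual approach is for every copy to carry the same rel=canonical pointing at one preferred URL; Google treats the canonical as a strong hint rather than a directive. How to set it in BigCommerce is not something this sketch can confirm: the `/products/<slug>/` pattern is a hypothetical URL scheme used only to show the idea that the tag does not depend on the category.

```python
from html import escape

def canonical_tag(product_slug, base="https://www.example.com"):
    """Return the canonical <link> for a product, whatever category shows it.

    /products/<slug>/ is a hypothetical, category-independent URL pattern;
    substitute the store's real preferred product URL.
    """
    href = f"{base}/products/{product_slug}/"
    return f'<link rel="canonical" href="{escape(href, quote=True)}">'

# The same print listed under three categories emits one identical tag.
tags = {category: canonical_tag("western-landscape-framed-print")
        for category in ("western", "landscape", "photography")}
print(set(tags.values()))
```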

Can I mark up breadcrumbs without showing them? (responsive design)

I am working on a site that has a responsive design. We use faceted search for the desktop version but implemented a style of breadcrumbs for the mobile version, as sidebars take up too much screen real estate. On the desktop design we are applying display:none to the breadcrumbs. If we mark up those breadcrumbs and they are hidden behind display:none, can we still get the rich snippets? Will Google see this as cloaking? As a follow-up, is there a way to mark up breadcrumbs in the or somewhere else that is constant?

| MarloSchneider0 -

Disallow: /404/ - Best Practice?

Hello Moz Community, my developer has added this to my robots.txt file: Disallow: /404/ Is this considered good practice in the world of SEO? Would you do it for your clients? I feel he has great development knowledge but isn't too well versed in SEO. Thank you in advance, Nico.

| niconico1011 -
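Disallow rules are prefix matches on the path, so this line only stops compliant crawlers from fetching URLs that start with /404/; it does not change the status code that other missing pages return. Whether that matters depends on whether the site ever sends visitors to a /404/ URL, which the question doesn't say. A sketch with Python's robotparser; the `User-agent: *` group and the example paths are assumptions, since the question shows only the Disallow line.

```python
from urllib.robotparser import RobotFileParser

# The developer's rule, placed under an assumed catch-all user-agent group.
parser = RobotFileParser()
parser.parse(["User-agent: *", "Disallow: /404/"])

# Only paths beginning with "/404/" are blocked; "/404" itself is not.
for path in ["/404/", "/404/old-page", "/404", "/missing-page", "/products/"]:
    url = "https://www.example.com" + path
    print(path, parser.can_fetch("Googlebot", url))
```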

Spaces (actual spaces) in URL

Hi all, is there a huge loss of SEO performance if a URL shows spaces as actual spaces (i.e. %20) rather than a "-" (or indeed a "_")? I know the preferred option is to have a "-", but I am wondering whether it is worth our effort to manually change the "%20" to a "-" in all instances. Thanks 🙂 Diana

| Diana.varbanescu0 -
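If you do switch, each changed URL needs a 301 from its old %20 form, so much of the effort is building that old-to-new map. A minimal sketch that handles only spaces (literal or encoded as %20) and leaves the rest of the path untouched; the example paths are hypothetical.

```python
import re

def hyphenate(path):
    """Replace each run of spaces (literal or %20) with a single hyphen."""
    return re.sub(r"(?:%20| )+", "-", path)

def redirect_map(old_paths):
    """Return {old: new} for every path that changes: the 301s to set up."""
    return {old: hyphenate(old) for old in old_paths if hyphenate(old) != old}

print(redirect_map(["/guides/Blue%20Widget%20Guide", "/about-us"]))
# {'/guides/Blue%20Widget%20Guide': '/guides/Blue-Widget-Guide'}
```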

Meta Description VS Rich Snippets

Hello everyone, I have one question: is there a way to tell Google to use the meta description in the search results instead of the rich snippets? I have already read some posts here on Moz, but found no answer. One post said that if you have keywords in the meta description, Google may use it instead, but that isn't the case for us, as I do have keywords in the meta tags. The problem is that, this way, the descriptions shown are not compelling at all, as ours were intended to be. If the description doesn't count for ranking, why doesn't Google at least allow websites to use their own descriptions in the search results? I understand that spam may be the reason, but this also penalizes non-spammy websites that might convert more with a much more compelling description than the snippets. What do you think? And is there any way to fix this problem? Thanks! Eugenio

| socialengaged0 -

Is there a way for me to automatically download a website's sitemap.xml every month?

From now on we want to store all our sitemap.xml files over the coming years. It's a nice archive to have, as it allows us to analyse how many pages we have on our website and which ones were removed/redirected. Any suggestions? Thanks

| DeptAgency0 -
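One low-maintenance option is a small script run on a monthly schedule (cron on Linux/macOS, Task Scheduler on Windows) that saves each snapshot under a dated filename. A sketch under two assumptions: the sitemap URL you pass in is your own, and the sitemap is a single uncompressed file (a sitemap index or gzipped sitemap would need extra handling for its child files).

```python
from datetime import date
from pathlib import Path
from urllib.request import urlopen

def archive_path(archive_dir, today):
    """Name each snapshot by month, e.g. archive/sitemap-2024-03.xml."""
    return Path(archive_dir) / f"sitemap-{today:%Y-%m}.xml"

def download_sitemap(sitemap_url, archive_dir="archive"):
    """Fetch the sitemap and save it under this month's filename."""
    target = archive_path(archive_dir, date.today())
    target.parent.mkdir(parents=True, exist_ok=True)
    with urlopen(sitemap_url, timeout=30) as response:
        target.write_bytes(response.read())
    return target
```

The scheduled job then only needs to call something like `download_sitemap("https://www.example.com/sitemap.xml")` (a placeholder URL) once a month.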

How can I block incoming links from a bad website?

Hello all, we recently got a new client who had a warning in Google Webmaster Tools for a manual soft penalty. I did a lot of searching and found one particular site that sends roughly 100k links to one page and is potentially a high-risk site. I wish to block those links from coming in to my site, but their webmaster is nowhere to be found and I do not want to use the disavow tool. Is there a way I can add code to our .htaccess file, or any other method? I would appreciate a prompt response. Kind regards

| artdivision0 -

Mobile site ranking instead of/as well as desktop site in desktop SERPs

I have just noticed that the mobile version of my site sometimes ranks in the desktop SERPs, either instead of or as well as the desktop site. I hadn't noticed it in the past, as it doesn't happen with the keywords I track, which are highly competitive. It happens for results that include our brand name, e.g. '[brand name] [search term]'. The mobile site is served with mobile-optimised content from another URL, e.g. www.domain.com/productpage redirects to m.domain.com/productpage for mobile. Sometimes I only see the mobile URL in the desktop SERPs; other times I see both the desktop and mobile URLs for the same product. My understanding is that the mobile URL should not be ranking at all in desktop SERPs. Could we be penalised for either bad redirects or duplicate content? If you do believe it could be harming rankings, any ideas on how I could further diagnose and solve the problem?

| pugh0 -

Staging & development areas should not be indexable (i.e. noindex/nofollow in meta robots etc.)?

Hi, I take it that if there's a staging or development area on a subdomain for a site, whose content is therefore usually duplicate, then it should not be indexable, i.e. noindexed & nofollowed in meta robots? This would prevent duplicate content problems, as well as stop people not involved in the project from seeing work in progress or finding it accidentally in search engine listings. Also, if there's no such info in meta robots, is there any other way it may have been made non-indexable, or at least had the duplicate content problem removed, for example by canonicalising each page to the equivalent page on the live site? In the case in question, I am finding it listed in the SERPs when I search for the staging/dev area URL, so I presume this needs urgent attention? Cheers, Dan

| Dan-Lawrence0 -

CNAME vs 301 redirect

Hi all, I recently created a website for a new client, and my next job is trying to get them higher in Google. I added them in OSE and noticed some strange backlinks. To my surprise, the client has about 20 domain names, all now automatically pointing to (showing) the same new main site:

www.maindomain.nl
www.maindomain.be
www.maindomain.eu
www.maindomain.com
www.otherdomain.nl
www.otherdomain.com
...

Some of these domains have backlinks too (but not many). I suggested 301 redirecting them all to the main site, just to avoid duplicate content. But now the web host comes into play: "It's a problem, the client has only 1 hosting account, blah blah blah...". They told me they could CNAME the 20 domains to the main domain, or A-record them to an IP address. This is too technical for me. So my concrete questions are: Is it smart to do anything at all, or am I just harming my client? The main site is ranking pretty well now, and some backlinks come from their copy sites (probably because everywhere the logo links to the full main site URL). Does the CNAME or A-record solution have the same effect as a 301 redirect from an SEO perspective? Many thanks, Hans

| Houdoe0 -

Redirecting root domain to a page based on user login

We have our main URL redirecting non-logged-in users to a specific page, while logged-in users are directed to their dashboard when going to the main URL. We find this the most user-friendly approach; however, this is all being picked up as a 302 redirect. I am trying to advise on the ideal way to accomplish this, but I am not having much luck finding information. I believe we are going to put a true homepage at the root domain and simply redirect logged-in users as usual when they hit the URL, but I'm still concerned this will cause issues with Google and other search engines. Does anyone have experience with domains that need to work this way? Thank you! Anna

| annalytical0 -

How to find all crawlable links on a particular page?

Hi! This might sound like a newbie question, but I'm trying to find all crawlable links (that Googlebot sees) on a particular page of my website. I'm trying to use Screaming Frog, but that gives me all the links on that page AND on all subsequent pages in the given subdirectory. What I want is ONLY the crawlable links pointing away from that particular page. What is the best way to go about this? Thanks in advance.

| AB_Newbie0
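For a single page, parsing its HTML yourself gives exactly that page's outgoing links and nothing deeper. This sketch only sees links present in the raw HTML as `<a href>`; links added by JavaScript won't appear, and Googlebot, which renders pages, may see a different set, so treat the result as an approximation. The sample HTML and page URL are made up.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin

class LinkCollector(HTMLParser):
    """Collect (absolute URL, is_nofollow) for each <a href> on one page."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        href = attrs.get("href")
        # Skip anchors with no real target (empty, same-page, script, mail, phone).
        if not href or href.strip().lower().startswith(
                ("#", "javascript:", "mailto:", "tel:")):
            return
        # Resolve relative links and drop any #fragment.
        url, _fragment = urldefrag(urljoin(self.page_url, href))
        nofollow = "nofollow" in (attrs.get("rel") or "").lower().split()
        self.links.append((url, nofollow))

sample_html = ('<a href="/a">A</a> <a href="#top">Top</a> '
               '<a href="https://x.com/" rel="nofollow">X</a>')
collector = LinkCollector("https://www.example.com/page/")
collector.feed(sample_html)
print(collector.links)
```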
