How to Completely Ruin (or Save) Your Website with Redirects

Have you ever redirected a page hoping to see a boost in rankings, but nothing happened? Or worse, traffic actually went down?

When done right, 301 redirects have awesome power to clean up messy architecture, solve outdated content problems and improve user experience — all while preserving link equity and your ranking power.

When done wrong, the results can be disastrous.

In the past year, because Google cracked down hard on low-quality links, the potential damage from 301 mistakes increased dramatically. There's also evidence that Google has slightly changed how it handles non-relevant redirects, which makes proper implementation more important than ever.

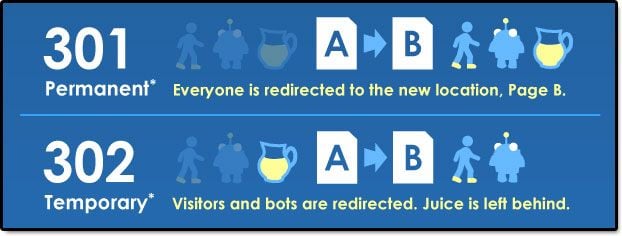

From Dr. Pete's post: An SEO's Guide to HTTP Status Codes

Semantic relevance 101: anatomy of a "perfect" redirect

A perfect 301 redirect works as a simple “change of address” for your content. Ideally, this means everything about the page except the URL stays the same, including content, title tag, images, and layout.

When done properly, we know from testing and statements from Google that a 301 redirect passes somewhere around 85% of its original link equity.

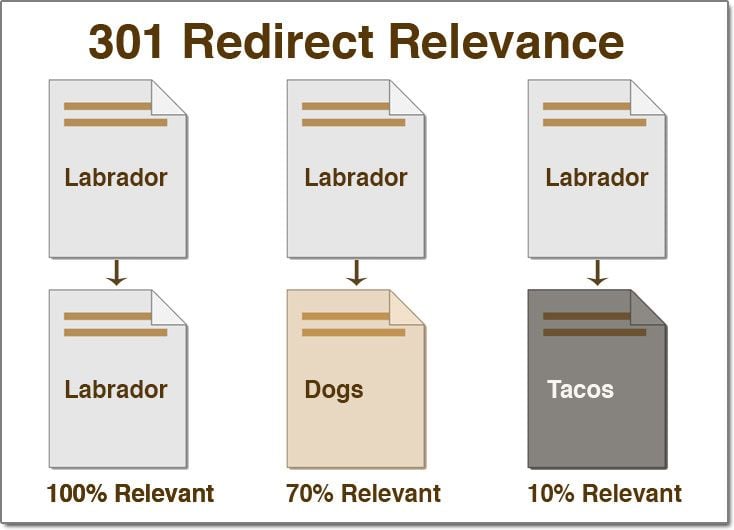

The new page doesn’t have to be a perfect match for the 301 to pass equity, but problems arise when webmasters use the 301 to redirect visitors to non-relevant pages. The further away you get from semantically relevant content, the less likely your redirect will pass maximum link equity.

For example, if you have a page about “labrador,” then redirecting to a page about “dogs” makes sense, but redirecting to a page about “tacos” does not.
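To make "the further away you get" a little more concrete, one rough proxy is how many hops apart two pages sit in your own site taxonomy. The sketch below assumes a hypothetical `PARENT` topic map (a tree, with no cycles) and a hypothetical `topic_distance` helper; it is a planning aid for choosing redirect targets, not a description of how Google scores relevance.

```python
from typing import List, Optional

# Hypothetical site taxonomy: each topic points at its parent topic.
# Assumed to be a tree (no cycles).
PARENT = {
    "labrador": "dogs",
    "beagle": "dogs",
    "dogs": "pets",
    "tacos": "mexican-food",
    "mexican-food": "food",
}


def ancestors(topic: str) -> List[str]:
    """The topic itself followed by each parent, up to the top of the tree."""
    chain = [topic]
    while chain[-1] in PARENT:
        chain.append(PARENT[chain[-1]])
    return chain


def topic_distance(old: str, new: str) -> Optional[int]:
    """Hops between two topics through their closest shared ancestor,
    or None when they share no ancestor at all."""
    new_chain = ancestors(new)
    for hops_up, topic in enumerate(ancestors(old)):
        if topic in new_chain:
            return hops_up + new_chain.index(topic)
    return None


print(topic_distance("labrador", "dogs"))    # 1
print(topic_distance("labrador", "beagle"))  # 2
print(topic_distance("labrador", "tacos"))   # None
```

Smaller numbers suggest a safer redirect target; `None` is the "tacos" case.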

A clue to this devaluation comes from the manner in which search engines deal with content that changes significantly over a period of time.

The famous Google patent, Information retrieval based on historical data, explains how older links might be ignored if the text of a page changes significantly or the anchor text pointing to a URL changes in a big way:

...the domain may show up in search results for queries that are no longer on topic. This is an undesirable result. One way to address this problem is to estimate the date that a domain changed its focus. This may be done by determining a date when the text of a document changes significantly or when the text of the anchor text changes significantly. All links and/or anchor text prior to that date may then be ignored or discounted.

If these same properties apply to 301 redirects, it would go a long way toward explaining why pages don't get a boost when off-topic pages are redirected to them.
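The patent doesn't say how "changes significantly" is measured. Purely as an illustration, the sketch below assumes word-set (Jaccard) similarity between dated snapshots of a page and an arbitrary 0.3 threshold, then discounts links first seen before the most recent big change. The similarity measure, the threshold, and the helper names (`focus_change_date`, `countable_links`) are all hypothetical, not Google's implementation.

```python
from datetime import date


def jaccard(a: str, b: str) -> float:
    """Word-set overlap between two texts: 0.0 = disjoint, 1.0 = same words."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    if not words_a and not words_b:
        return 1.0
    return len(words_a & words_b) / len(words_a | words_b)


def focus_change_date(snapshots, threshold=0.3):
    """Date of the latest snapshot that differs sharply from the one before it.
    `snapshots` is a date-sorted list of (date, text) pairs; None if no change."""
    change = None
    for (_, prev_text), (day, text) in zip(snapshots, snapshots[1:]):
        if jaccard(prev_text, text) < threshold:
            change = day
    return change


def countable_links(links, snapshots, threshold=0.3):
    """Keep only links first seen on or after the estimated change of focus.
    `links` is a list of (first_seen_date, source_url) pairs."""
    cutoff = focus_change_date(snapshots, threshold)
    if cutoff is None:
        return list(links)
    return [link for link in links if link[0] >= cutoff]


snapshots = [
    (date(2011, 1, 1), "labrador puppies training guide"),
    (date(2011, 6, 1), "labrador puppies training guide and tips"),
    (date(2012, 3, 1), "best fish tacos recipe"),  # the page changed focus
]
links = [
    (date(2010, 5, 1), "https://old-dog-blog.example/"),
    (date(2012, 4, 1), "https://taco-fans.example/"),
]
print(countable_links(links, snapshots))
# [(datetime.date(2012, 4, 1), 'https://taco-fans.example/')]
```

The dog-blog link predates the switch to tacos, so under these assumptions it no longer counts.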

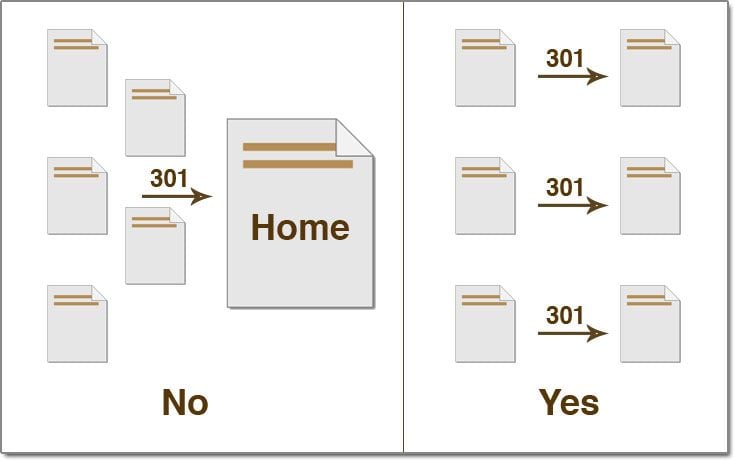

301 redirecting everything to the home page

Savvy SEOs have known for a long time that redirecting a huge number of pages to a home page isn’t the best policy, even when using a 301. Recent statements by Google representatives suggest that Google may go a step further and treat bulk redirects to the home page of a website as 404s, or soft 404s at best.

This means that instead of passing link equity through the 301, Google may simply drop the old URLs from its index without passing any link equity at all.

While it’s difficult to prove exactly how search engines handle mass home page redirects, it’s fair to say that any time you 301 a large number of pages to a single questionably relevant URL, you shouldn’t expect those redirects to significantly boost your SEO efforts.
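Before a migration, a quick check of your redirect map can show whether you are about to funnel most old URLs into the home page. This is a minimal sketch assuming the map is a plain dict of old URL to new URL; the helper names and the 0.5 cut-off are hypothetical starting points, not known search-engine thresholds.

```python
from collections import Counter
from typing import Dict, List, Tuple
from urllib.parse import urlsplit


def is_home_page(url: str) -> bool:
    """True if the URL points at the site root ("/" or an empty path)."""
    return urlsplit(url).path in ("", "/")


def audit_redirect_targets(
    redirects: Dict[str, str], max_share: float = 0.5
) -> Tuple[float, List[Tuple[str, int]]]:
    """Summarize how concentrated a redirect map (old URL -> new URL) is.

    Returns the share of old URLs sent to the home page, plus every target
    that receives more than `max_share` of all redirects (an arbitrary cut-off).
    """
    total = len(redirects)
    if total == 0:
        return 0.0, []
    home_share = sum(is_home_page(target) for target in redirects.values()) / total
    counts = Counter(redirects.values())
    crowded = [(t, n) for t, n in counts.most_common() if n / total > max_share]
    return home_share, crowded


redirects = {
    "https://example.com/old-promo-1": "https://example.com/",
    "https://example.com/old-promo-2": "https://example.com/",
    "https://example.com/old-promo-3": "https://example.com/",
    "https://example.com/labrador-care": "https://example.com/dogs/",
}
print(audit_redirect_targets(redirects))
# (0.75, [('https://example.com/', 3)])
```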

Better alternative: When necessary, redirect relevant pages to closely related URLs. Category pages are better than a general home page.

If the page is no longer relevant, receives little traffic, and a better page does not exist, it’s often perfectly okay to serve a 404 or 410 status code.
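The advice above can be written as a simple preference order. This sketch assumes you already know, for each retired page, whether a closely related page or a category page exists and roughly how many visits it gets; `choose_response` and the `min_visits` cut-off are hypothetical. Returning 200 for a still-popular page with no replacement is an added assumption: leave it live until a better destination exists.

```python
from typing import Optional, Tuple


def choose_response(
    related_url: Optional[str],
    category_url: Optional[str],
    monthly_visits: int,
    min_visits: int = 10,
) -> Tuple[int, Optional[str]]:
    """Pick (status, target) for a page you want to retire.

    Preference: a closely related page, then a category page, never the
    home page. `min_visits` is a hypothetical "little traffic" cut-off.
    """
    if related_url:
        return 301, related_url
    if category_url:
        return 301, category_url
    if monthly_visits < min_visits:
        return 410, None  # nothing better exists and almost nobody visits
    # Assumption: a page that still gets real traffic but has no good
    # replacement stays live (200) for now.
    return 200, None


print(choose_response(None, "https://example.com/dogs/", monthly_visits=3))
# (301, 'https://example.com/dogs/')
print(choose_response(None, None, monthly_visits=3))
# (410, None)
```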

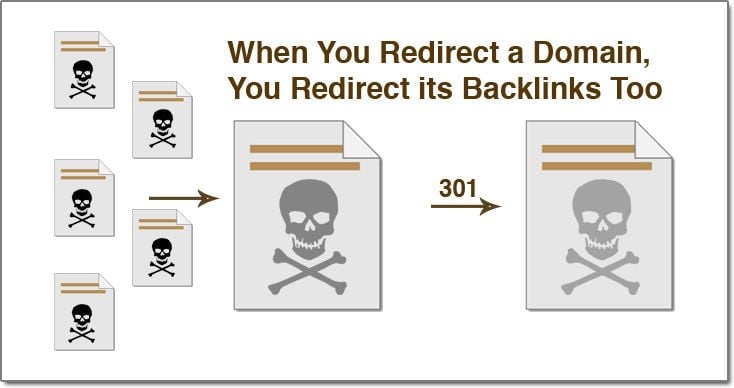

Danger: 301 redirects and bad backlinks

Before Penguin, SEOs widely believed that bad links couldn’t hurt you, and redirecting entire domains with bad links wasn’t likely to have much of an effect.

Then Google dropped the hammer on low-quality links.

If the Penguin update and developments of the past year have taught us anything, it’s this:

When you redirect a domain, its bad backlinks go with it.

Webmasters often roll up several older domains into a single website, not realizing that bad backlinks may harbor poison that sickens the entire effort. If you’ve been penalized or suffered from low-quality backlinks, it’s often easier and more effective to simply stop the redirect than to try to clean up individual links.

Individual URLs with bad links

The same concept works at the individual URL level. If you redirect a single URL with bad backlinks attached to it, those bad links will then point to your new URL.

In this case, it’s often better to simply remove the page with a 404 or 410 and let those links drop from the index.
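Serving the right status per URL is usually a web server or CMS setting, but the logic is small enough to show as a minimal WSGI app (the standard Python web-server interface). The `REDIRECTS` and `RETIRED` tables are hypothetical stand-ins for the output of your own link audit: moved pages get a 301, pages whose backlinks you'd rather not inherit get a 410.

```python
# Hypothetical audit results: where moved pages now live, and which URLs
# to retire instead of redirecting (e.g. ones carrying bad backlinks).
REDIRECTS = {"/old-labrador-guide": "/dogs/labrador/"}
RETIRED = {"/spammy-links-page"}


def redirect_app(environ, start_response):
    """Minimal WSGI app: 301 for moved URLs, 410 for retired ones, else 404."""
    path = environ.get("PATH_INFO", "/")
    if path in REDIRECTS:
        start_response("301 Moved Permanently", [("Location", REDIRECTS[path])])
        return [b""]
    if path in RETIRED:
        start_response("410 Gone", [("Content-Type", "text/plain")])
        return [b"This page has been permanently removed.\n"]
    # In a real site this branch would hand off to the main application.
    start_response("404 Not Found", [("Content-Type", "text/plain")])
    return [b"Not found.\n"]
```

To try it locally, `wsgiref.simple_server.make_server("127.0.0.1", 8000, redirect_app).serve_forever()` from the standard library is enough. A 410 says "gone on purpose," which is a slightly stronger signal than a 404.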

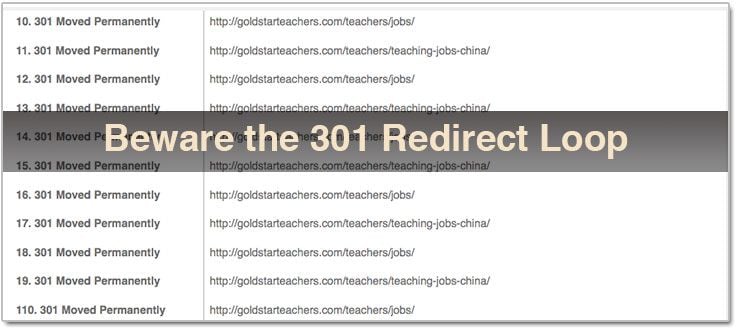

Infinite loops and long chains

If you perform an SEO audit on a site, you’ll hopefully discover any potentially harmful redirect loops or crawling errors caused by overly complex redirect patterns.

While it’s generally believed that Google will follow many, many redirects, each step has the potential to diminish link equity, dilute anchor text relevance, and lead to crawling and indexing errors.
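If you take the rough 85% figure from earlier and assume, purely for illustration, that every hop in a chain loses the same share, the loss compounds quickly. Google hasn't confirmed that chains behave this way. Here $E_0$ is the equity of the original URL and $E_n$ is what's left after $n$ hops:

$$
E_n \approx E_0 \cdot 0.85^{\,n}, \qquad 0.85^{2} = 0.7225, \quad 0.85^{3} \approx 0.614, \quad 0.85^{4} \approx 0.522
$$

Under that assumption, a three-hop chain keeps only about 61% of the original equity, versus about 85% for a single, direct 301.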

One or two steps is generally the most you want out of any redirect chain.
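If you have your redirect rules as a source-to-target map, you can find chains and loops before a crawler does. This sketch assumes a plain dict of path to path; `resolve` and `flatten` are hypothetical helper names. It points every old URL straight at its final destination and sets aside loops for a human to fix.

```python
from typing import Dict, List, Tuple


def resolve(redirects: Dict[str, str], start: str) -> Tuple[str, List[str], bool]:
    """Follow the map from `start`. Returns (final_url, hops, is_loop),
    where `hops` lists every URL visited after `start`."""
    seen = {start}
    hops: List[str] = []
    url = start
    while url in redirects:
        url = redirects[url]
        if url in seen:  # we've been here before: a loop
            return url, hops + [url], True
        seen.add(url)
        hops.append(url)
    return url, hops, False


def flatten(redirects: Dict[str, str]) -> Tuple[Dict[str, str], List[str]]:
    """Rewrite every rule as a single hop to its final destination.
    Sources caught in loops are returned separately, unfixed."""
    flat: Dict[str, str] = {}
    loops: List[str] = []
    for source in redirects:
        final, _, is_loop = resolve(redirects, source)
        if is_loop:
            loops.append(source)
        else:
            flat[source] = final
    return flat, loops


redirects = {"/a": "/b", "/b": "/c", "/c": "/d", "/x": "/y", "/y": "/x"}
print(resolve(redirects, "/a"))  # ('/d', ['/b', '/c', '/d'], False): three hops
print(flatten(redirects))
# ({'/a': '/d', '/b': '/d', '/c': '/d'}, ['/x', '/y'])
```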

New changes for 302s

SEOs typically hate 302s, but recent evidence suggests search engines may now be changing how they handle them — at least a little.

Google knows that webmasters make mistakes, and recent tests by Geoff Kenyon showed that 302 redirects have the potential to pass link equity. The theory is that 302s (meant to be temporary) are so often implemented incorrectly that Google treats them as “soft” 301s.
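Even if search engines forgive some mistakes, it's still worth knowing which of your moves were shipped as 302s. This sketch uses only Python's standard `http.client` to send one HEAD request without following redirects and report the status and `Location` header; `check_redirect` is a hypothetical helper name, and some servers answer HEAD differently from GET.

```python
import http.client
from typing import Optional, Tuple
from urllib.parse import urlsplit

PERMANENT = {301, 308}
TEMPORARY = {302, 303, 307}


def check_redirect(url: str, timeout: float = 10.0) -> Tuple[int, Optional[str], str]:
    """One HEAD request, no redirect following.
    Returns (status, Location header, kind)."""
    parts = urlsplit(url)
    conn_cls = (http.client.HTTPSConnection if parts.scheme == "https"
                else http.client.HTTPConnection)
    conn = conn_cls(parts.netloc, timeout=timeout)  # netloc may include ":port"
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    try:
        conn.request("HEAD", path)
        resp = conn.getresponse()
        status, location = resp.status, resp.getheader("Location")
    finally:
        conn.close()
    if status in PERMANENT:
        kind = "permanent"
    elif status in TEMPORARY:
        kind = "temporary"
    else:
        kind = "not a redirect"
    return status, location, kind
```

For example, `check_redirect("https://example.com/old-page")` on a page you moved for good should report `"permanent"`; a `"temporary"` result is worth changing to a 301.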

Duane Forrester of Bing addressed this in a recent tweet.

So, not only do search engines limit us when we try to get too clever, but they also help to keep us from shooting ourselves in the foot.

The author's views are entirely their own (excluding the unlikely event of hypnosis) and may not always reflect the views of Moz.
